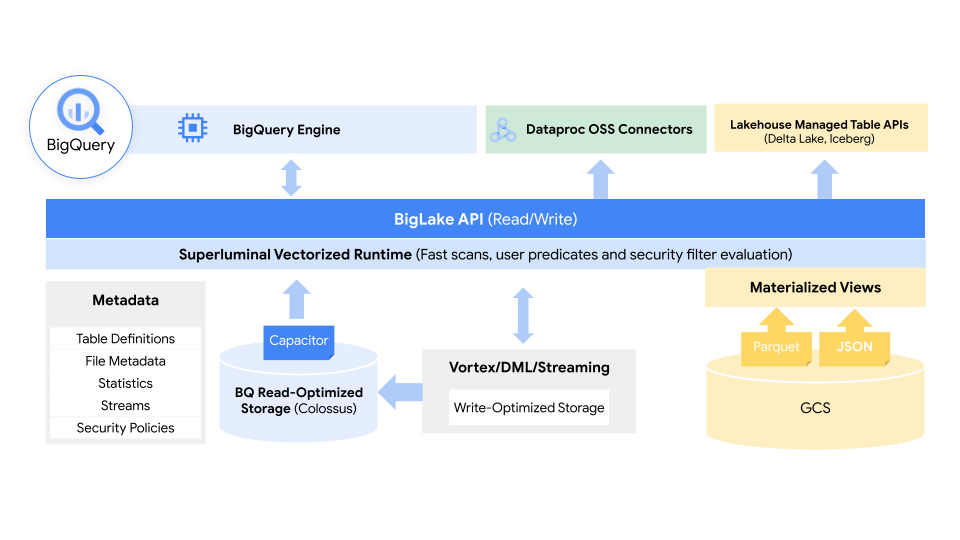

BigLake is Google's new data lake storage engine, designed to make it easier for enterprises to analyze the data in their data warehouses and data lakes.

The idea is to combine the best of data lakes and warehouses into a single service that abstracts away the underlying storage formats and systems.

This data could sit in BigQuery or live in cloud object storage. BigLake gives developers a single storage engine through which they can query the underlying data stores, without having to move or duplicate data.

Managing data across disparate lakes and warehouses creates silos and increases risk and cost, especially when data needs to be moved.

Image Credits: Google

BigLake allows admins to configure their security policies at the table, row and column level. This covers data stored in Google Cloud Storage as well as in the two supported third-party systems, where BigQuery Omni, Google's multi-cloud analytics service, enables these security controls. The controls also ensure that only the right data flows into downstream tools. In addition, the service works with the Dataplex tool to provide further data management capabilities.
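To make the three levels of control concrete, here is a minimal Python sketch of how table-, row- and column-level policies could combine to decide what a given user sees. This is a toy model only: `TablePolicy`, `read_table` and the sample `orders` data are hypothetical names invented for illustration and are not part of BigLake or any Google API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set

Row = Dict[str, Any]


@dataclass
class TablePolicy:
    """Hypothetical policy for one table (illustration only, not a BigLake API)."""
    allowed_readers: Set[str]                  # table level: who may read at all
    row_filter: Callable[[str, Row], bool]     # row level: which rows a user sees
    # column level: restricted column -> users who may still see it
    restricted_columns: Dict[str, Set[str]] = field(default_factory=dict)


def read_table(user: str, rows: List[Row], policy: TablePolicy) -> List[Row]:
    """Return only the rows and columns the policy lets `user` see."""
    if user not in policy.allowed_readers:
        raise PermissionError(f"{user} may not read this table")
    visible = []
    for row in rows:
        if not policy.row_filter(user, row):
            continue  # row-level filter hides this row
        visible.append({
            col: val
            for col, val in row.items()
            if col not in policy.restricted_columns
            or user in policy.restricted_columns[col]
        })
    return visible


# Made-up sample data and policy.
orders = [
    {"region": "EU", "customer_email": "a@example.com", "total": 120},
    {"region": "US", "customer_email": "b@example.com", "total": 75},
]
policy = TablePolicy(
    allowed_readers={"eu_analyst", "admin"},
    row_filter=lambda user, row: user == "admin" or row["region"] == "EU",
    restricted_columns={"customer_email": {"admin"}},
)

print(read_table("eu_analyst", orders, policy))
# prints [{'region': 'EU', 'total': 120}]
```

Because the filtering happens in one place before any data is returned, every downstream consumer receives the same restricted view, which is the property the article attributes to BigLake's controls.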

BigLake will provide fine-grained access controls, along with support for open file formats such as Apache Parquet and open-source processing engines like Apache Spark.

The volume of valuable data that organizations have to manage and analyze is growing at an incredible rate. As an organization's data gets more complex, silos emerge, creating increased risk and cost, especially when that data needs to be moved. Google says its customers have made it clear that they need help.

A new feature called change streams will soon make it possible to track changes to a database in real time.

The company also launched Connected Sheets for Looker today, along with the ability to access Looker data models in its Data Studio BI tool.