Smartphone cameras have reached the limit of what can be done in such a small space, so it is getting harder and harder to improve them. Glass is a startup looking to fundamentally change how the phone camera works, using a much bigger sensor and an optical trick from the depths of film history.

It may not be obvious that phone cameras are running out of room to improve, since we have seen such advances in recent generations of phones. But those advances have used up all the slack left in this line of development.

To improve the image, you need a bigger sensor, a better lens or some kind of computational wizardry. Sensors can't get much bigger, because they need bigger lenses to match, and there is no room for bigger lenses in a phone body, even in phones built around their cameras. Computational photography is great, but there is only so much it can do, and you reach a point of diminishing returns very quickly.

Glass co-founder and CEO Ziv Attar explained that the limitations used to be about price, but now they are more fundamental. He and co-founder Tom Bishop both came from Apple, where they worked on Portrait Mode.

Phone makers first made lenses wider, then started making sensors bigger. Night mode takes exposure stacking to extremes, but if you zoom in, the result starts to look weird and fake.

The phone screen kind of deceives us. Once you can see the difference, it is clear there is a lot of work still to be done.

What is that work? According to Attar, the only thing that really makes sense to change is the lens. It can't get any bigger if you stick with a normal, symmetrical lens assembly. But why should a lens be symmetrical? Cinema gave up on that constraint a century ago.

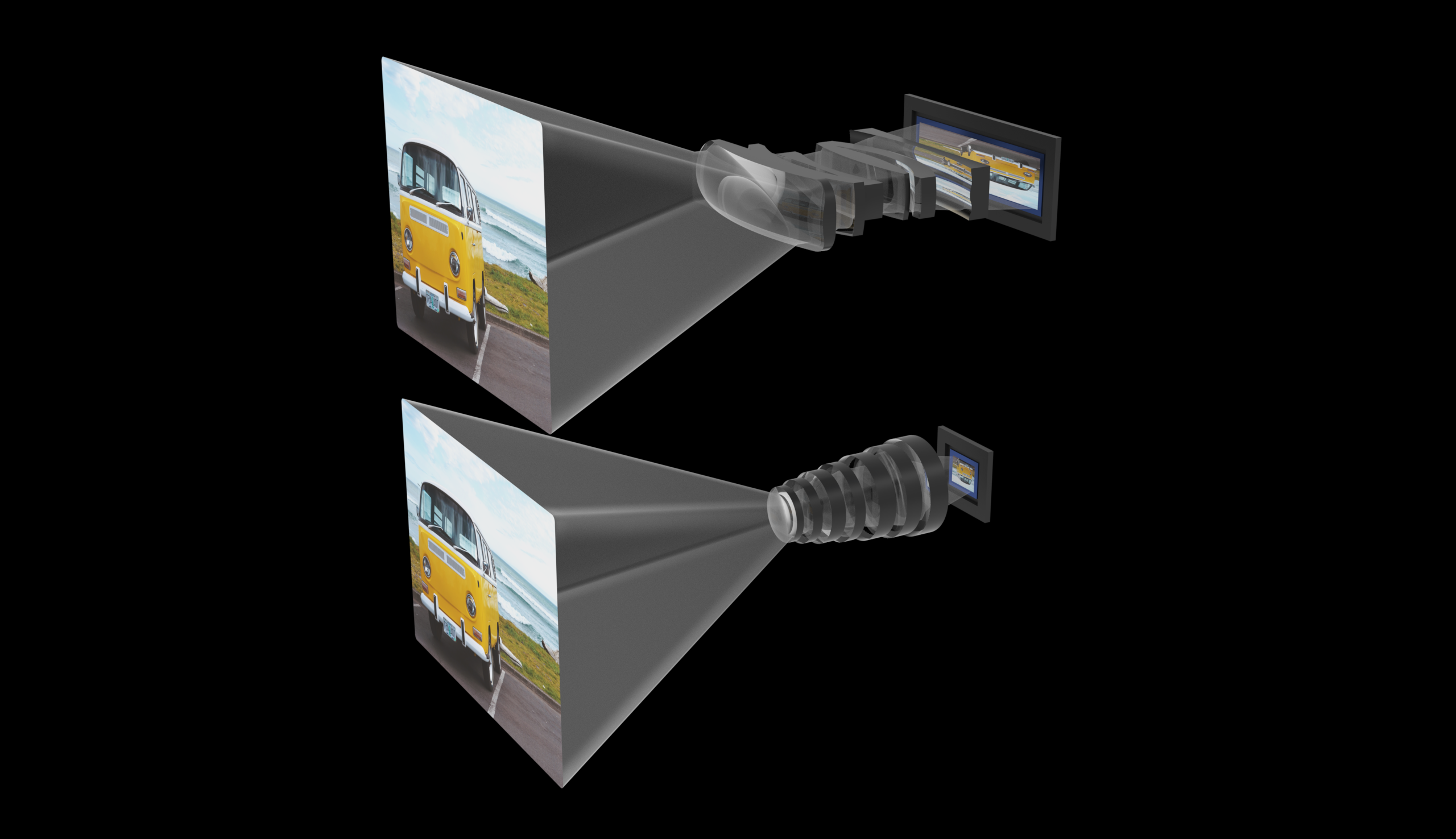

Examples of anamorphic and traditional symmetrical lenses, showing the internal image size each produces. Image credit: Glass

Films were not always widescreen. They used to be closer to the squarish shape of a 35mm film frame. If you matted out the top and bottom of the frame, you could show a widescreen image, which audiences liked, but you were really just zooming in on part of the film, and you paid for it in detail. The problem was solved by a technique first tested in the 1920s: the anamorphic lens.

An anamorphic lens squeezes a wide field of view in from the sides so it fits onto the film. When the film is projected through an anamorphic projector lens, the image is stretched back out to the desired aspect ratio. The technique has its own distinctive optical quirks, and I won't describe them here, because once you notice them you will never be able to un-see them.
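To put a rough number on the detail that matting throws away, the sketch below compares the two approaches. Matting keeps the frame's width and crops its height, so it keeps `frame_aspect / target_aspect` of the frame area; an anamorphic lens instead squeezes the scene horizontally so the whole frame is used. The 4:3 frame and 2.39:1 target are illustrative assumptions, not figures from the article, and the function names are hypothetical.

```python
def matted_fraction(frame_aspect: float, target_aspect: float) -> float:
    """Fraction of the frame's area kept when matting (cropping top and bottom)
    a frame of width/height `frame_aspect` down to a wider `target_aspect`."""
    if target_aspect < frame_aspect:
        raise ValueError("matting only removes height, so the target must be wider")
    # Width is unchanged; height shrinks from width/frame_aspect to width/target_aspect.
    return frame_aspect / target_aspect


def squeeze_factor(frame_aspect: float, target_aspect: float) -> float:
    """Horizontal squeeze an anamorphic lens would need so that a scene of
    `target_aspect` fills the entire `frame_aspect` frame (all area used)."""
    return target_aspect / frame_aspect


# Illustrative numbers only: a 4:3 frame and a 2.39:1 widescreen target.
frame, target = 4 / 3, 2.39
print(f"matted: keeps {matted_fraction(frame, target):.0%} of the frame")
print(f"anamorphic: squeeze by {squeeze_factor(frame, target):.2f}x, uses the whole frame")
```

With these assumed numbers, matting discards a bit under half of the frame, while the anamorphic route spends all of it on the picture.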

The lens system Glass proposes uses the same principles and shapes. It started with the question of how to fit a larger sensor. A larger square sensor needs a larger lens, but what if you made the sensor longer instead? That would require a longer, rectangular lens as well. The anamorphic technique lets you capture a larger but distorted image and convert it to the correct aspect ratio in the image processor. The process isn't exactly like the film technique, but it uses the same principles.
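The "convert it to the correct aspect ratio" step is, at its simplest, a resampling that stretches one axis. Glass says it uses a trained model for this; the sketch below swaps in plain linear interpolation between rows as a simple stand-in, stretching vertically because the article describes the corrected image as taller. The function name, the grayscale list-of-rows representation and the 2x factor are assumptions for illustration, not Glass's pipeline.

```python
def desqueeze_vertical(image: list[list[float]], factor: float) -> list[list[float]]:
    """Stretch a grayscale image (a list of rows) vertically by `factor`,
    linearly interpolating between neighbouring source rows."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    src_h = len(image)
    dst_h = round(src_h * factor)
    out = []
    for y in range(dst_h):
        # Map the centre of destination row y back into source-row coordinates.
        src_y = (y + 0.5) / factor - 0.5
        # Clamp so edge rows repeat instead of reading out of bounds.
        src_y = min(max(src_y, 0.0), src_h - 1)
        y0 = int(src_y)              # row above (floor, since src_y >= 0)
        y1 = min(y0 + 1, src_h - 1)  # row below, clamped at the bottom edge
        t = src_y - y0               # blend weight toward the lower row
        out.append([(1 - t) * a + t * b for a, b in zip(image[y0], image[y1])])
    return out


# A two-row "squeezed" image stretched to twice its height.
print(desqueeze_vertical([[0.0], [1.0]], 2.0))
```

Linear interpolation can only invent smooth in-between rows, which is exactly where poorly done stretching shows artifacts; that is presumably why a learned approach is attractive here.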

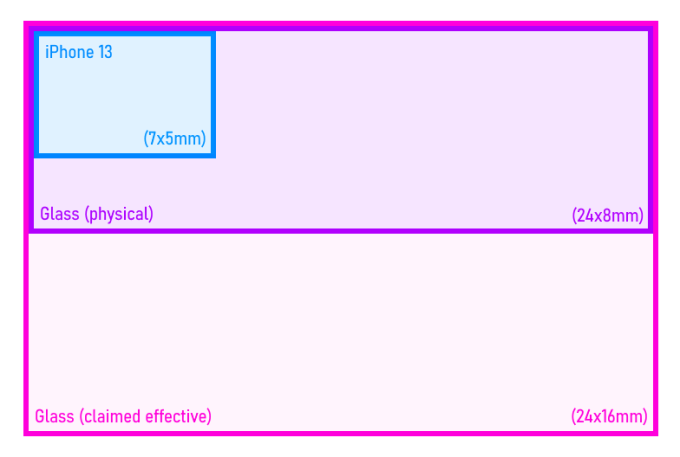

How much larger an image can you capture? The main camera of the iPhone 13 has a sensor of about 35 square millimeters. The Glass prototype uses a sensor about 6 times larger than that. Here is a chart for casual reference.

Image credit: Devin Coldewey

For comparison, increasing a phone's sensor size by 15 or 20 percent is normally considered an enormous leap.

By the company's way of measuring, the difference is even bigger. If you expanded the captured image to its correct aspect ratio, it would be twice as tall, putting it just shy of the APS-C standard used in many DSLRs. That is the basis for the company's claim of 11 times the area of an iPhone sensor. Evaluating these metrics is not straightforward, but however you measure it, the gain would make a huge difference for a phone upgrade.
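The arithmetic behind that claim can be reproduced roughly from the article's own rounded figures: about 35 mm² for the iPhone 13 main sensor, a physical sensor about 6 times larger, and an image that becomes twice as tall once desqueezed. The sketch below treats the desqueezed image as an "effective" area, which is an assumed reading of how the company measures it. It lands at about 12 times, in the neighborhood of the claimed 11 times; the gap is within the rounding of "about 6 times".

```python
IPHONE_13_MAIN_MM2 = 35.0  # iPhone 13 main-camera sensor area quoted in the article


def effective_area(physical_ratio: float, desqueeze: float,
                   baseline_mm2: float = IPHONE_13_MAIN_MM2) -> tuple[float, float, float]:
    """Return (physical area, effective area after desqueeze, ratio to baseline).
    Assumption: stretching the image `desqueeze` times taller scales its area
    by the same factor."""
    physical = baseline_mm2 * physical_ratio
    effective = physical * desqueeze
    return physical, effective, effective / baseline_mm2


physical, effective, ratio = effective_area(physical_ratio=6, desqueeze=2)
print(f"physical ~{physical:.0f} mm^2, effective ~{effective:.0f} mm^2, ~{ratio:.0f}x")
```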

The approach has benefits and drawbacks. The most important benefit is the increase in light-gathering and resolving power. A sensor that simply takes in more light produces better exposures in general and better shots in challenging conditions, with more detail than images from ordinary phones.

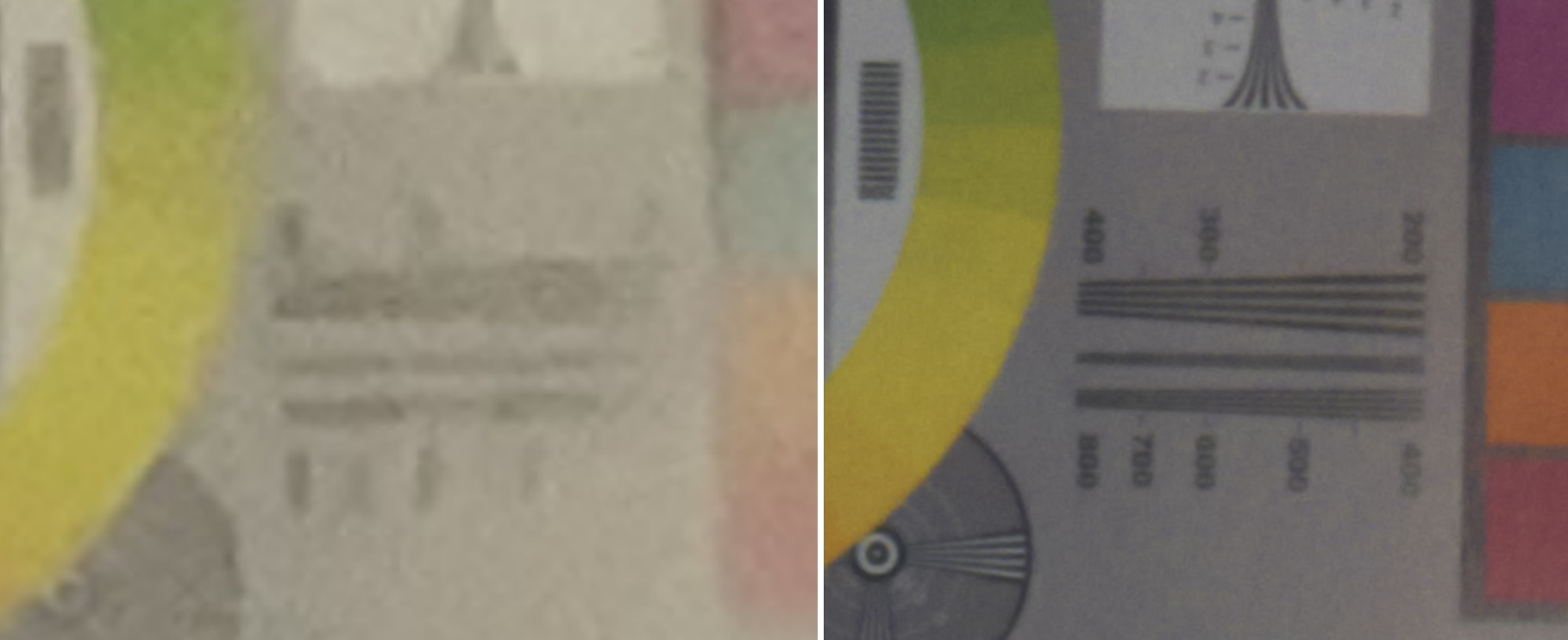

Images from an iPhone 12 Pro Max and the Glass prototype. Image credit: Glass

It's hard to make an apples-to-apples comparison when the focal lengths, image processing and output resolution differ so much. The full-size original images are available here.

An example of a very low-light exposure. Image credit: Glass

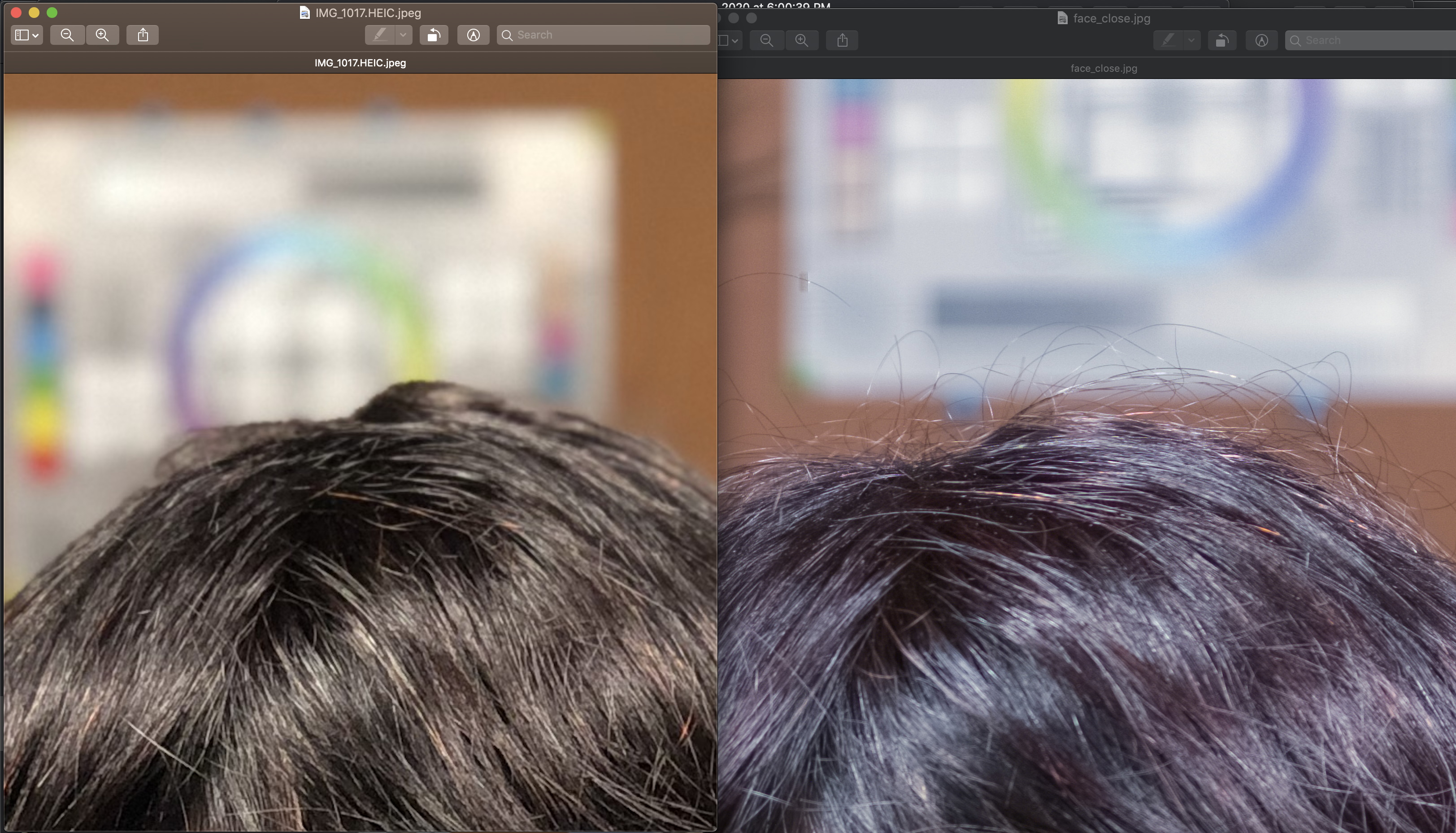

The larger sensor and the nature of the optics also produce natural background blur. Portrait mode is a favorite among users, but even the best implementations are not perfect. The Glass prototype achieves the effect optically, the same way a larger digital camera would, so there is no chance of the weird mistakes computational portrait modes make, such as clipping out hair and other fine details or failing to achieve the depth effect in subtler ways.

The image on the left shows portrait mode on an Apple device, while the image on the right shows the unprocessed Glass shot, which lacks the smoothing and artifacts of the manipulated one. Image credit: Glass

There would be no optical zoom, but Attar pointed out that a Glass system can zoom in digitally. I'm not normally one to say a digital zoom is fine, but in this case the sheer size of the lens and sensor makes up for it.
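Why the sensor size makes up for it: a crop-based digital zoom of factor z keeps 1/z of each dimension, so it uses only 1/z² of the sensor area. The sketch below takes the company's claimed 11x area at face value (an assumption, not an independent measurement) and shows how much of that advantage survives a crop; lens sharpness and processing are ignored. The function name is hypothetical.

```python
def area_after_crop(area_ratio: float, zoom: float) -> float:
    """Sensor area still in use after a digital (crop) zoom, expressed relative
    to the same baseline as `area_ratio`. A zoom of z keeps 1/z of the width and
    1/z of the height, i.e. 1/z**2 of the area."""
    if zoom < 1:
        raise ValueError("digital zoom crops, so zoom must be >= 1")
    return area_ratio / zoom ** 2


# Assumed: the claimed 11x area relative to the iPhone 13 main sensor.
for z in (1, 2, 3):
    print(f"{z}x digital zoom: ~{area_after_crop(11, z):.2f}x the iPhone 13 main sensor area")
```

Under that assumption, even a 2x crop still uses roughly 2.75 times the area of the iPhone 13's uncropped main sensor.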

The benefits are significant. The improvement in light and detail puts it ahead of even the best phone cameras. A bad exposure is noticeable at any size, even if the smallest details escape your notice on a small screen.

The drawbacks come mainly from the challenges of operating a camera that works completely differently from a traditional one. There are plenty of distortions and aberrations to correct, as there would be with a symmetrical lens of this size, but they are of a different type.

Distortions are constrained during design and we know in advance that we can correct them.

One effect I find disorienting is the shape of the bokeh. Ordinarily, out-of-focus highlights blur out into little translucent discs, but in the Glass system they become a series of crescents and ovals.

Image credit: Glass

To my neurotic eye, it just doesn't look right; it looks unnatural. You can see the same effect in film and TV, where it is common in productions shot with anamorphic lenses, but not in still images or selfies.

I assumed there would also be drawbacks from the need to stretch the image digitally, since that sort of thing can introduce unwanted artifacts if done poorly. According to Glass, though, it is easy to train a model to do it so well that no one but pixel peepers can tell the difference: after the company's algorithm is applied, the image looks like it came from a full APS-C sensor.

The theory seems sound and the early results are promising, but it will have to be verified by reviewers and camera experts.

The company has moved on from its prototypes to a third-generation device in a phone form factor, which shows how the technology would fit into a real handset. Although it won't be as cheap to manufacture as today's off-the-shelf camera modules and image processing units, it can be made just as easily. If one company can make a huge leap in camera quality, it could capture a large chunk of the market.

Attar said they have to convince a phone maker to ditch the old technology, and the only real challenge is doing it quickly. "I am not saying there is no risk," he said. "We had good jobs at big companies. We didn't leave our fancy salaries at Apple to work on some BS thing. We had a plan from the beginning."

Getting to market would take at least a year and a half to two years.

Glass's seed round was led by LDV Capital and a group of angel investors. The money is not meant to cover the cost of manufacturing, but the company is leaving the lab and will need operating cash to continue. Greg Gilley, formerly Apple's VP of cameras and photos, and Ramesh Raskar of the MIT Media Lab have joined as advisors, rounding out a team investors are likely to have a lot of confidence in.

If the Glass approach catches on, other companies will claim to have invented it in less than two years.