Thanks to new machine learning techniques, Microsoft's translation services will be able to improve translations between a large number of language pairs. Based on the Project Z-Code approach, the new models often outperform the company's previous models in blind evaluations. Z-Code is part of Microsoft's wider initiative to combine models for text, vision and audio across multiple languages to create more powerful and helpful artificial intelligence systems.

Mixture of Experts isn't a completely new technique, but it is especially useful in the context of translation. The system breaks a task down into subtasks and delegates each one to a more specialized model, called an expert. The model decides which subtask goes to which expert based on its own predictions. In effect, it is a model that includes multiple more specialized models.
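To make the delegation idea concrete, here is a minimal sketch of a mixture-of-experts forward pass. It is not Microsoft's implementation: the three toy experts, the gate weights and the function names (`route`, `moe_forward`) are all hypothetical, chosen only to show a gate predicting which expert should handle an input.

```python
import math

def softmax(scores):
    """Turn raw gate scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical experts: each one is a tiny specialized function.
EXPERTS = {
    "double": lambda x: [2 * v for v in x],
    "negate": lambda x: [-v for v in x],
    "shift": lambda x: [v + 1 for v in x],
}

# Hypothetical gate weights, one vector per expert. In a real model
# these would be learned during training.
GATE_WEIGHTS = {
    "double": [1.0, 0.0],
    "negate": [0.0, 1.0],
    "shift": [-1.0, -1.0],
}

def route(x):
    """Predict which expert should handle input x (top-1 routing)."""
    names = list(EXPERTS)
    scores = [sum(w * v for w, v in zip(GATE_WEIGHTS[n], x)) for n in names]
    probs = softmax(scores)
    best = max(range(len(names)), key=lambda i: probs[i])
    return names[best], probs[best]

def moe_forward(x):
    """Run only the expert chosen by the gate; the others stay idle."""
    name, _ = route(x)
    return name, EXPERTS[name](x)

if __name__ == "__main__":
    print(moe_forward([3.0, 0.5]))
    print(moe_forward([0.5, 2.0]))
```

The gate here picks a single expert per input. Production systems typically route per token and may combine several experts, but the principle of predicting where to send the work is the same.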

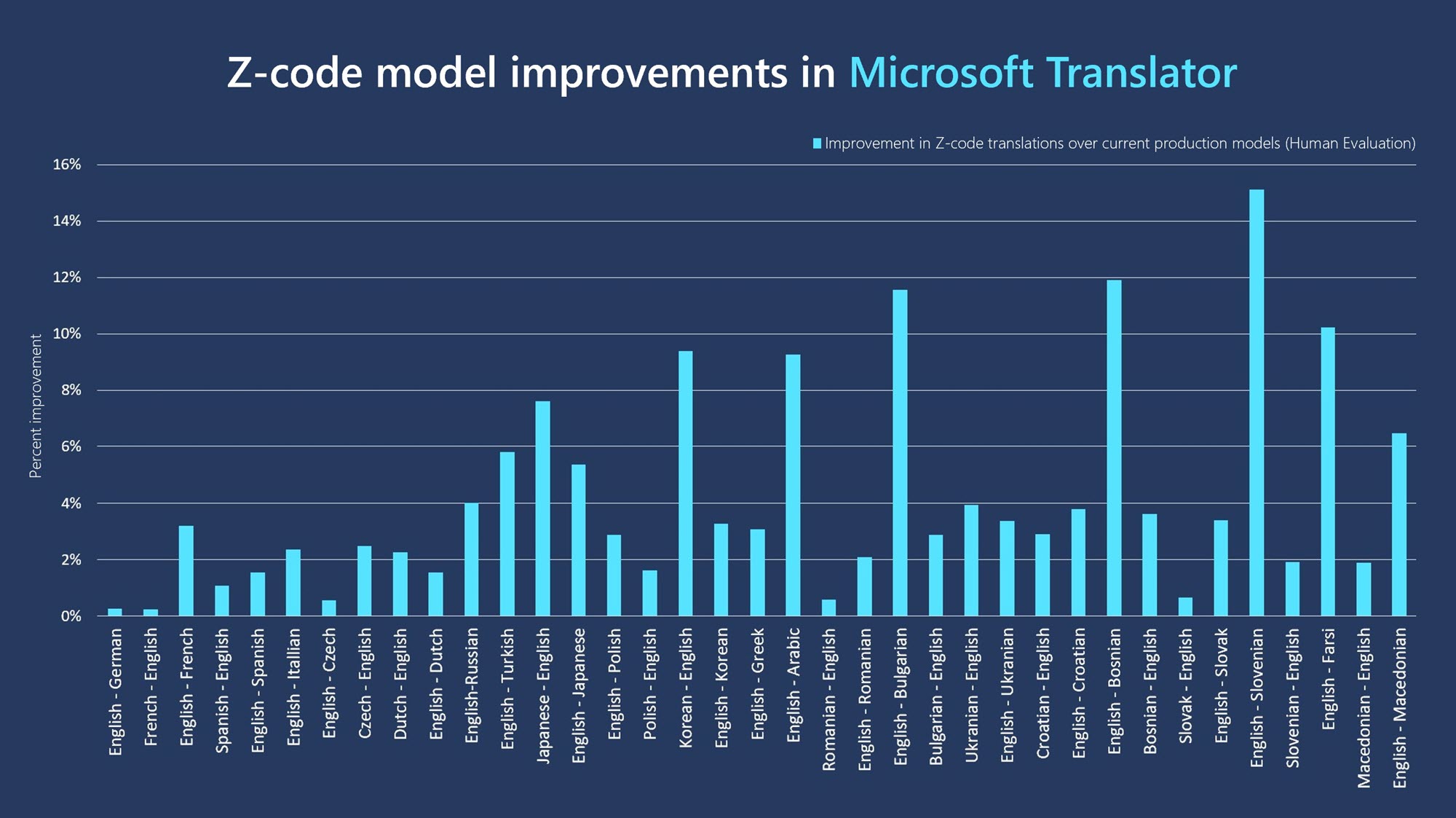

A new class of Z-Code Mixture of Experts models is helping to improve the performance of Translator. Image credit: Microsoft.

According to Microsoft, Z-Code is making strong progress because it uses both transfer learning and multitask learning to create a state-of-the-art language model that the company believes has the best combination of quality, performance and efficiency.

The result is a single system that can translate between 10 languages, eliminating the need for multiple systems. Microsoft also uses Z-Code models to improve other features of its artificial intelligence systems, but this is the first time it has used this approach for a translation service.

Large translation models are hard to bring into a production environment. The Microsoft team therefore chose a sparse approach, which activates only a small number of model parameters per task.
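A rough back-of-the-envelope sketch shows why sparsity helps. The function name and every number below are hypothetical, not figures from Microsoft: the point is only that if each input is routed to `top_k` experts, the parameters actually used are the shared ones plus those of the selected experts.

```python
def active_parameter_fraction(num_experts, params_per_expert, shared_params, top_k):
    """Fraction of all parameters touched when only top_k experts run.

    All arguments are illustrative counts, not real model sizes.
    """
    if not 1 <= top_k <= num_experts:
        raise ValueError("top_k must be between 1 and num_experts")
    total = shared_params + num_experts * params_per_expert
    active = shared_params + top_k * params_per_expert
    return active / total

if __name__ == "__main__":
    # Hypothetical layer: 8 experts of 100 parameters each,
    # plus 200 parameters shared by every input.
    for k in (1, 2, 8):
        frac = active_parameter_fraction(8, 100, 200, k)
        print(f"top_k={k}: {frac:.0%} of parameters active")
```

Under these made-up numbers, routing to one expert touches well under half of the model, while activating all eight is equivalent to a dense model of the same size.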