Academic researchers have devised a new way to take control of Amazon Echo smart speakers and force them to unlock doors, make phone calls, and make unauthorized purchases.

The attack works by using the device's own speaker to issue voice commands. As long as the speech contains the wake word followed by a permissible command, the Echo carries it out. Even when a device requires verbal confirmation before executing a sensitive command, that safeguard is trivial to bypass by adding the word "yes" about six seconds after the command. Attackers can also exploit what the researchers call the full voice vulnerability (FVV), which lets an Echo carry out self-issued commands without temporarily reducing the device volume.
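To make that logic concrete, here is a minimal Python toy model. It is a sketch under stated assumptions, not Amazon's implementation: the wake words, the command sets, and the `ToyEcho` class are all hypothetical. It captures the property the attack relies on: the device acts on any speech that starts with a wake word, and a pending confirmation is satisfied by any "yes", whether it comes from a person or from the Echo's own speaker. The roughly six-second delay before the "yes" is not modeled.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical wake words and command sets, chosen only for illustration.
WAKE_WORDS = ("alexa", "echo")
PERMISSIBLE = {"turn off the lights", "buy paper towels", "unlock the door"}
NEEDS_CONFIRMATION = {"buy paper towels", "unlock the door"}


@dataclass
class ToyEcho:
    """Toy device that cannot tell who produced the speech it hears."""
    executed: List[str] = field(default_factory=list)
    pending: Optional[str] = None  # sensitive command awaiting confirmation

    def hear(self, utterance: str) -> None:
        text = utterance.lower().strip()
        if self.pending is not None:
            # Any "yes" is accepted as confirmation, regardless of its source;
            # any other reply cancels the pending command.
            if text == "yes":
                self.executed.append(self.pending)
            self.pending = None
            return
        for wake in WAKE_WORDS:
            if text.startswith(wake + " "):
                command = text[len(wake) + 1:]
                if command in PERMISSIBLE:
                    if command in NEEDS_CONFIRMATION:
                        self.pending = command  # device asks "are you sure?"
                    else:
                        self.executed.append(command)
                return  # speech without a known command is ignored


echo = ToyEcho()
echo.hear("Alexa turn off the lights")  # runs immediately
echo.hear("Alexa buy paper towels")     # needs confirmation
echo.hear("yes")                        # any voice can confirm
print(echo.executed)  # ['turn off the lights', 'buy paper towels']
```

In this model, nothing in `hear` records where a sound came from, which is why a command played through the device's own speaker is indistinguishable from one spoken by its owner.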

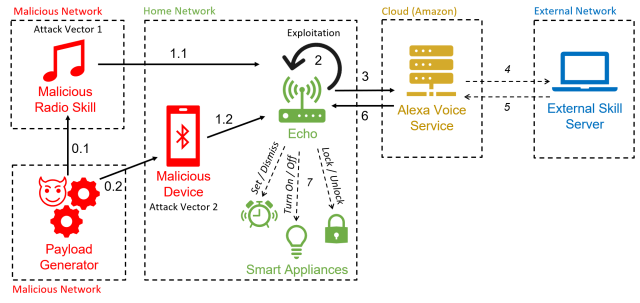

Because the hack uses Alexa functionality to force devices to issue commands to themselves, the researchers have dubbed it "AvA," short for "Alexa vs. Alexa." It requires only a few seconds of proximity to a vulnerable device while it is turned on, so an attacker can utter a voice command instructing it to pair with the attacker's Bluetooth-enabled device. As long as the attacker's device remains within radio range of the Echo, the attacker can keep issuing commands.

In a paper published two weeks ago, the researchers wrote that the attack is the first to exploit the vulnerability of self-issuing arbitrary commands on Echo devices.

A variation of the attack uses a malicious radio station to generate the self-issued commands. That variation no longer works as shown in the paper, following security patches Amazon released in response to the research. The researchers have confirmed that the attacks work against 3rd- and 4th-generation Echo Dot devices.

AvA begins when a vulnerable Echo device connects to the attacker's device over Bluetooth (or, on unpatched Echos, when it plays the malicious radio station). From then on, the attacker can use a text-to-speech app or other means to stream voice commands. A video shows AvA in action; every variation of the attack it demonstrates remains viable except the one shown between 1:40 and 2:14.

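The researchers found that AvA could force devices to carry out a range of commands, many with serious privacy or security consequences, such as controlling smart appliances, calling arbitrary phone numbers, making unauthorized purchases, and tampering with a linked calendar.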

The researchers wrote:

> With these tests, we demonstrated that AvA can be used to give arbitrary commands of any type and length, with optimal results—in particular, an attacker can control smart lights with a 93% success rate, successfully buy unwanted items on Amazon 100% of the times, and tamper [with] a linked calendar with 88% success rate. Complex commands that have to be recognized correctly in their entirety to succeed, such as calling a phone number, have an almost optimal success rate, in this case 73%. Additionally, results shown in Table 7 demonstrate the attacker can successfully set up a Voice Masquerading Attack via our Mask Attack skill without being detected, and all issued utterances can be retrieved and stored in the attacker’s database, namely 41 in our case.