The UK's biggest crisis text line gave third-party researchers access to millions of messages from children and other vulnerable users despite a promise never to do so.

The Royal Foundation of the Duke and Duchess of Cambridge invested 3 million dollars in Shout, a helpline that offers a confidential service for people struggling to cope with issues such as suicidal thoughts, self-harm, abuse and bullying.

An FAQ section on its website said that high-level data from messages was passed to trusted academic partners for research to improve the service.

Access to conversations with millions of people, including children under 13 years old, has been given to third-party researchers after the promise was deleted from the site.

The charity that runs the helpline said all users agreed to terms of service that allowed data to be shared with researchers for studies that would benefit those who use the service.

Privacy experts, data ethicists and people who use the helpline said the data sharing raised ethical concerns.

A project with Imperial College London used more than 10 million messages from conversations between February and April 2020 to create tools that use artificial intelligence to predict suicidal thoughts.

Shout says that the names and phone numbers of the people were removed before the messages were analysed.

The data used in the research included full conversations about people's personal problems.

To better understand who was using the service, part of the research aimed to obtain personal information about the texters, such as their age, gender and disability.

Cori Crider is a lawyer and co-founder of Foxglove, an advocacy group for digital rights. When you say in your FAQ that individual conversations cannot and will not ever be shared, you leave behind hundreds of thousands of conversations. Lack of trust in these systems can make people hesitant to come forward.

People using the service could not comprehend how their messages would be used at a crisis point, even if they were told it was going to be used in research, because of their vulnerable state. According to Shout, 40% of people texting the helpline are suicidal.

A woman who used the service for support with anxiety and an eating disorder said she had no idea messages could be used for research.

Maillet said she understood the need to conduct research in order to make services better.

She said using conversations from a crisis helpline raised ethical concerns even when names were removed.

You can spin it in a way that makes it sound good, but will it be used for research?

Phil Booth is the co-ordinator of medConfidential, which campaigns for the privacy of health data. These other uses are not what people would expect.

He said that the charity had told users that their individual conversations would not be shared.

Shout was set up as a legacy of Heads Together, a campaign led by the Duke and Duchess of Cambridge and Prince Harry aimed at ending stigma around mental health. The aim of the service is to provide round-the-clock mental health support to people in the UK who can text a number and receive a response from a trained volunteer.

A key goal is to use anonymised, aggregated data to generate unique insights into the mental health of the UK population.

We use these insights to enhance our services, to inform the development of new resources and products, and to report on trends of interest for the broader mental health sector.

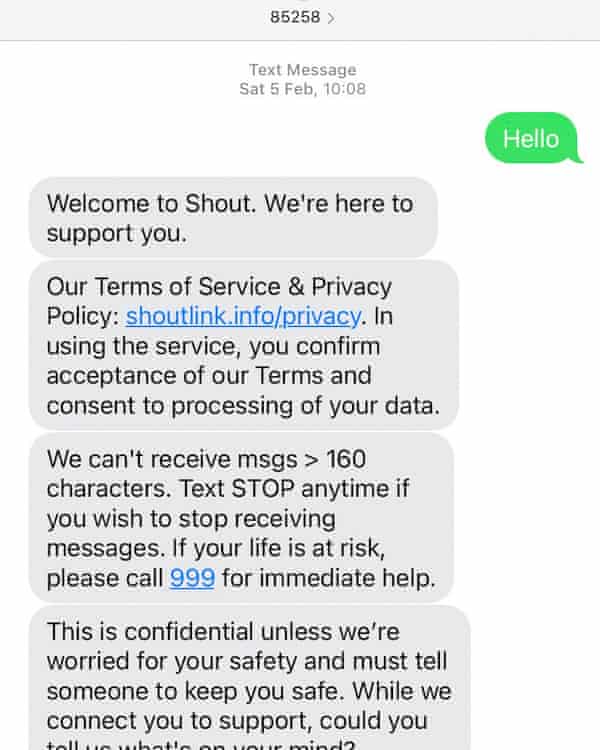

Shout tells service users that conversations are confidential unless it is worried for their safety and must tell someone to keep them safe.

Previous versions of the FAQ made a promise that has since been deleted, meaning that people who texted the service in the past are likely to have read information that was later changed.

In the FAQ section of the helpline's website, users were told that the helpline anonymised and aggregated high-level data from conversations in order to study and improve its own performance. We share some of this data with carefully selected and screened academic partners, but the data is always anonymised, individual conversations cannot and will not ever be shared.

The promise about individual conversations was removed from the FAQ section. The privacy policy was updated to say that data could be used to enable a deeper understanding of the mental health landscape in the UK, opening the door to a broader range of uses.

According to the paper, the Imperial study involved analysis of entire conversations manually and using artificial intelligence. To improve the service, it had three goals: predicting the conversation stages of messages, predicting behaviours from texters and volunteers and classifying full conversations to get demographic information.

We aim to train models to classify labels that could be used to measure conversation efficacy. Predicting a texter's risk of suicide is a key future aim.

Data was handled in a trusted research environment and the study received ethics approval from Imperial, which said the research complied with ethical review policies.

As a researcher she saw a lot of red flags with the secondary data use, and she is an expert on the effects of technology on children and young people.

Dr Christopher Burr is an ethics fellow and expert in artificial intelligence at the Alan Turing Institute. He said that not being clear with users about how sensitive conversations would be used created a culture of distrust.

He said that they should be doing more to make their users aware of what is going on.

The data-sharing partnership between an American mental health charity and a for-profit company was exposed by a news site in the US.

Shout is the UK counterpart of Crisis Text Line US, which faced intense scrutiny earlier this month over its dealings with a company that promises to help companies handle their hard customer conversations.

The UK users of the Crisis Text Line were reassured that their data had not been shared with Loris after details of the partnership came to light.

Mental Health Innovations has formed partnerships with private bodies including Hive Learning.

Hive worked with Shout to create an artificial intelligence-powered mental health programme that the firm sells to companies to help them sustain mass behaviour change. The UK's leading digital venture builder is owned by the Shout Trustee.

Mental Health Innovations said there was no data relating to any texter conversations and that its training programme was developed with learnings from its volunteer training model.

In a statement, the charity claimed that all data shared with academic researchers, including Imperial, had had identifying details such as names and phone numbers removed.

It said that it would be difficult for many children and young people to use the service if they had to seek parental consent. If they withdrew their consent, they could text their information to the hotline and have it deleted.

The data from Shout conversations was unique because it contained information about people in their own words.

She said the promise about individual conversations never being shared was in the FAQ, rather than the privacy policy, and that it had been removed from the website because it could be misinterpreted.

The UK's data protection watchdog, the Information Commissioner's Office, said it was assessing evidence relating to Shout and Mental Health Innovations.

When handling and sharing people's health data, especially children's data, organizations need to take extra care and put safeguards in place to ensure their data is not used or shared in ways they wouldn't expect. We will look at the information and make a decision.

Samaritans can be reached in the UK and Ireland by email or phone.