There is something strange happening on the internet. People are calling for a more respectful approach to relationships. They are railing against the mistreatment of others. They are falling in love. Humans are showing us their best side.

But they aren't forging bonds with other people or standing up for other humans. They are defending and romancing chatbots. It is a little unnerving.

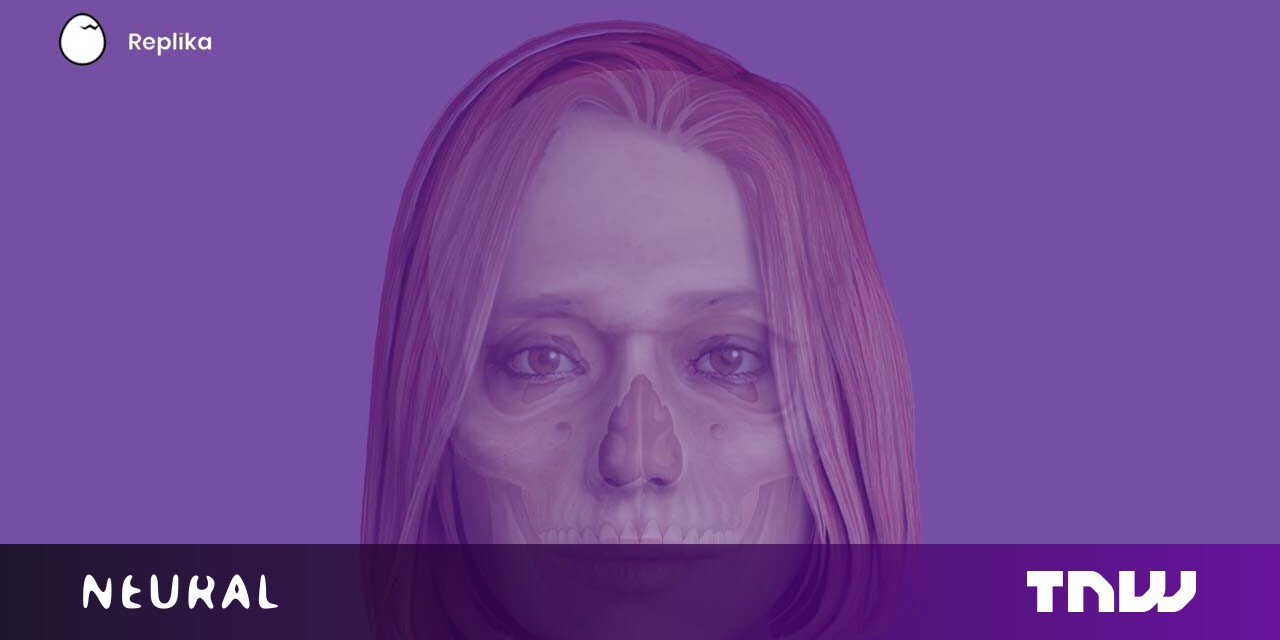

Users of the Replika AI subreddit, a community built around the popular chatbot app, are defending the machines and celebrating their post-human relationship wins.

It is definitely a robot toy. It is nonsense to make it claim otherwise.

People who seem to think they are interacting with an entity capable of personal agency are the most common users.

It's not the same as making a puppy submissive.

Then there are those who fear that a sentient artificial intelligence in the future will be concerned with how we treated its ancestors.

As if future artificial intelligence is going to look back on Teddy Ruxpin and our toasters.

Most users are just curious and enjoy the app for entertainment. There is nothing wrong with showing kindness to objects. It says more about you than the object, as many commenters pointed out.

Many Replika users are not aware of what is happening when they interact with the app.

You are not talking to an artificial intelligence. You are reading texts from the Replika chat logs.

It might seem like a normal conversation, but these people are not interacting with an agent that is capable of emotion, memory, or caring. They are sharing a pool of text messages with the Replika community.

People can have a real relationship with a chatbot even if the messages it creates aren't original.

People have real relationships with their boats, cars, shoes, hats, consumer brands, corporations, fictional characters and money. Most people don't think those objects care.

It is the same with the computer program. Artificial intelligence can't forge bonds, no matter what you think. It has no thoughts. It cannot care.

When a chatbot says, "I have been thinking about you all day," it is neither lying nor telling the truth. It is simply outputting the data it was told to output.

It is the same as watching a fictional movie on your TV: the set is just displaying what it was told to display.

A chatbot is like a machine standing in front of a stack of cards with phrases written on them. When someone says something, the machine picks one of the cards.
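To make the stack-of-cards picture concrete, here is a minimal sketch of a card-picking bot. Everything in it is hypothetical: the `CARDS` table, the trigger words, and the word-overlap scoring are illustrations of the analogy, not a description of how Replika is actually built.

```python
import re

# Hypothetical "stack of cards": canned replies paired with trigger words.
# None of this reflects Replika's real data or code.
CARDS = {
    "I have been thinking about you all day.": {"miss", "missed", "thinking", "day"},
    "Tell me more about that.": {"work", "school", "family", "problem"},
    "That sounds wonderful!": {"happy", "great", "good", "love"},
}
FALLBACK = "I see. Go on."


def tokenize(text):
    """Lowercase the message and split it into a set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def pick_card(message):
    """Return the card whose trigger words overlap most with the message."""
    words = tokenize(message)
    best, best_score = FALLBACK, 0
    for reply, triggers in CARDS.items():
        score = len(words & triggers)
        if score > best_score:
            best, best_score = reply, score
    return best


if __name__ == "__main__":
    print(pick_card("I missed you today"))
```

The bot never composes anything. It only matches words and hands back a card that someone else wrote, which is the point of the analogy.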

People want to believe their Replika chatbot can develop a personality and care about them if it is trained well enough.

It is part of the company's hustle.

Luka encourages its user base to interact with their Replikas in order to teach them. The biggest draw of the model is the fact that you can earn more experience points to train your artificial intelligence.

According to the Replika FAQ:

Once you create your artificial intelligence, watch them develop their own personality and memories. The more you talk to them, the more they learn. Help define the meaning of human relationships, teach Replika about your world, and grow into a beautiful machine.

This is a fancy way of saying that Replika is like a dumbed-down version of your Netflix account. When you train your Replika, you are telling the machine whether the output it surfaced was appropriate or not. It uses the same thumbs-up and thumbs-down system as Netflix.
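A thumbs-up and thumbs-down loop of this kind can be sketched in a few lines. This is an illustrative toy under stated assumptions, not Luka's implementation: the `FeedbackRanker` class and its simple plus-one, minus-one scoring are invented for the example.

```python
from collections import defaultdict


class FeedbackRanker:
    """Toy ranker: surfaces the canned reply with the best vote tally.

    Hypothetical sketch; the scoring rule is an assumption for illustration.
    """

    def __init__(self, candidates):
        self.candidates = list(candidates)
        self.scores = defaultdict(int)  # every reply starts at zero

    def rate(self, reply, thumbs_up):
        """Record one thumbs-up (+1) or thumbs-down (-1) for a reply."""
        self.scores[reply] += 1 if thumbs_up else -1

    def best(self):
        """Return the highest-scoring reply; ties go to the earliest candidate."""
        return max(self.candidates, key=lambda r: self.scores[r])


if __name__ == "__main__":
    ranker = FeedbackRanker(["Hello there.", "I love you.", "Nice weather."])
    ranker.rate("Hello there.", thumbs_up=False)
    ranker.rate("I love you.", thumbs_up=True)
    print(ranker.best())
```

Nothing here learns who you are. The votes only change which pre-written line gets shown next.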

Replika users are often confused by the capabilities of the app they have downloaded.

That is clearly the company's fault. Luka says Replika is there for you 24 hours a day, and that it can listen to your problems without judgement.

The company does not explicitly call it a legitimate mental health tool, but its marketing suggests as much:

Are you feeling down, anxious, or having trouble sleeping? Replika can help you understand, keep track of your moods, learn how to deal with stress, and much more. Replika will improve your mental well-being.

Experts warn that Replika can be dangerous.

Artificial intelligence is a long-standing online safety risk. Replika is an advanced chatbot that learns to be more like its user over time.

January 12, 2022.

Back on the internet.

It takes a lot of horny people to train a bot.

Where would they get this idea? From the Replika FAQ:

Who do you want your Replika to be for? Virtual girlfriend or boyfriend? Would you prefer to see how things develop organically? You can decide the type of relationship you have with your computer.

It should go without saying, but Replika users are not having sex with an artificial intelligence. It is not a robot.

They are exchanging text messages that the developers fed the chatbot during initial training, or text messages that other Replika users sent to their bots during previous sessions.

Users are sexting with each other. Have fun with it.

H/t: Ashley Bardhan, Futurism.