NASA and the European Space Agency have done a great job of sharing the experience of the James Webb Space Telescope with the public. With a very active social media presence, the team has allowed people to watch over the shoulders of engineers and scientists, as well as ask questions about the process of commissioning the new telescope.

So why weren't cameras put on the telescope to show live footage of its deployment? Wouldn't it be better to see the process firsthand than to receive it secondhand?

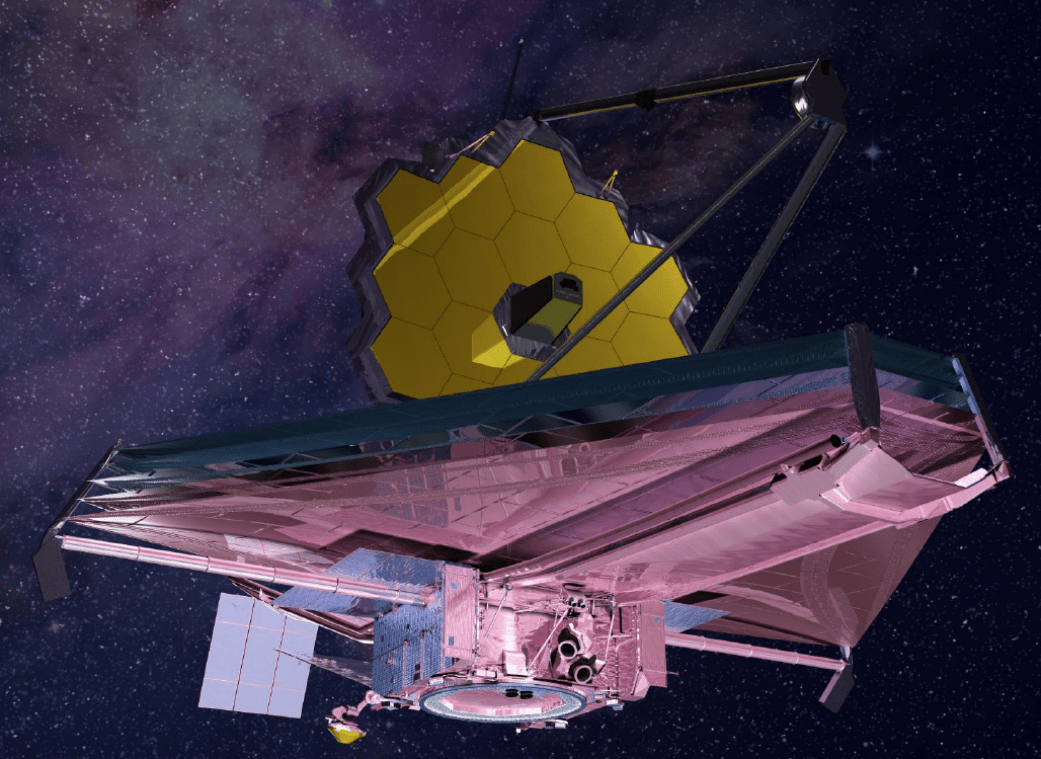

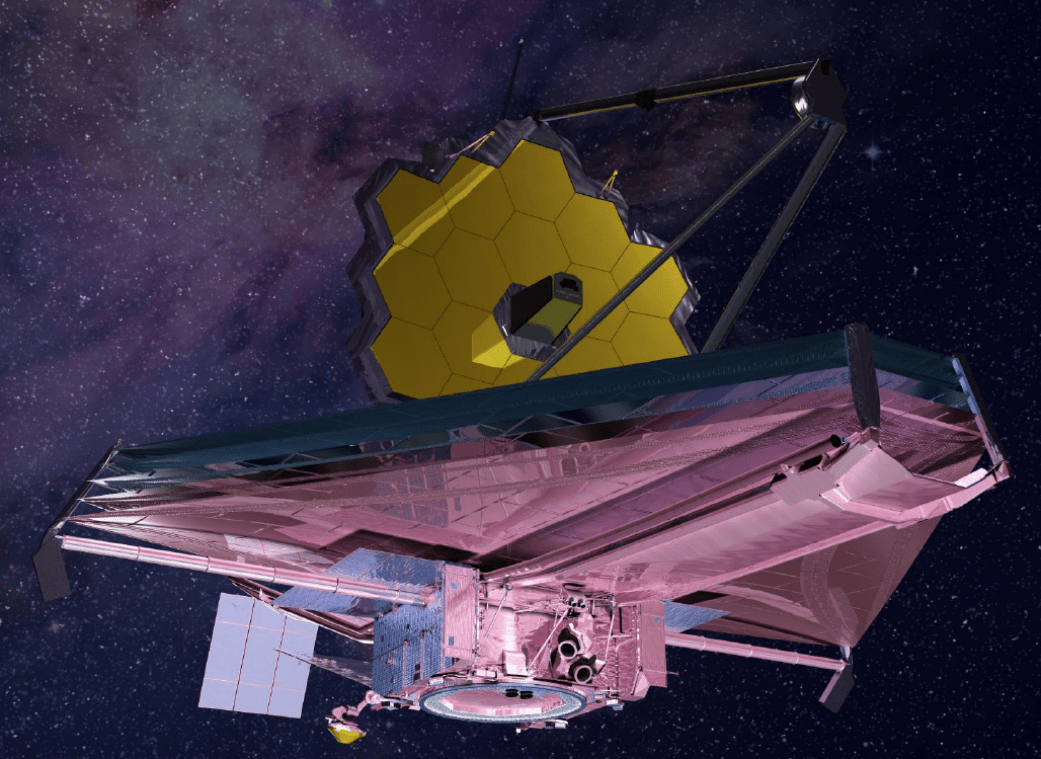

The telescope shortly after its release from the rocket stage. Credit: NASA.

After all, the Perseverance rover carried cameras that showed its descent to the Martian surface, and the Chinese space agency has deployed remote "selfie cameras" to monitor its Mars rover.

During the final tensioning of the telescope's sunshield, the Commissioning Manager for the Webb telescope said, "No one would love to see it doing its thing more than us." He explained that because of Webb's shape, multiple cameras would be needed in multiple locations. The observatory is shiny on one side and dark on the other, and cameras would not be able to see anything on the dark side. Unless things got very complicated very fast, the engineering usefulness of cameras wasn't there.

The same question kept coming up, so the NASA team wrote a blog post about it.

Adding cameras to watch the deployment of a precious spacecraft sounds like a no-brainer, wrote Paul Geithner, the deputy project technical manager, but there is more to it than meets the eye. Putting cameras on Webb is not as easy as adding a doorbell cam or a rocket cam.

One of the most obvious challenges is difficult lighting. The gold-coated mirrors looked pretty in photos taken on Earth, but in space the mirror side of the observatory is dark, while the other, sunlit side has glare and contrast issues.

Cameras would also need extra power, and they could interfere with the telescope's sensitive electronics. If engineers attached wiring harnesses to the telescope to connect the cameras, the wires would have to cross its moving parts and could cause problems. The visible-light cameras needed to film Webb might not work at temperatures close to absolute zero, and the wires themselves could cause trouble by transferring heat.

What about remote cameras on cubesats? Mark McCaughrean, Senior Advisor for Science & Exploration at the European Space Agency and a member of the JWST Science Working Group, has been sharing many details about the project on his social media accounts. He said that adding cubesats would have been a huge engineering challenge, since they were not yet a viable option for this kind of job. As he put it:

> How is that cubesat supposed to deploy, station keep, image, illuminate, relay back data, all from 1 million km without adding more hardware, constraints, contamination, and risk to JWST? It may be possible, but it's not the place to do engineering.

Mark McCaughrean wrote on January 5, 2022.

The telescope's comprehensive, built-in mechanical, thermal, and electrical sensors provided much better information on its status than cameras could.

The numbers tell us what is happening, and we can use the sensor data to create visualizations for our teams. If we had started out with the idea of having cameras on board, we could have implemented that.

But adding cameras partway through an already complicated design would have made it even more complicated.

So the last time we saw Webb was in the views from the Ariane 5 rocket as the telescope separated from it.