Doctored images are getting harder to detect, and Deepfakes are being used for a variety of purposes.

A new tool offers a simple way to spot them: looking at the light reflected in the subjects' eyes.

Computer scientists at the University at Buffalo created the system, which proved effective at detecting Deepfakes in portrait-style photos.


The tool exposes fakes by analyzing the corneas, which have a mirror-like surface that reflects light.

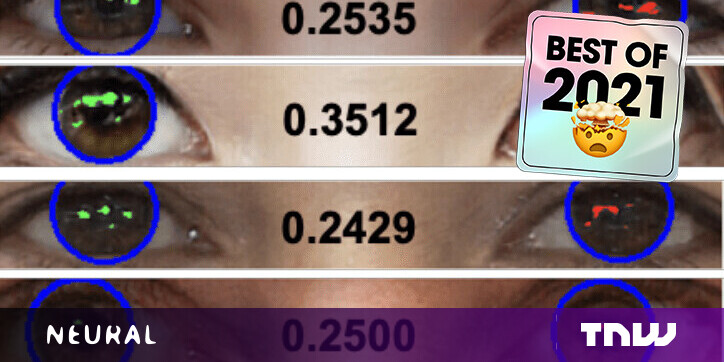

In a real photo of a face taken by a camera, the reflections in the two eyes will be similar, because both eyes are seeing the same scene. Deepfake images synthesized by generative adversarial networks (GANs) often fail to capture this resemblance.

Instead, they tend to show inconsistencies, such as reflections with different geometric shapes or in different locations.
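To make the idea of "shape and location" concrete, here is a minimal sketch of how the bright reflection in one eye could be isolated and located. It assumes each eye has already been cropped and converted to a grayscale grid of 0-255 values; the function names `highlight_mask` and `highlight_centroid` and the brightness cutoff of 200 are hypothetical choices for illustration, not details from the study.

```python
def highlight_mask(eye_crop, threshold=200):
    """Mark pixels bright enough to count as a specular highlight.

    eye_crop: list of rows of grayscale values in 0-255 (assumed input format).
    threshold: hypothetical brightness cutoff; a real detector would tune it.
    Returns a same-shaped grid of 1 (highlight) and 0 (not highlight).
    """
    return [[1 if pixel >= threshold else 0 for pixel in row] for row in eye_crop]


def highlight_centroid(mask):
    """Return the (row, col) center of the highlight pixels, or None if there are none."""
    points = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not points:
        return None
    n = len(points)
    return (sum(r for r, _ in points) / n, sum(c for _, c in points) / n)


# Usage: in a real photo, the two eyes' highlights should land in similar spots.
left = highlight_mask([[10, 250], [30, 40]])
right = highlight_mask([[20, 40], [30, 230]])
print(highlight_centroid(left), highlight_centroid(right))
```

Comparing the two centroids (or the masks themselves) is one way to quantify the "different locations" inconsistency described above.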

Credit: Lyu et al.

The Deepfake image shows differences between the reflections in the two corneas, which are likely caused by blending many photos together.

The artificial intelligence system analyzes the light reflected in each eyeball.

It then generates a similarity metric: the smaller the score, the more likely the face is a Deepfake.
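The article doesn't define the metric, so the following is only a sketch under stated assumptions. It scores two equally sized binary highlight masks (one per eye, assumed already aligned) by intersection over union, a common overlap measure, and flags the face when the score falls below a hypothetical cutoff of 0.5. The study may compute its score differently; `reflection_similarity` and `looks_fake` are illustrative names, not the researchers' code.

```python
def reflection_similarity(left_mask, right_mask):
    """Intersection over union of two same-shaped binary masks (1 = highlight pixel).

    Returns a score in [0, 1], or None when neither eye shows a highlight,
    since the method has nothing to compare in that case.
    """
    if len(left_mask) != len(right_mask) or any(
        len(a) != len(b) for a, b in zip(left_mask, right_mask)
    ):
        raise ValueError("masks must have the same shape")
    intersection = union = 0
    for row_l, row_r in zip(left_mask, right_mask):
        for a, b in zip(row_l, row_r):
            intersection += 1 if (a and b) else 0
            union += 1 if (a or b) else 0
    if union == 0:
        return None
    return intersection / union


def looks_fake(left_mask, right_mask, cutoff=0.5):
    """True if the reflections are too dissimilar, False if consistent, None if undecidable.

    cutoff is a hypothetical threshold chosen for illustration only.
    """
    score = reflection_similarity(left_mask, right_mask)
    if score is None:
        return None
    return score < cutoff


# Usage: identical reflections score 1.0; mismatched ones score lower.
print(reflection_similarity([[0, 1], [0, 1]], [[0, 1], [0, 1]]))
print(looks_fake([[1, 1], [0, 0]], [[0, 1], [0, 1]]))
```

Returning None when no highlight is found mirrors the limitation below: without a visible reflection, there is nothing to score.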

The system was highly effective at detecting Deepfakes taken from This Person Does Not Exist, a repository of images created with the StyleGAN2 architecture. However, the study authors acknowledge that it has limitations.

The tool relies on a reflected light source being visible in both eyes. If one eye isn't visible in the image, the method won't work. In addition, the inconsistencies can be fixed with manual post-processing.

It's also only effective on portrait images: if the face in the picture isn't looking at the camera, the system would likely produce false positives.

The researchers plan to investigate these issues to improve their method. While it won't detect the most sophisticated Deepfakes, it could still spot many of the cruder ones.

The study paper can be read on the arXiv pre-print server.
