Apple introduced a new Messages Communication Safety option in the iOS 15.2 beta. It is intended to make children safer online and protect them from potentially harmful images. There has been a lot of confusion about this feature, so we thought it would be useful to give an overview of how it works and clear up some misconceptions.

Communication Safety Overview

Communication Safety is designed to keep minors from being exposed to unsolicited photos that contain inappropriate content.

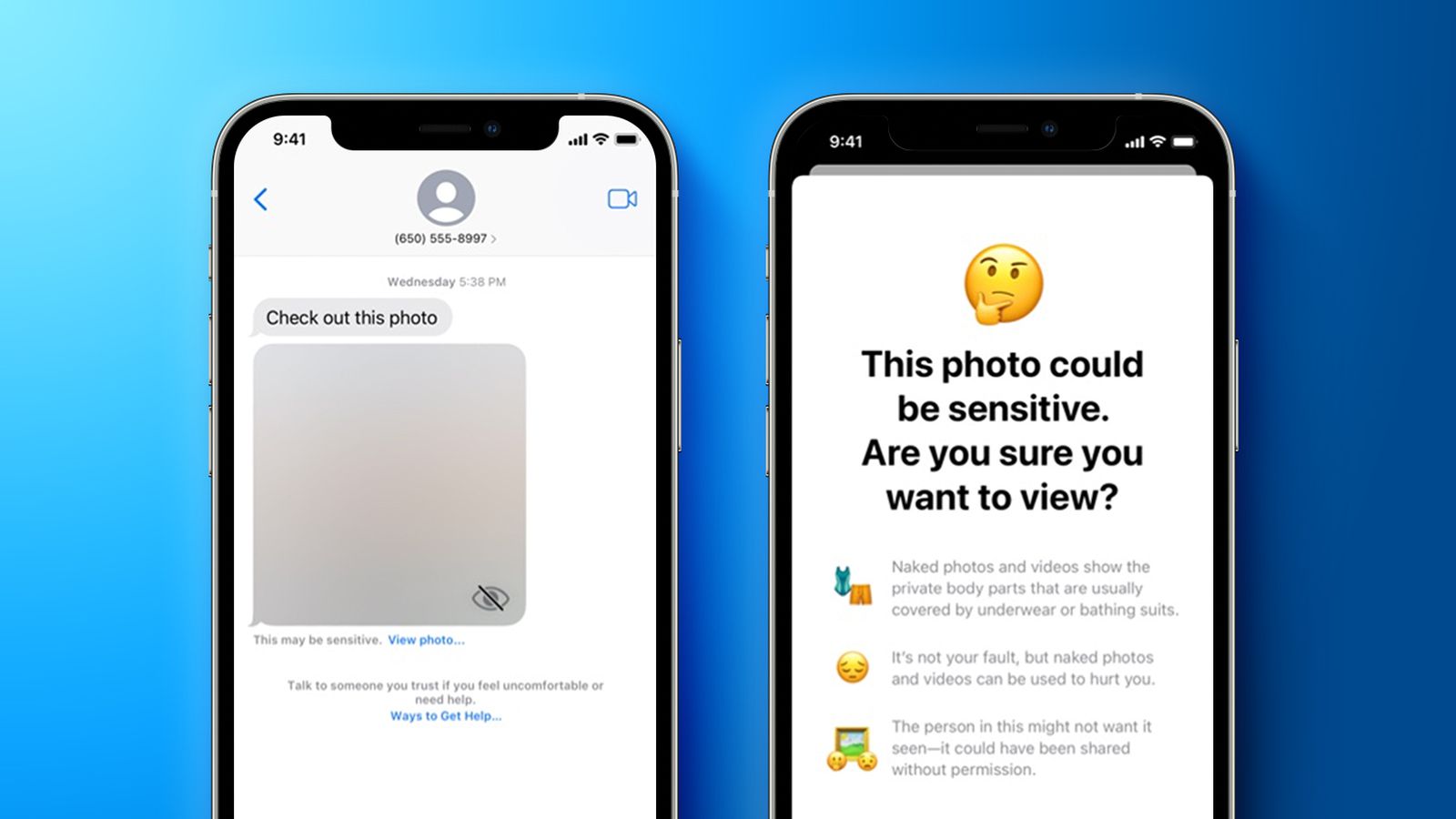

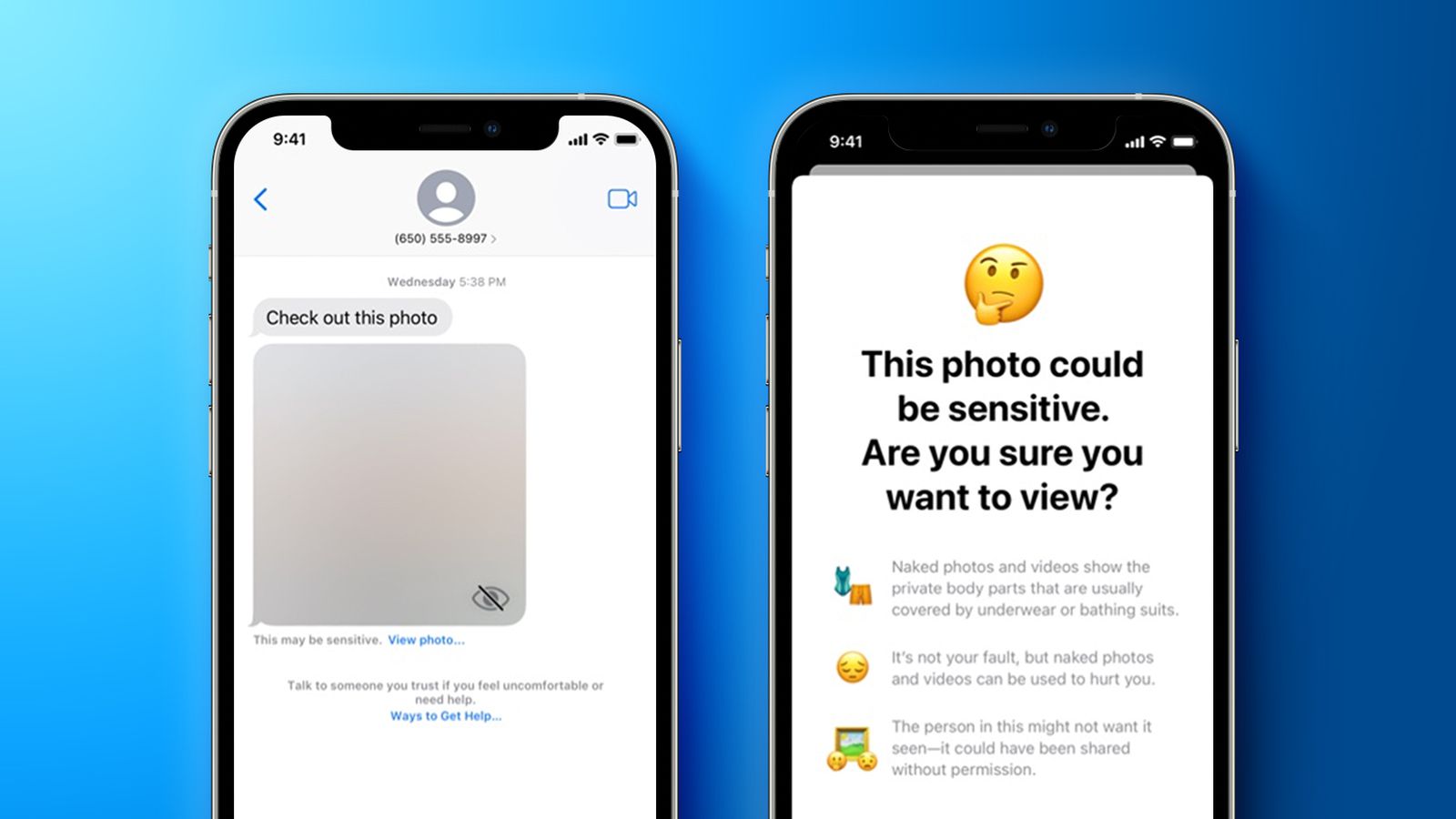

Apple explains that Communication Safety detects nudity in photos sent or received by children. If nudity is detected in a photo, the photo is blurred.

When a child taps the blurred image, the child is told that the image is sensitive and shows "body parts that are usually covered with underwear or bathing suit." The feature explains that nude photos can be "used for hurting you" and that they might have been shared without the permission of the person in them.

Apple also gives children the option to message a trusted adult in their lives for help. There are two screens for a child to tap through that explain why they might not want to view a nude image, but the child can choose to view the photo anyway. Apple is providing guidance rather than restricting access to content.

Communication Safety is entirely opt-in

Communication Safety will be an opt-in feature when iOS 15.2 is released. It will not be enabled automatically, so those who want it will have to turn it on explicitly.

Communication Safety is for Children

Communication Safety is a parental control option that is enabled through the Family Sharing feature. With Family Sharing, parents can manage the devices of their children under 18 years old.

After updating to iOS 15.2, parents can opt into Communication Safety using Family Sharing. Communication Safety is available only on devices that are set up for children under 18 years old and are part of a Family Sharing group.

Children younger than 13 years old cannot create an Apple ID themselves, so a parent must use Family Sharing to create an account for them. Children over 13 years old can create their own Apple ID, but they can still be invited into a Family Sharing group where parental oversight is available.

Apple uses the birthday entered at account creation to determine the age of the Apple ID owner.

Communication Safety cannot be enabled on adult devices

Communication Safety is a Family Sharing feature that is available only for Apple ID accounts belonging to someone under 18 years old. There is no way to activate Communication Safety on an adult's device.

Adults don't need to worry about Messages Communication Safety unless they are parents managing it for their children. A Family Sharing group that consists only of adults will not have a Communication Safety option, and no scanning of photos in Messages will be done on an adult's device.

Messages remain encrypted

Communication Safety does NOT compromise the encryption used in the Messages app on iOS devices. Messages remain fully encrypted, and no Messages content is sent to another person or to Apple.

Apple does not have access to the Messages app on children's devices, and Apple is not notified if Communication Safety is enabled or used.

Everything is done on-device and nothing leaves the iPhone

Images sent and received through Messages are scanned for nudity using Apple's machine-learning and AI technology. The scanning is performed entirely on the device, and no content from Messages is sent to Apple's servers or anywhere else.

This technology is very similar to what the Photos app uses to identify pets, people, and food. All of that identification is also done on the device.

When Apple first announced Communication Safety in August, it included a feature that alerted parents if their children chose to view a nude photograph after being warned. This feature has been removed.

If a child is warned about a nude photograph and views it anyway, parents will not be notified. The child has full control over the situation. Apple removed the notification feature following criticism from advocacy groups, who feared it could pose a problem in cases of parental abuse.

Communication Safety is not Apple's Anti-CSAM Measure

Apple first announced Communication Safety in August 2021. It was part of a series of Child Safety features, which also included an anti-CSAM initiative.

Apple's anti-CSAM plan, which Apple said would allow it to detect Child Sexual Abuse Material in iCloud, has not been implemented. It is completely separate from Communication Safety. Apple made a mistake by introducing these features together, as they have nothing in common except that they both fall under the Child Safety umbrella.

Apple's anti-CSAM measures have been met with much criticism. Under the plan, photos uploaded to iCloud would be scanned against a list of known child sexual abuse material. Apple users are not happy about the idea of photo scanning, and there are concerns that the technology Apple uses to scan photos and match them against known CSAM could be extended to other types of material.

Apple has delayed its anti-CSAM plans in response to the criticism and is making changes to how they will be implemented. iOS currently does not have any anti-CSAM functionality.

Communication Safety is included in iOS 15.2, which is currently available in beta. Developers and members of Apple's beta-testing program can download the beta. iOS 15.2 has not yet been released to the public.

Apple will provide additional information on Communication Safety in new documentation when iOS 15.2 is released.