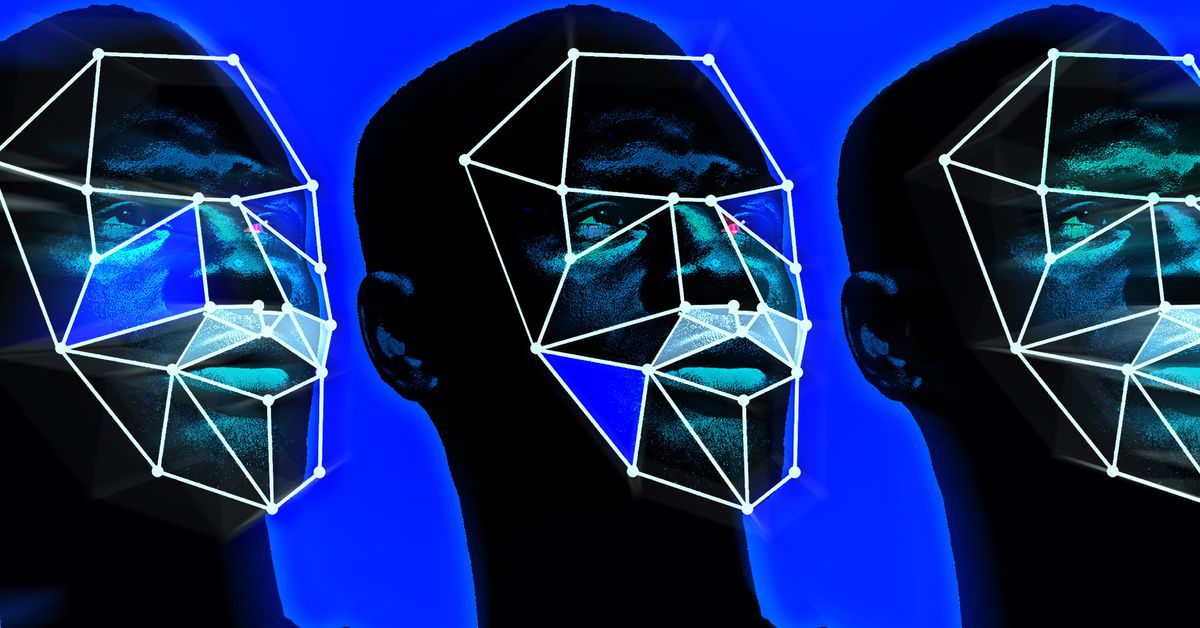

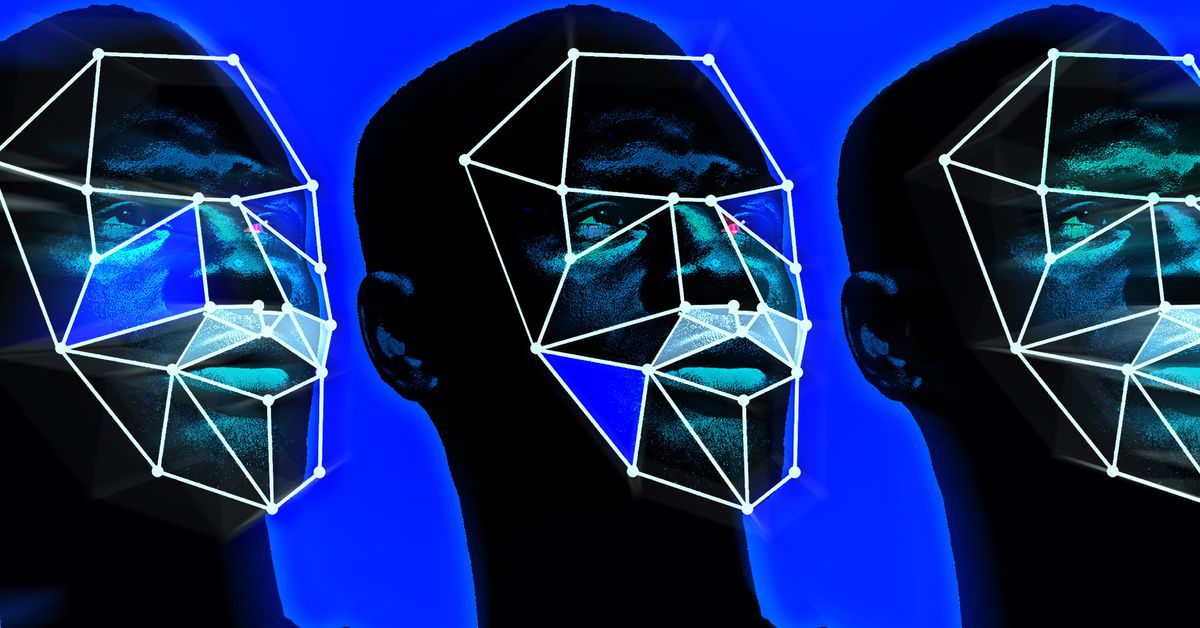

Clearview AI, a controversial facial recognition company, has been ordered by Australia's national privacy regulator to destroy all facial templates and images belonging to Australians.

Clearview claims it has scanned 10 billion photos of people on social media to help identify them in other photos. The company sells its technology to law enforcement agencies. The Australian Federal Police (AFP) tested it between October 2019 and March 2020.

The Australian privacy regulator, the Office of the Australian Information Commissioner (OAIC), has now concluded that the company violated citizens' privacy. Angelene Falk, the OAIC privacy commissioner, stated that the covert collection of such sensitive information was unreasonably intrusive. She noted that images of victims and children can be searched in Clearview AI's database.

Australians don't expect their facial photos to be taken without their consent when they use social media and professional networking sites.

Falk said that Australians do not expect their facial photos to be collected by commercial entities to create biometric templates, which can then be used for entirely unrelated identification purposes. She added that the indiscriminate collection of facial images may leave all Australians feeling that they are under surveillance and could negatively affect their personal freedoms.

Clearview's practices were investigated by the OAIC in collaboration with the UK's Information Commissioner's Office (ICO). The ICO has not yet made a decision on the legality of Clearview's work in the UK. According to the agency, it is evaluating its next steps and any regulatory action that may be required under UK data protection laws.

Clearview intends to appeal the decision, according to The Guardian. Mark Love, a lawyer at BAL Lawyers representing Clearview, told The Guardian that Clearview AI operates legally under the laws of its place of business. He said that the commissioner's decision about Clearview AI's operations was incorrect and that the commissioner also lacks jurisdiction.

Clearview argues that the images it collected were publicly available, so there was no privacy breach. It also argues that the images were published in the US, so Australian law does not apply.

Global discontent is rising over the widespread use of facial recognition systems, which threaten anonymity in public places. Yesterday, Meta, the parent company of Facebook, announced that it would shut down Facebook's facial recognition feature and delete the facial templates it had created for the system. The technology had been found to have violated privacy laws in Illinois.