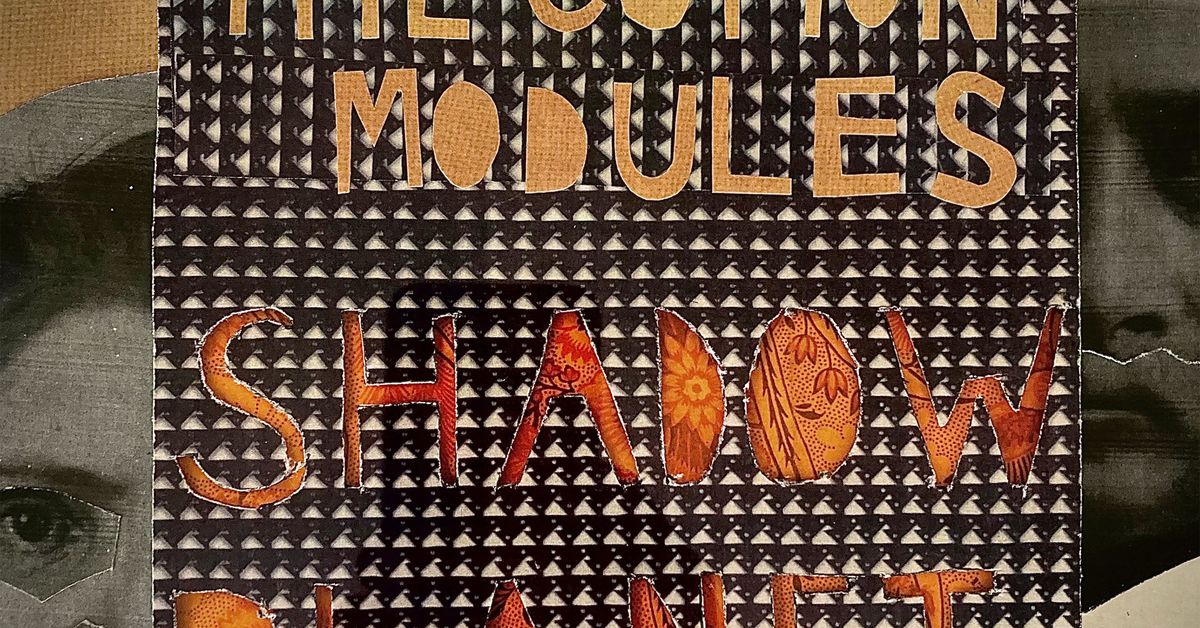

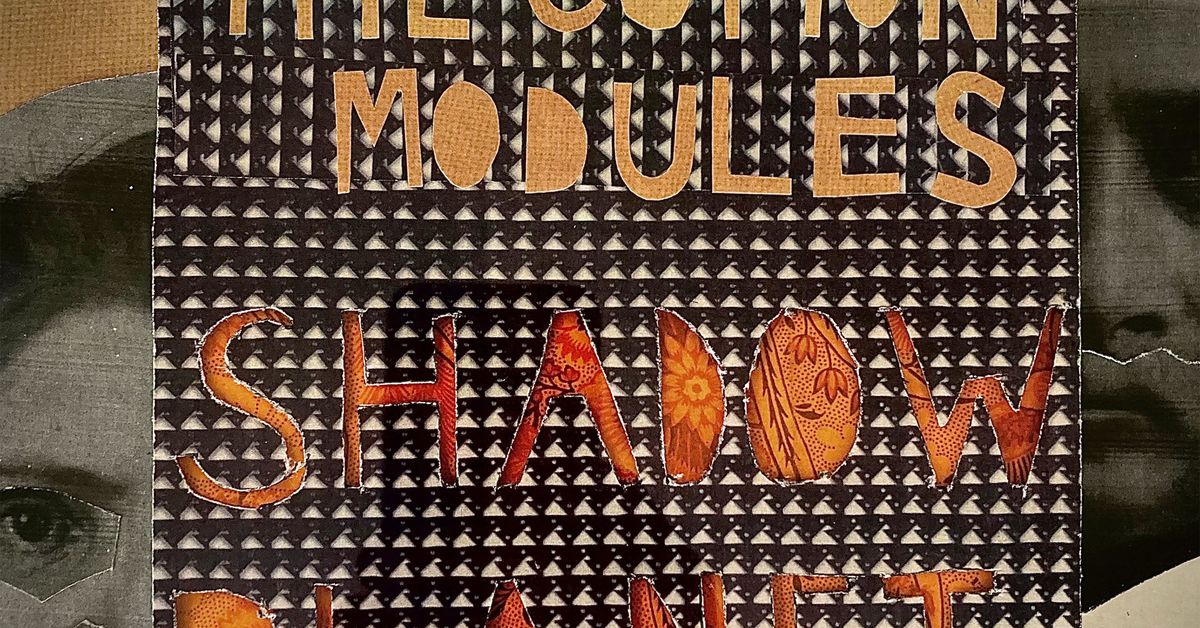

The fear is gone and the fun can now begin. This is how I think about creative endeavors that involve artificial intelligence. We have moved beyond the hyperbolic claims that AI renders human art obsolete and can now take advantage of all the potential this technology offers. Shadow Planet, a new album created as a collaboration between two humans and an AI, shows just how much fun can be had.

Shadow Planet was created by Robin Sloan, Jesse Solomon Clark and Jukebox, a machine-learning music program from OpenAI. Sloan and Clark had an informal conversation on Instagram about starting a band, The Cotton Modules, and began to exchange tapes of music. Clark, a seasoned composer, sent Sloan seeds of songs. Sloan then fed them into Jukebox, which is trained on 1.2 million songs and attempts to autocomplete any audio it hears. Steered by Sloan, the program built upon Clark's ideas. Sloan then returned the results to Clark, who continued to develop them.

OpenAI's Jukebox model draws on 1.2 million songs as the basis for creating its own music.

Shadow Planet is the result of this three-way exchange. It is an atmospheric album in which snippets of folk songs and electronic hooks emerge like moss-covered logs from a fuzzy bog of ambient loops and disintegrating samples. The album stands on its own: it is a musical pocket universe.

Sloan explained that Shadow Planet's sound is in part due to the limitations of Jukebox, which only outputs mono audio at 44.1 kHz. "This type of AI model," he said, "is absolutely an instrument that you should learn to play. It's basically a tuba. A very unusual and powerful tuba."

This is what makes AI art so fascinating: the emergent creativity that arises when humans and machines respond to each other's limitations and strengths. Consider how the evolution from the harpsichord to the piano influenced musical styles. The ability to play loudly or softly, rather than with the fixed dynamics of the harpsichord, led to new musical genres. Something similar is happening now with an entire range of AI models, which, I believe, are shaping creative output.

Below is my interview with Sloan. Read on to see why working with machine learning felt to him like wandering through a labyrinth.

This interview was lightly edited to improve clarity.

Robin, thank you for taking the time to talk to me about this album. Please tell me about the material Jesse sent you to begin this collaboration. Did it contain original songs?

Yes. Yes! Jesse is a composer for commercials and films. He also wrote the generative soundtrack for the visitor center at the Amazon Spheres in Seattle. He is used to sitting down and creating a variety of musical options. Each tape I received had about a dozen songlets, some only 20 to 30 seconds long, others a few minutes, all varied and separated by some silence. My first task was to listen to each tape, pick the best and then copy it to my computer.

Then you fed them into an AI system. Could you please tell me more about this program? What is it? How does it work?

I used OpenAI's Jukebox model. It was trained on approximately 1.2 million songs, about 600,000 of them in English. It operates on raw audio samples, which is a big part of the appeal to me. I find the MIDI-centric AI systems too polite. They respect the grid too much! Sample-based systems, which I have used in various forms, including to create music for my last novel, are crunchier and more volatile. I prefer them.

I used my own code to sample the Jukebox model. The technique described in OpenAI's publication amounts to "Hey Jukebox, play me a song like The Beatles." But I wanted to be able to experiment with it. My sampling code lets me specify many artists and genres and then interpolate between them, even if they don't have much in common.

It was slow and frustrating, but it was true

That's just the setup. The sampling itself is an interactive process. The model would start with a seed taken from one of Jesse's tapes, which would give it a direction and a vibe. To the model, I would say: I'd like something that mixes genres X and Y, sort of like artists A and B. But it also has to be consistent with this introduction.

I would start with Jesse's phrase, run it through my sampling process, and then send it back. He'd add layers or extend it, and send it back. Then I'd send it back through the sampling process, to the point where it became hard to tell what the human/AI breakdown was.

One thing is clear: the AI will generate anything that sounds like a human voice, whether it is enunciating words clearly or just oohing and ahhing.
