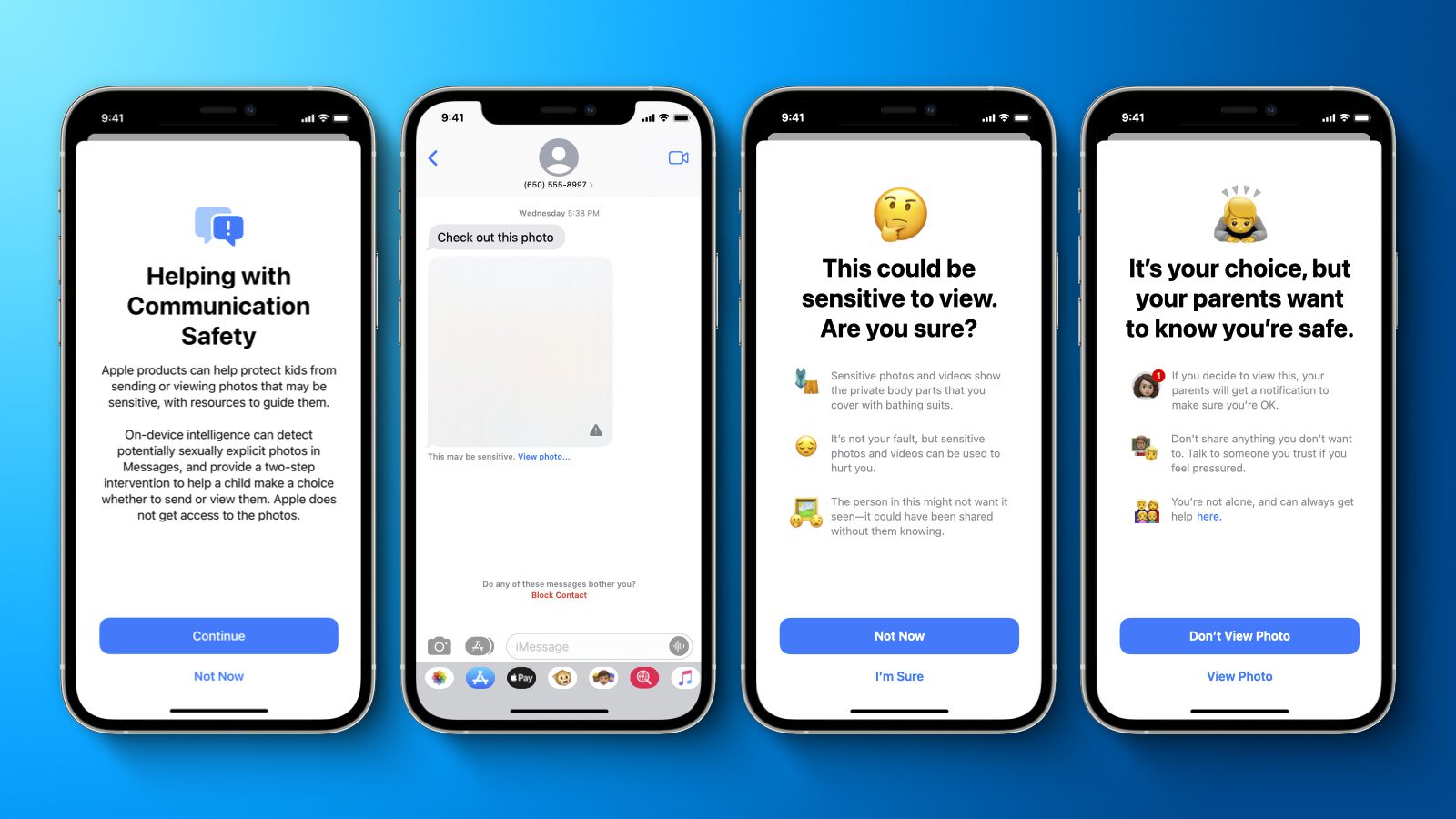

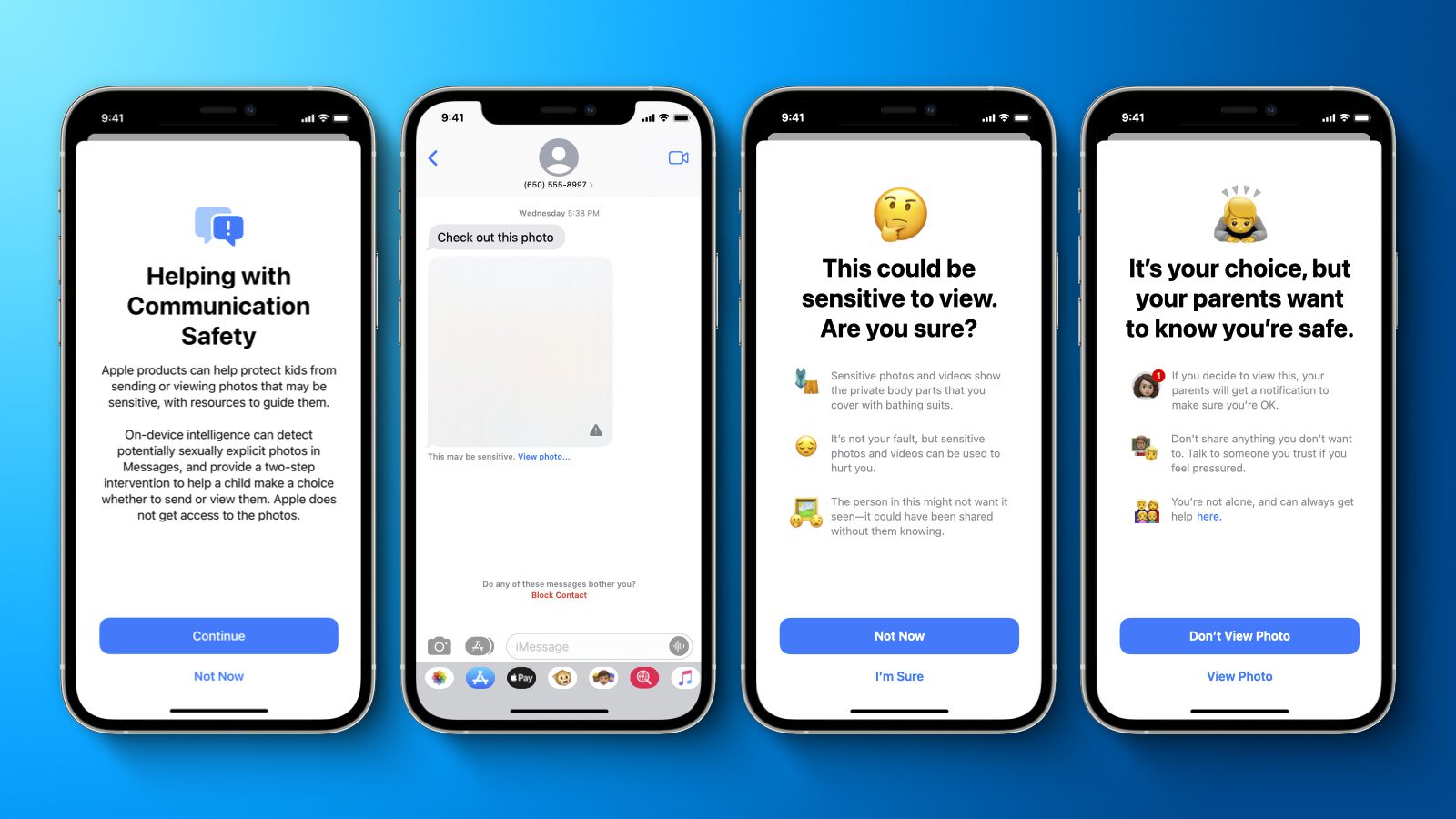

Apple showed off new child safety features last week that will come to the iPhone, iPad, and Mac with software updates later this year. Apple stated that the features will be available only in the United States at launch.

Here is a recap of our previous coverage of Apple's child safety features.

The first is an optional Communication Safety feature that can be enabled in the Messages app on iPhone, iPad, and Mac. It will warn children and their parents when the child receives or sends sexually explicit photos. Apple stated that the Messages app will use on-device machine learning to analyze image attachments. If a photo is found to contain sexually explicit material, the app will automatically blur the image and warn the child.

Apple will also be able to detect Child Sexual Abuse Material (CSAM) images stored in iCloud Photos. This will allow Apple to report these instances to the National Center for Missing and Exploited Children, a non-profit organization that works in collaboration with U.S. law enforcement agencies. Apple confirmed today that this process will apply only to photos uploaded to iCloud Photos, not to videos.

Apple will also expand guidance in Siri and Spotlight Search across devices by offering additional resources to help children and parents stay safe online and get help in unsafe situations. Siri will direct users to the right resources to help them file a report on child exploitation or CSAM.

Apple's announcement of the plans on Thursday has drawn sharp criticism. NSA whistleblower Edward Snowden claimed that Apple is "rolling out mass surveillance."

The Electronic Frontier Foundation (EFF), a non-profit organization, claims that the new child safety features will create a "backdoor" into Apple's platforms. "All it would require to broaden the narrow backdoor Apple is building is an extension of the machine learning parameters to look for additional content, or a tweak to the configuration flags to scan not only children's but everyone's accounts," wrote the EFF's India McKinney. She added that this is not a slippery slope but a fully developed system that needs only external pressure to make any changes.

More than 7,000 people have signed an open letter condemning Apple's "privacy-invasive content scanning technology" and calling on the company to abandon its child safety features.

Apple has not yet responded to the negative feedback, but the company confirmed to us today that it has not changed the timing of the new child safety features, which are part of the iOS 15, iPadOS 15, and watchOS 8 updates. Because the features are not expected to launch for several weeks or months, the plans could still change.

Apple sticking with its plans will please many advocates, including Julie Cordua, CEO of Thorn, an international anti-human trafficking organization. Cordua stated that Apple's commitment to deploying technology solutions that balance privacy with digital safety for children makes it possible to bring justice to survivors whose most traumatizing moments have been disseminated online.

Stephen Balkam, CEO of the Family Online Safety Institute, said, "We support Apple's continued evolution in child online safety," adding, "Given the difficulties parents face when protecting their children online, it is vital that tech companies constantly improve their safety tools in order to address new risks and actual harms."