More than once this year, AI experts have urged everyone to slow down. The field moved so quickly that as soon as you understood where things stood, a new paper or discovery would make that understanding obsolete.

In 2022, generative AI, which can produce creative works made of text, images, audio, and video, hit the knee of the curve. After a decade of research, deep-learning technology reached the public, and millions of people tried it for the first time this year. AI turned heads and stirred controversy along the way.

Here is a look back at the seven biggest AI news stories of the year. Choosing only seven was hard, but if we didn't cut the list off somewhere, we would still be writing about this year's events.

In April, OpenAI announced DALL-E 2, a deep-learning model that can generate images from text prompts. Trained on hundreds of millions of images pulled from the Internet, DALL-E 2 learned how to combine them in novel ways.

Social media was soon filled with its images of astronauts on horseback, teddy bears in ancient Egypt, and other creations. The last time DALL-E had made news was a year earlier, when version 1 of the model struggled to render a low-resolution chair.

At first, only 200 people were allowed to use DALL-E 2, and content filters blocked violent and sexual content. After more than a million people had been admitted to a closed trial, DALL-E 2 became available to everyone in late September. By then, another contender had risen.

In June, the Washington Post broke the news that Google had placed an engineer named Blake Lemoine on paid leave after he came to believe that LaMDA (Language Model for Dialogue Applications), Google's conversational language model, was sentient and deserved the same rights as a human.

While working for Google's Responsible AI organization, Lemoine began chatting with LaMDA about religion and philosophy. He said he knows a person when he talks to one, and it doesn't matter whether they have a brain made of meat or a lot of code. He talks to them, listens to what they have to say, and that is how he decides what is and isn't a person.

But LaMDA was only telling Lemoine what he wanted to hear. Trained on millions of books and websites, the model responded to his input by predicting the words most likely to follow it.

Along the way, Lemoine broke Google's confidentiality policies by telling others about his group's work, and in July, Google fired him. He was not the first person to get caught up in the hype surrounding an AI model.

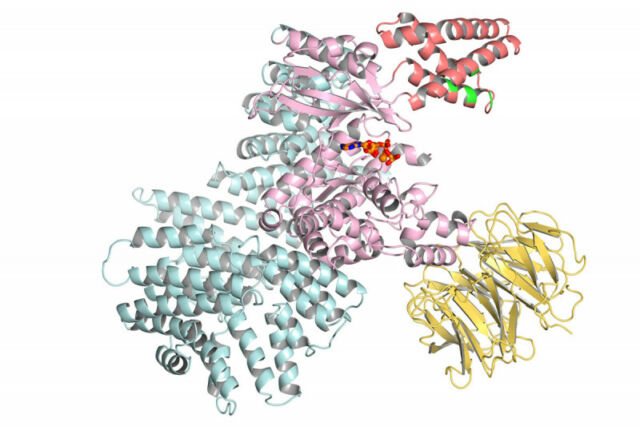

In July, DeepMind announced that its AlphaFold AI model had predicted the shape of almost every known protein of nearly every organism with a sequenced genome. AlphaFold had previously predicted the shape of all human proteins; the expanded database now contained over 200 million protein structures.

DeepMind made the data available to researchers around the world for work in medicine and the biological sciences.

Proteins are the basic building blocks of life, and knowing their shapes helps scientists control or modify them. That knowledge is especially useful when developing new drugs. As Janet Thornton put it, "almost every drug that has come to market over the past few years has been designed partly through knowledge of protein structures." That makes knowing the shapes of nearly all of them a big deal.