3D model generators could be the next breakthrough in artificial intelligence. OpenAI's Point-E is a machine learning system that creates a 3D object from a text prompt. According to a paper published alongside the code base, Point-E can produce 3D models in under two minutes.

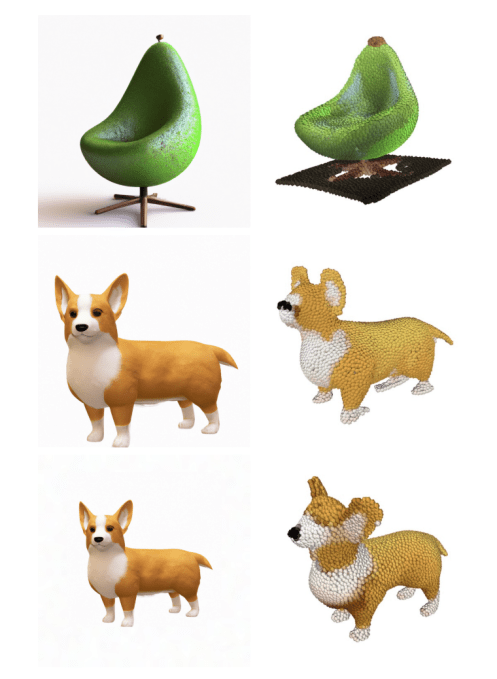

Point-E doesn't create 3D objects in the traditional sense. Instead, it generates point clouds: discrete sets of data points in space that together represent a 3D shape, hence the "Point" in the name. The "E" is short for efficiency, because the system is supposedly quicker than previous 3D object generation approaches. A key limitation of Point-E is that point clouds don't capture an object's fine-grained shape or texture.
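To make the idea of a colored point cloud concrete, here is a minimal sketch that represents one as a plain list of positions with RGB colors and fills it with points scattered on a sphere. The `Point` class and `sample_sphere_cloud` function are hypothetical illustrations, not Point-E's actual data format or code; the sketch only shows why a point cloud carries no surface: nothing connects one point to another.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Point:
    """One sample in a colored point cloud: a 3D position plus an RGB color."""
    x: float
    y: float
    z: float
    r: float  # color channels, nominally in [0, 1]
    g: float
    b: float


def sample_sphere_cloud(n, radius=1.0, seed=0):
    """Return n colored points scattered on the surface of a sphere.

    The result is just an unordered list of points. There are no edges or
    faces linking them, which is why fine surface detail is not captured.
    """
    rng = random.Random(seed)
    cloud = []
    for _ in range(n):
        # Normalizing a Gaussian vector gives a uniformly random direction.
        dx, dy, dz = rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1)
        norm = math.sqrt(dx * dx + dy * dy + dz * dz) or 1.0
        x, y, z = (radius * c / norm for c in (dx, dy, dz))
        # Color each point by its height so the shape is easy to see when plotted.
        t = (z / radius + 1) / 2
        cloud.append(Point(x, y, z, r=t, g=0.2, b=1 - t))
    return cloud


cloud = sample_sphere_cloud(1024)
print(len(cloud), cloud[0])
```

The point count and sphere are arbitrary choices for illustration; a generative system would instead predict the positions and colors of its points.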

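To work around point clouds' lack of fine-grained detail, the Point-E team trained an additional system to convert Point-E's point clouds into meshes, the collections of vertices, edges, and faces commonly used to define objects in 3D modeling and design. The researchers note in the paper, however, that this conversion model can sometimes miss certain parts of objects.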
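The difference between a point cloud and a mesh can be sketched in a few lines. In this illustrative example (not Point-E's conversion model, which the paper describes as a separately trained system), a mesh is a list of shared vertices plus triangular faces that index into it. Because the faces define an actual surface, a quantity such as surface area is well defined, which it is not for a bare set of points. The tetrahedron and the helper names are hypothetical choices for the example.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    """Component-wise difference a - b."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product; its length equals twice the area of the triangle spanned by a and b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def surface_area(vertices: List[Vec3], faces: List[Tuple[int, int, int]]) -> float:
    """Sum the areas of all triangular faces; each face holds three vertex indices."""
    total = 0.0
    for i, j, k in faces:
        n = cross(sub(vertices[j], vertices[i]), sub(vertices[k], vertices[i]))
        total += 0.5 * math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    return total


# A right-angled tetrahedron: four shared vertices connected by four triangular faces.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
print(surface_area(vertices, faces))
```

Here three faces are right triangles of area 0.5 and the slanted face is an equilateral triangle of area sqrt(3)/2, so the total is 1.5 + sqrt(3)/2.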

Image credit: OpenAI.

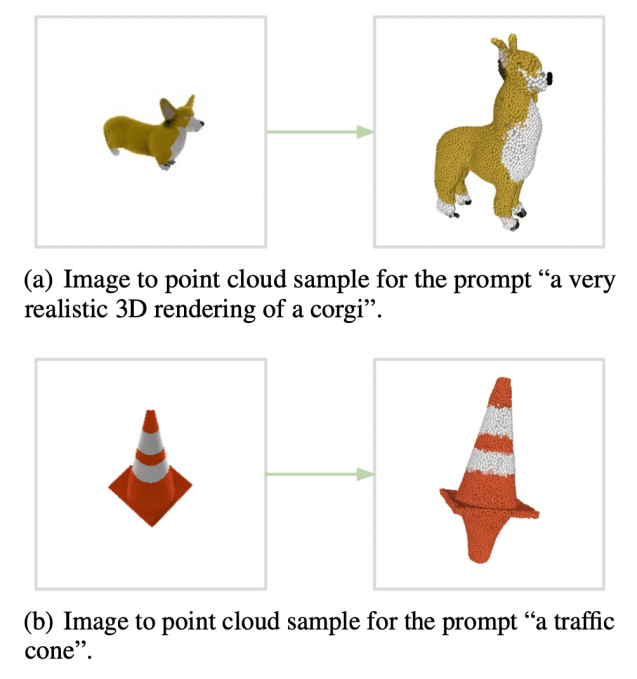

Point-E consists of two models: a text-to-image model and an image-to-3D model. The text-to-image model was trained on labeled images to learn the associations between words and visual concepts. The image-to-3D model was trained on a set of images paired with 3D objects so that it learned to translate between the two.

Given a text prompt, Point-E's text-to-image model generates a synthetic rendered object, which is then fed to the image-to-3D model to produce a point cloud.
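The two-stage flow described above (text prompt, then a synthetic rendered image, then a point cloud) can be sketched as a simple composition of two functions. This is a hypothetical illustration, not the Point-E code base's API: `TwoStagePipeline`, `toy_text_to_image`, and `toy_image_to_3d` are invented stand-ins, and real stages would be trained neural networks rather than the toy functions below.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical types: a rendered view is a grid of RGB pixels,
# and a point cloud is a list of (x, y, z, r, g, b) tuples.
Image = List[List[Tuple[float, float, float]]]
PointCloud = List[Tuple[float, float, float, float, float, float]]


@dataclass
class TwoStagePipeline:
    """Chains a text-to-image stage into an image-to-3D stage."""
    text_to_image: Callable[[str], Image]
    image_to_3d: Callable[[Image], PointCloud]

    def generate(self, prompt: str) -> PointCloud:
        # Stage 1: turn the prompt into a synthetic rendered view of the object.
        rendered = self.text_to_image(prompt)
        # Stage 2: lift that single rendered view into a colored point cloud.
        return self.image_to_3d(rendered)


def toy_text_to_image(prompt: str) -> Image:
    """Stand-in stage: a 4x4 'render' whose red channel depends on the prompt."""
    red = 1.0 if "red" in prompt.lower() else 0.5
    return [[(red, 0.0, 0.0) for _ in range(4)] for _ in range(4)]


def toy_image_to_3d(image: Image) -> PointCloud:
    """Stand-in stage: one point per pixel on a flat plane, keeping the pixel color."""
    cloud = []
    for row_idx, row in enumerate(image):
        for col_idx, (r, g, b) in enumerate(row):
            cloud.append((float(col_idx), float(row_idx), 0.0, r, g, b))
    return cloud


pipeline = TwoStagePipeline(toy_text_to_image, toy_image_to_3d)
cloud = pipeline.generate("a red cube")
print(len(cloud), cloud[0])
```

Because the second stage only ever sees the image, never the prompt, any detail it misreads in the rendered view carries straight into the final shape, which is one way a result can end up not matching the text.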

After being trained on a dataset of millions of 3D objects, Point-E could produce colored point clouds. The system isn't perfect: its image-to-3D model sometimes fails to understand the image produced by the text-to-image model, resulting in a shape that doesn't match the text prompt. Still, according to the OpenAI team, it is orders of magnitude faster than the previous state of the art.

Point-E point clouds converted into meshes. Image credit: OpenAI.

The researchers wrote in the paper that their method produces samples in a small fraction of the time required by previous approaches, which could make it more practical for certain applications or allow for the discovery of higher-quality 3D objects.

As for applications, Point-E's point clouds could be used to fabricate real-world objects, for example through 3D printing. Once the system is a little more polished, it could also find its way into game and animation development.

OpenAI isn't the first company to jump into 3D object generation. Earlier this year, Google released DreamFusion, an expanded version of its Dream Fields system. DreamFusion can generate 3D representations of objects without 3D training data.

While all eyes are on 2D art generators, model-synthesizing AI could be the next disruptor. 3D models are used in many fields: engineers use them to design new devices, vehicles, and structures, while architectural firms use them to demo proposed buildings.

Examples of Point-E failures. Image credit: OpenAI.

Crafting a 3D model by hand usually takes several hours, so if the kinks are eventually worked out, Point-E could turn a decent profit for OpenAI.

That raises the question of what intellectual property disputes might arise in the future. There is a large market for 3D models, with several online marketplaces that let artists sell their work. If Point-E catches on and its models make their way onto those marketplaces, model artists might protest, pointing to evidence that modern generative AI borrows heavily from its training data. Like DALL-E 2, Point-E doesn't credit any of the artists who may have influenced its output.

OpenAI appears to be leaving that issue for another day: the Point-E paper makes no mention of copyrighted works.

The researchers do expect Point-E to suffer from other problems, such as biases inherited from its training data and a lack of safeguards around models that could be used to create dangerous objects. They hope Point-E will inspire further work in the field of text-to-3D synthesis.