Riffusion takes AI-generated music to a new level with a clever, weird approach: it produces strange and compelling music using not audio but images of audio.

It sounds strange, and it is strange. But if it works, it works. And it does work, kind of.

Over the last year, diffusion, a machine learning technique for generating images, has made a huge difference in the world of artificial intelligence. Stable Diffusion and DALL-E 2 are two prominent models that work this way, gradually replacing visual noise with what the AI thinks a prompt should look like.
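To make "gradually replacing visual noise" a little more concrete, here is a toy sketch of the reverse (denoising) loop, using a deterministic DDIM-style update. It is not how Stable Diffusion or DALL-E 2 are implemented: the "image" is just a short list of numbers, and `oracle_denoiser` is a hypothetical stand-in that simply returns the target, where a real model would use a trained neural network conditioned on the text prompt.

```python
import math
import random


def oracle_denoiser(x, signal_level, target):
    """Hypothetical stand-in for a trained network that predicts the clean
    image. A real model would infer this from x and the text prompt."""
    return list(target)


def toy_reverse_diffusion(target, steps=10, seed=0):
    """Start from pure Gaussian noise and step toward a predicted clean image.

    signal level s is the fraction of variance that belongs to the image:
    x = sqrt(s) * x0 + sqrt(1 - s) * noise, with s going from 0 to 1.
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # s = 0: nothing but noise
    levels = [i / steps for i in range(steps + 1)]
    history = [x]
    for s, s_next in zip(levels, levels[1:]):
        x0_hat = oracle_denoiser(x, s, target)
        # Noise implied by the current sample and the predicted clean image.
        eps_hat = [(xi - math.sqrt(s) * x0i) / math.sqrt(1.0 - s)
                   for xi, x0i in zip(x, x0_hat)]
        # Re-mix at the next, less noisy level (deterministic, no fresh noise).
        x = [math.sqrt(s_next) * x0i + math.sqrt(1.0 - s_next) * ei
             for x0i, ei in zip(x0_hat, eps_hat)]
        history.append(x)
    return x, history


final, history = toy_reverse_diffusion([0.5, -1.0, 2.0], steps=10)
```

Each entry in `history` holds a little more "image" and a little less noise than the one before it. Stable Diffusion runs this kind of loop in a compressed latent space rather than on raw pixels.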

The method turns out to be very amenable to fine-tuning: you give the model a lot of a specific kind of content so that it specializes in producing more examples of that content. Fine-tune it on watercolors or on photos of cars, for instance, and it gets better at reproducing those things.

That is what Seth Forsgren and Hayk Martiros did for their hobby project Riffusion: they fine-tuned Stable Diffusion on spectrograms.

"We started the project because we love music and didn't know if it would even be possible for Stable Diffusion to create a spectrogram image with enough fidelity to convert into audio," the creators said. "We have been more and more impressed by what is possible, and one idea leads to the next."

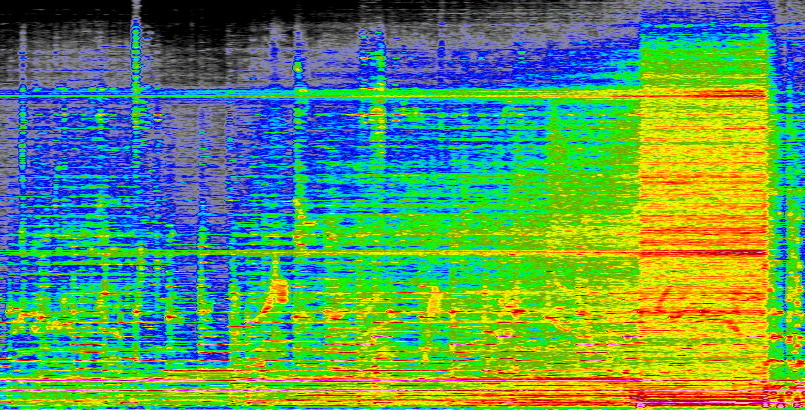

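What's a spectrogram, you ask? It's a visual representation of audio that shows the amplitude of different frequencies over time. You have probably seen waveforms, which show total volume over time and make audio look like a series of hills and valleys. Imagine if, instead of just total volume, the picture showed the volume of each frequency, from the low end to the high end.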
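For the curious, here is a minimal sketch of how a basic magnitude spectrogram can be computed with a short-time Fourier transform: chop the audio into overlapping frames, window each one, and measure how much of each frequency it contains. The frame size, hop length and 8 kHz sample rate are arbitrary toy values, and this plain linear-frequency version is not necessarily the exact variant Riffusion uses. The output is a list of time columns; in an image, each column would become a vertical strip with low frequencies at the bottom.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Magnitude spectrogram via a naive short-time Fourier transform.

    Returns one column per time frame; each column holds the amplitude of
    frequency bins 0 .. frame_size // 2, from low to high.
    """
    # Periodic Hann window tapers each frame to reduce spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size)
              for n in range(frame_size)]
    columns = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        column = []
        for k in range(frame_size // 2 + 1):
            # Naive DFT of one bin; a real implementation would use an FFT.
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            column.append(abs(acc))
        columns.append(column)
    return columns


SAMPLE_RATE = 8000  # toy value
# A 1 kHz tone that gets louder over time, like a song building up.
swell = [(t / 1024) * math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE)
         for t in range(1024)]
spec = spectrogram(swell)
```

With these settings each bin is 125 Hz wide, so the 1 kHz tone shows up as a bright line in bin 8, and that line gets brighter from left to right as the tone swells.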

Here's a spectrogram I made of part of a song, in case you're wondering what one looks like.

Image credit: Coldewey

If you know what to look for, you can see how it gets louder as the song goes on. The process isn't perfect or lossless, but it is an accurate representation of the sound, and you can turn a spectrogram back into sound by doing the same thing in reverse.

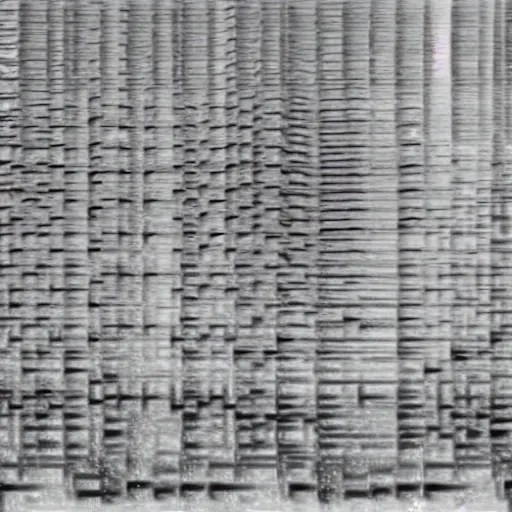

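The creators made spectrograms of a collection of music and tagged the resulting images with relevant terms such as "blues guitar," "jazz piano," and "afrobeat." Feeding this collection to the model gave it a good idea of what those kinds of music look like as spectrograms.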

Here's what the diffusion process looks like if you sample it partway through refining an image:

Image credit: Hayk Martiros

And indeed, the model proved capable of producing spectrograms that, when converted to sound, are a pretty good match for prompts involving things like jazz and piano. Here's an example:

Image credit: Hayk Martiros

But a square spectrogram can only hold a short clip; a three-minute song would be a much, much wider rectangle. No one wants to listen to music five seconds at a time, but the limitations of the system they had created meant they couldn't just generate one enormous, extra-wide spectrogram.
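The back-of-the-envelope arithmetic shows why. This sketch assumes a square clip 512 pixels wide (a standard Stable Diffusion resolution, assumed here rather than taken from the article) covering roughly five seconds of audio, and asks how wide the image would have to be to hold a whole song at the same time resolution.

```python
import math


def width_needed(song_seconds, clip_seconds=5.0, clip_width_px=512):
    """Pixels of spectrogram width needed to hold a whole song at the same
    time resolution as one square clip (512 px and ~5 s are assumptions)."""
    return math.ceil(song_seconds * clip_width_px / clip_seconds)


def clips_needed(song_seconds, clip_seconds=5.0):
    """How many square clips it would take to cover the song end to end."""
    return math.ceil(song_seconds / clip_seconds)


three_minutes = 3 * 60
print(width_needed(three_minutes))  # 18432 pixels wide, still 512 tall
print(clips_needed(three_minutes))  # 36 separate clips
```

Under those assumptions a three-minute song works out to an image roughly 36 times wider than it is tall, far from the square images the model was built around.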

After trying a few things, they took advantage of the fundamental structure of large models like Stable Diffusion, which have a great deal of "latent space." This is a bit like the no-man's-land between more well-defined areas of the model. If one area of the model represents cats and another represents dogs, what lies "between" them is latent space that, if you asked the AI to draw it, would come out as some kind of dog-cat, even though no such animal exists.

Latent space gets a lot weirder than that.


There are no nightmare worlds for the Riffusion project, though. Instead, they found that if you have two prompts, like "church bells" and "electronic beats," you can step from one to the other a bit at a time, and the music gradually fades from one into the other.
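One common way to "step" between two points in latent space is spherical linear interpolation (slerp), sketched below. Treat this as an illustration under assumptions: the article doesn't say exactly how Riffusion interpolates, and the 2-D vectors here are toy stand-ins for the much larger latents a text encoder and noise seed would actually produce for prompts like "church bells" and "electronic beats." In a real pipeline, each intermediate vector would be decoded into its own spectrogram clip.

```python
import math


def slerp(t, a, b):
    """Spherical linear interpolation between vectors a and b, 0 <= t <= 1.

    Often preferred over a straight line for Gaussian noise latents because
    intermediate points keep a similar magnitude to the endpoints.
    """
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    theta = math.acos(cos_theta)
    if theta < 1e-6:  # nearly parallel: plain linear interpolation is fine
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    sin_theta = math.sin(theta)
    w_a = math.sin((1 - t) * theta) / sin_theta
    w_b = math.sin(t * theta) / sin_theta
    return [w_a * x + w_b * y for x, y in zip(a, b)]


def interpolation_steps(a, b, n):
    """n evenly spaced points from a to b, including both endpoints."""
    if n < 2:
        raise ValueError("need at least two steps")
    return [slerp(i / (n - 1), a, b) for i in range(n)]


# Toy latents standing in for "church bells" and "electronic beats".
bells = [1.0, 0.0]
beats = [0.0, 1.0]
path = interpolation_steps(bells, beats, 5)
```

Because neighboring steps are close together in latent space, consecutive clips tend to sound similar, which is what makes the fade from one prompt to the other feel gradual.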

Remember, they weren't even sure diffusion models could do this at all, so the ease with which this one turns bells into beats, or typewriter taps into piano and bass, is pretty remarkable.

Producing longer-form clips is possible in principle, but still theoretical.

Forsgren said that they haven't really tried to create a classic three-minute song with repeating choruses and verses. In his view, it could be done with some clever tricks, such as building a higher-level model for song structure and then using the lower-level model for individual clips. Alternatively, their model could be trained on much larger images of full songs.
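Here is a toy sketch of the two-level idea Forsgren describes, purely for illustration. The "higher-level model" is replaced by a hard-coded, hypothetical song plan, and the section-to-prompt mapping is also hypothetical; the function just expands the plan into a schedule of short clip requests that a clip-level model like Riffusion's could, in principle, fill in. Nothing here reflects code the Riffusion authors have published.

```python
import math

# Hypothetical output of a higher-level "song structure" model:
# (section label, duration in seconds). Hard-coded here for illustration.
STRUCTURE = [("verse", 30), ("chorus", 20), ("verse", 30), ("chorus", 20)]

# Hypothetical mapping from section label to a clip-level text prompt.
SECTION_PROMPTS = {"verse": "jazz piano", "chorus": "jazz piano, full band"}


def plan_clips(structure, prompts, clip_seconds=5):
    """Expand a song-structure plan into (start_second, prompt) requests
    for a lower-level model that only produces short clips."""
    schedule = []
    t = 0
    for section, seconds in structure:
        for _ in range(math.ceil(seconds / clip_seconds)):
            schedule.append((t, prompts[section]))
            t += clip_seconds
    return schedule


schedule = plan_clips(STRUCTURE, SECTION_PROMPTS)
```

Because every chorus maps back to the same prompt, the plan naturally produces the repetition that a single five-second clip can't capture on its own.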

Where does it go from here? Other groups are trying to create AI-generated music in a variety of ways.


According to Forsgren, Riffusion is more of a demo than a plan to change music.

"We are excited to learn from the many directions we could take here," he said. "Other people are already building their own ideas on top of our code. The Stable Diffusion community is quick to build on things in directions that the original authors can't predict."

You can try it out in a live demo at Riffusion.com, but you might have to wait a bit for your clip to render, since this got more attention than the creators were expecting. If you have the chips for it, you can run your own as well.