There is far too much machine learning and artificial intelligence research for anyone to read it all. The Perceptron column aims to collect some of the most relevant recent discoveries and papers and explain why they matter.

This month, Meta described two recent innovations from the depths of its research labs. Elsewhere, scientists at MIT are using acoustic information to help machines better envision their environments.

Meta's compression work doesn't exactly reach unexplored territory: a neural audio codec was already announced last year. But Meta claims that its system is the first to work for CD-quality, stereo audio, making it useful for commercial applications.

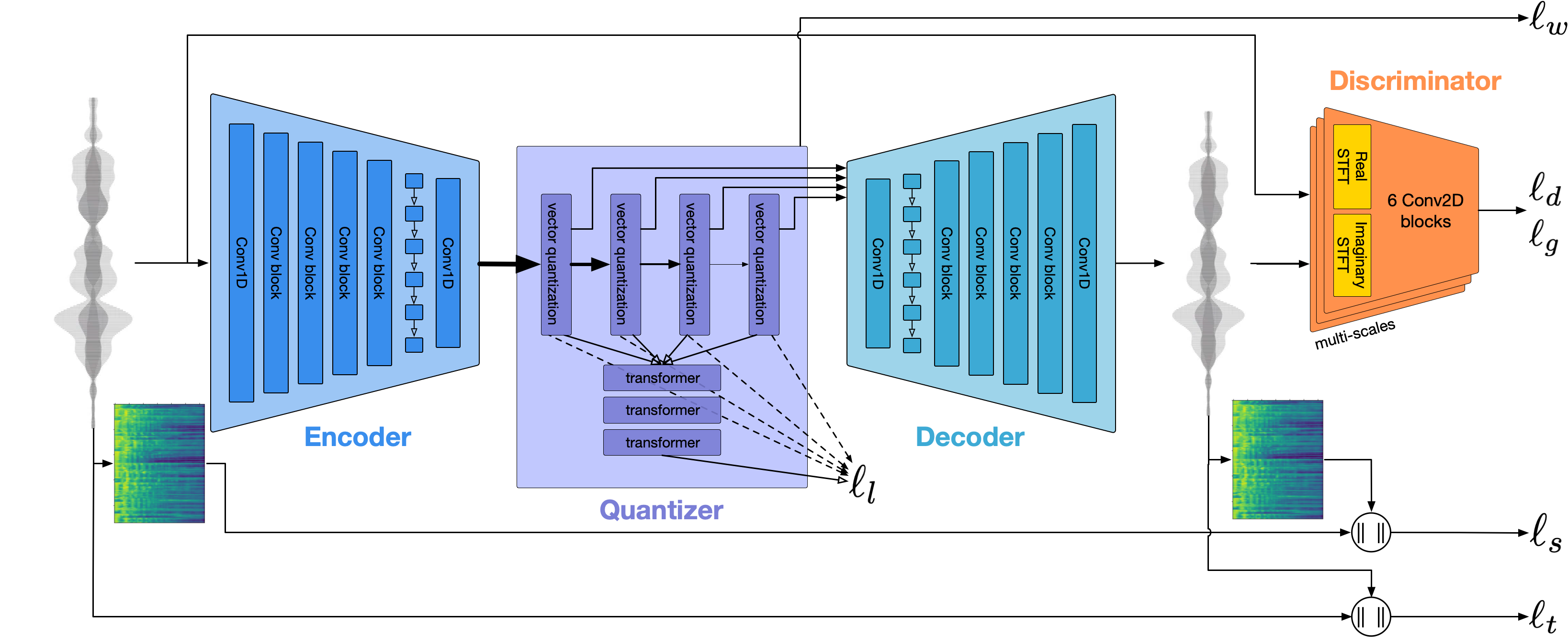

A diagram of Meta's audio compression model. Image credit: Meta.

Meta's compression system, called Encodec, can compress and decompress audio in real time on a single CPU core at rates of 1.5kbps to 12kbps. Compared to MP3 at 64kbps, Encodec can achieve a roughly 10x compression rate.

Encodec could eventually be used to deliver better-quality audio in situations where bandwidth is constrained or at a premium, according to the researchers behind the project.
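To put those bitrates in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the standard CD audio format (44,100 samples per second, 16 bits per sample, two channels), a hypothetical three-minute track, and reads the 10x figure as a reduction relative to a 64kbps stream. The function and variable names are illustrative and do not come from Meta.

```python
def size_kilobytes(bitrate_kbps: float, seconds: float) -> float:
    """Size of an audio stream in kilobytes (1 kB = 1000 bytes)."""
    return bitrate_kbps * 1000 * seconds / 8 / 1000


TRACK_SECONDS = 180  # hypothetical three-minute track

# Uncompressed CD-quality stereo: 44,100 samples/s x 16 bits x 2 channels.
cd_kbps = 44_100 * 16 * 2 / 1000

# Assumption: "10x" is read as one tenth of a 64 kbps stream.
ten_x_kbps = 64.0 / 10

bitrates_kbps = {
    "CD (uncompressed)": cd_kbps,
    "64 kbps stream": 64.0,
    "12 kbps (top of quoted range)": 12.0,
    "one tenth of 64 kbps": ten_x_kbps,
    "1.5 kbps (bottom of quoted range)": 1.5,
}

for label, kbps in bitrates_kbps.items():
    size = size_kilobytes(kbps, TRACK_SECONDS)
    print(f"{label:36s} {kbps:8.1f} kbps {size:10.1f} kB")
```

Under these assumptions, one tenth of 64kbps falls inside the 1.5kbps to 12kbps range quoted for Encodec, which is consistent with the claim as read here.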

Meta's protein-folding work has less immediate commercial potential. But it could lay the groundwork for important scientific research in biology.

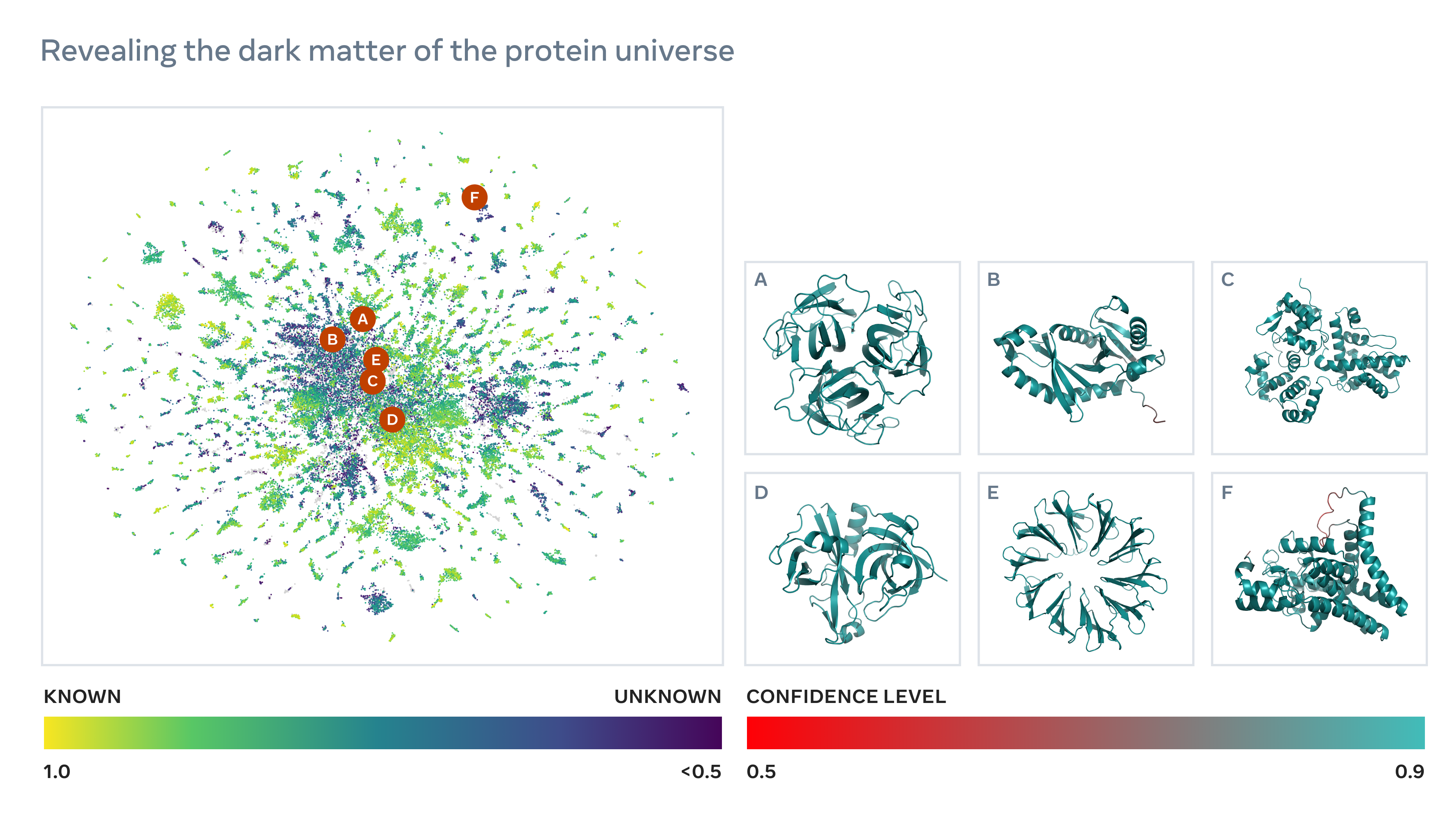

Protein structures predicted by Meta's system. Image credit: Meta.

Meta says its artificial intelligence system predicted the structures of roughly 600 million proteins from organisms that have not yet been characterized. That is nearly triple the 220 million structures that DeepMind was able to predict earlier this year.

Meta's system isn't as accurate as DeepMind's. Of the roughly 600 million structures it generated, only a third were high quality. But it is 60 times faster at predicting structures.

Meta's artificial intelligence division also detailed this month a system designed to reason mathematically. Researchers at the company say that their "neural problem solver" learned from a dataset of successful mathematical proofs to generalize to new, different kinds of problems.

Meta isn't the first to build such a system. In February, OpenAI announced that it had developed its own, which works with the Lean theorem prover. DeepMind, too, has been experimenting with systems that can solve difficult mathematical problems. But according to Meta, its neural problem solver was able to solve five times more International Math Olympiad problems than any previous artificial intelligence system.

The fields of software verification, cryptography and even aerospace could be improved by the use of math-solving artificial intelligence.

Research scientists at MIT developed a machine learning model that can be used to understand acoustics in a room. By modeling the acoustics, the system can learn a room's geometry from sound recordings.

The researchers say the tech could be used in virtual and augmented reality software that has to navigate complex environments. They plan to improve the system so that it can generalize to larger scenes, such as entire buildings or even whole towns and cities.

Two separate teams are accelerating the rate at which a quadrupedal robot can learn to walk. One team looked to combine the best-of-breed work from numerous other advances in reinforcement learning, allowing a robot to go from blank slate to robust walking in just 20 minutes.

The researchers wrote that, with several careful design decisions, it is possible for a quadrupedal robot to learn to walk from scratch with deep RL in under 20 minutes, and that this does not require novel algorithmic components.

Instead, they combine some state-of-the-art approaches and get amazing results.

A robot dog demonstration in Berkeley, California. Image credit: Philipp Wu/Berkeley Engineering.

The other project was described as "training an imagination." The robot is given the ability to attempt predictions of how its actions will work out. Though it starts out helpless, it quickly gains knowledge about the world and how it works. That leads to better predictions, which lead to better knowledge, and so on in a feedback loop until, in less than an hour, it is walking. It learns just as quickly to recover from being pushed or otherwise disturbed.
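The predict, act, compare, update loop described above can be illustrated with a toy sketch. This is not the researchers' method: it assumes a made-up one-dimensional system with linear "physics" and a simple least-mean-squares update, and every name and number in it is hypothetical. It only shows how prediction error shrinks as a learner gathers experience.

```python
import random


def true_step(position: float, action: float) -> float:
    """Hidden 'physics' the learner never sees directly (made-up numbers)."""
    return 0.9 * position + 0.5 * action


def train_world_model(steps: int = 2000, lr: float = 0.05, seed: int = 0):
    """Learn to predict the next position from the current one and an action."""
    rng = random.Random(seed)
    a_hat, b_hat = 0.0, 0.0  # the model starts out knowing nothing
    position = 0.0
    errors = []
    for _ in range(steps):
        action = rng.uniform(-1.0, 1.0)                 # try something
        predicted = a_hat * position + b_hat * action   # imagine the outcome
        actual = true_step(position, action)            # see what really happens
        error = actual - predicted
        # Nudge the model toward what was observed (least-mean-squares update).
        a_hat += lr * error * position
        b_hat += lr * error * action
        errors.append(abs(error))
        position = actual
    return a_hat, b_hat, errors


a_hat, b_hat, errors = train_world_model()
early = sum(errors[:100]) / 100
late = sum(errors[-100:]) / 100
print(f"learned a={a_hat:.3f}, b={b_hat:.3f}")
print(f"mean prediction error: first 100 steps {early:.4f}, last 100 steps {late:.2e}")
```

A real robot faces noisy, high-dimensional dynamics and must also choose good actions, so this sketch captures only the "better predictions from more experience" half of the loop.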

Work with a potentially more immediate application came earlier this month out of Los Alamos National Laboratory, where researchers developed a machine learning technique to predict the friction that occurs during earthquakes. The team was able to project the timing of the next earthquake using the statistical features of the signals from the fault.

Chris Johnson, one of the research leads on the project, said that making a future prediction from past data goes beyond describing the instantaneous state of the system.

Image credit: Dreamstime.

Applying the technique in the real world is challenging, because it is not clear whether there is enough data to train the forecasting system. Still, the researchers are optimistic about applications such as predicting damage to bridges and other structures.

MIT researchers warned last week that neural networks used to simulate biological neural networks should be carefully examined for training bias.

Neural networks are, of course, based on the way our brains process and signal information, but that does not mean synthetic and real ones work the same way. The MIT team found that neural network-based simulations of grid cells only produced similar activity when they were carefully constrained to do so by their creators. When the simulated cells were allowed to govern themselves, they did not produce the desired behavior.

That does not mean deep learning models are useless in this domain; they are very useful. "They can be a powerful tool, but one has to be very circumspect in interpreting them and in determining whether they are truly making de novo predictions, or even shedding light on what it is that ..."