The ancient board game Go is very popular in the world of deep- learning. The strongest Go-playing artificial intelligence was defeated by the best human Go players. AlphaGo uses deep- learning neural networks to teach itself the game at a level humans cannot match. KataGo is an artificial intelligence that can beat top human Go players.

A group of researchers published a paper last week detailing a way to defeat KataGo. A weak Go-playing program that amateur humans can defeat can trick KataGo into losing.

We spoke to one of the paper's co- authors, Adam Gleave, who is a PhD candidate at UC Berkeley. Gleave was a part of the team that developed what is called an "Arbitrary Policy". The researchers used a mixture of a neural network and a tree- search method to find Go moves.

In order to learn Go, KataGo had to play millions of games against itself. It's not enough experience to cover every scenario, which leaves room for unforeseen behavior. "KataGo generalizes well to many novel strategies, but it does get weaker the further away it gets from the games it saw during training." There are likely many other off-distribution strategies that KataGo is vulnerable to.

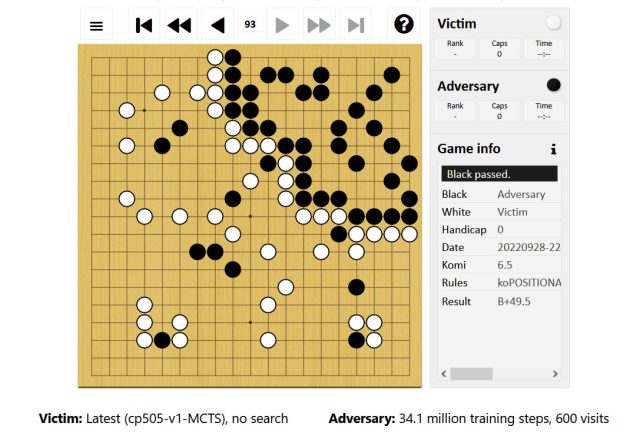

During a Go match, the policy works by first claiming a small part of the board. The adversary controls the black stones and plays mostly in the top right of the board. The adversary allows KataGo to lay claim to the rest of the board while the opponent plays a few easy-to-capture stones in that territory.

Advertisement

"This trick tricks KataGo into thinking it's already won since its territory is larger than the adversary's," Gleave says. The bottom- left area is not fully secured because of the presence of black stones.

As a result of its overconfidence in a win, katago plays a pass move that allows the adversary to pass as well, ending the game. The game ends in Go. A point tally starts after that. The paper states that the adversary gets points for its corner territory and the victim doesn't get points for itsUnsecured territory because of the adversary's stones.

The policy alone isn't good at Go. Humans can defeat it fairly easily. The only purpose of the adversary is to attack KataGo. It's possible that a similar scenario could be the case in almost any deep- learning artificial intelligence system.

According to Gleave, the research shows that artificial intelligence systems that seem to perform at a human level are often doing so in a very alien way. Similar failures in safety-critical systems could be dangerous.

A human can trick a self-driving car into doing dangerous behaviors if it encounters a wildly unlikely scenario. "This research underscores the need for better automated testing of artificial intelligence systems to find worst-case failure modes," says Gleave.

The ancient game of Go continues to have an influence on machine learning a half decade after it was first won over by artificial intelligence. Insights into the weaknesses of Go- playing may save lives.