The UK's media watchdog, Ofcom, has published a debut report on its first year regulating a selection of video sharing platforms.

Reducing the risk of children being exposed to age-inappropriate content is also one of the things the UK's data protection watchdog is monitoring.

The Online Safety Bill, which was paused last month following the arrival of the new UK prime minister and her newly appointed minister heading up digital issues, is a more controversial piece of online content regulation that has been in the works for years.

There are questions about whether the Online Safety Bill can survive in the UK. If the government doesn't legislate for the wider online safety rules before the end of the year, the VSP regulation may end up sticking around longer and doing more heavy lifting.

The draft Online Safety Bill, which sets up Ofcom as the UK's chief internet content regulator, attracted a lot of controversy before it was parked by the new secretary of state for digital. Liz Truss, the new prime minister, has unleashed a flurry of radical libertarian economic policies that succeeded in scaring the financial markets and undermining her authority. The bill's fate now looks as uncertain as the government's.

Ofcom is regulating a subset of digital services. It has powers to act against video-sharing platforms that fail to address online harms by putting in place appropriate governance systems and processes, including issuing enforcement notifications that require a platform to take specified actions and/or imposing a financial penalty.

There is a regulatory gap because the VSP regulation only applies to a subset of video sharing platforms.

Despite both allowing users to share their own videos, they are not included in the list of VSPs which have notified Ofcom. At the time of writing, Ofcom hadn't responded to our questions about notification criteria on non-notified platforms.

It's up to platforms to determine if the regulation applies to them. A number of smaller, UK-based adult-themed content/creator sites, as well as gaming streamer and extreme, can be found on the full list of video-sharing platforms.

To work out whether they need to notify under the VSP regulation, providers must consider whether their service as a whole, or a dissociable section of it, meets the criteria.

Ofcom's guidance and plan for overseeing the rules weren't published until October 2021, which is why it is only now reporting on its first year of oversight.

Ofcom has the power to request information and assess a service if it suspects the service meets the statutory criteria but has not self-notified. Video-sharing services that try to evade the rules by pretending they don't apply aren't likely to go unnoticed for too long.

The regulator's first phase of oversight has centred on information gathering. Ofcom has the power to gather information from notified platforms about what they are doing to protect users.

TikTok, for example, relies on proactive detection of harmful video content rather than reactive user reporting, with user reports leading to just 4.6% of video removals, according to the report. Many regulated VSPs also enforce on-platform sanctions for "severe" off-platform conduct, such as terrorist activities or sexual exploitation of children.

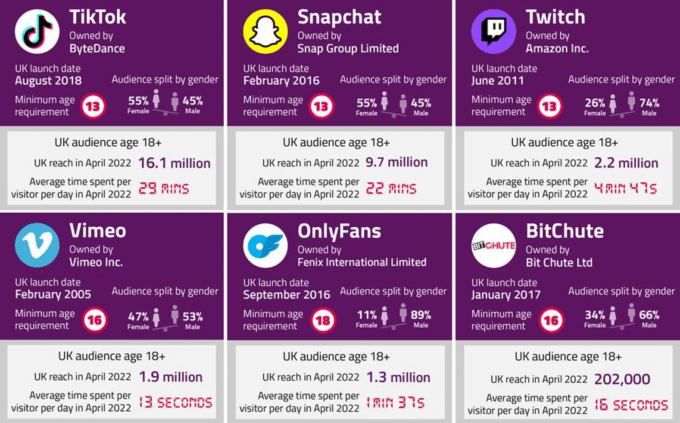

The six biggest video sharing platforms in the UK.

Snapchat is the only partially regulated video-sharing service, since only two areas of the app, Spotlight and Discover, are currently notified.

The report states that the platform uses tools to estimate the age of users on its platform to try to identify users who misrepresented their age on sign up.

This is based on a number of factors and is used to stop users under the age of 18 from seeing content that is inappropriate for them. It goes on to say that the first year of information gathering on platforms still has a lot of blanks.

The first report reads as a flag planted by the regulator to signify that it has arrived at base camp and is ready to spend as long as necessary to conquer the content regulation mountain.

Ofcom is one of the first regulators in Europe to do this. Today's report is the first of its kind under these laws, and it reveals information not previously seen from these companies.

Melanie Dawes, Ofcom's CEO, said:

“Today’s report is a world first. We’ve used our powers to lift the lid on what UK video sites are doing to look after the people who use them. It shows that regulation can make a difference, as some companies have responded by introducing new safety measures, including age verification and parental controls.

“But we’ve also exposed the gaps across the industry, and we now know just how much they need to do. It’s deeply concerning to see yet more examples of platforms putting profits before child safety. We have put UK adult sites on notice to set out what they will do to prevent children accessing them.”

The lack of age-verification measures on UK-based adult-themed content sites is one of Ofcom's top concerns.

Smaller UK-based adult sites don't have strong measures in place to prevent children from accessing pornography. All of them have age verification in place when users sign up to post content. However, users can generally access adult content just by self-declaring that they are over 18.

The report says OnlyFans has responded by adopting age verification for all new UK subscribers, using third-party tools provided by Yoti and Ondato.

The name-dropping of a UK startup here does not look accidental, given the government's stated aim of making the country the safest place in the world to be online. That policy priority favors spotlighting an expanding cottage industry of UK 'safety tech' while skipping over the huge compliance bureaucracy that the online safety regime will impose.

Ofcom is cranking up the pressure on regulated platforms to do more as part of a push to scale the use of 'Made in Britain SafetyTech'.

According to the new research published by Ofcom, a large majority of people don't mind proving their age online in general, and a small majority of people expect to have to do so for certain online activities. I don't know what to say...

This research has been taken from a number of different studies, including reports on the VSP Landscape, the VSP Parental Guidance research, and the Adults Attitudes to Age-Verification on adult sites.

Ofcom warns that it will be stepping up action over the next year to compel porn sites to adopt age verification.

Over the next year, adult sites that are already regulated must have a clear plan to implement age verification measures. They can face enforcement action if they don't. Future Online Safety laws will give Ofcom more power to protect children from adult content.

Earlier this year, the government indicated that the Online Safety Bill would include age verification for adult sites in order to make it harder for kids to access pornography.

The 114-page "first-year" report of Ofcom's VSP rules oversight goes on to flag a concern about what it describes as the "limited" evidence platforms provided it with.

Ofcom says some platforms are not sufficiently prepared to meet the requirements, and that it wants to see platforms provide more comprehensive responses to its information requests in the future.

Risk assessment will be a key area of focus, with the report noting: "Risk assessments will be a requirement on all regulated services under the new rules."

The coming year will focus on how platforms set, enforce, and test their approach to user safety, as well as ensuring they have sufficient systems and processes in place to set out and uphold community guidelines.

Even if online safety legislation is frozen due to ongoing UK political instability, safety tech, digital ID and age assurance will be ready for growth.

More detailed scrutiny of platforms' systems and processes will be among Ofcom's priorities for Year 2.

As well as OnlyFans adopting age verification for all new UK subscribers, Ofcom's report flags other positive changes it says have been made by some of the other larger platforms.

It welcomes the recently launched parental control feature by Snapchat, called Family Center, which allows parents and guardians to view a list of their child's conversations without seeing the content of the messages.

Vimeo now only allows material rated 'all audiences' to be visible to users without an account, with content that's rated 'mature' or 'unrated' automatically put behind a login screen. It also carried out a risk assessment in response to the regime.

Changes at BitChute include adding "Incitement to Hatred" to its prohibited content terms, as well as increasing the number of people it has working on content moderation.

The report acknowledges that more change is needed for the regime to have the desired impact, and that many platforms are not adequately equipped, prepared and resourced for regulation.

The report made clear that risk assessment will be a cornerstone of the UK's online safety regime.

The regulation process is just beginning.

We expect companies to set and enforce terms and conditions for their users and quickly remove or restrict harmful content when they become aware of it. We will review the tools provided by platforms to their users for controlling their experience and expect them to set out clear plans for protecting children from the most harmful online content.

Ofcom has recently opened a formal investigation into one firm, Tapnet, after it failed to comply with an information request.
