Matrix multiplication is at the center of much of machine learning, and it just got faster. Last week, DeepMind announced it had found a more efficient way to perform matrix multiplication. Now two Austrian researchers claim they have beaten DeepMind's new record by one step.

Matrix multiplication is used in speech recognition, image recognition, smartphone image processing, compression, and computer graphics. Graphics processing units (GPUs) are excellent at matrix multiplication because of their parallel design: a big matrix problem can be diced into many smaller pieces, and those pieces can be attacked at the same time with a special algorithm.
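The "dicing" idea can be illustrated with plain block (tiled) matrix multiplication: each tile of the output depends only on one row of tiles of A and one column of tiles of B, so tiles can be computed independently. The following is a minimal pure-Python sketch under that assumption; it shows ordinary tiling rather than the faster algorithms discussed below, real GPU libraries tile far more elaborately, and the function names are illustrative rather than taken from any library.

```python
from typing import List

Matrix = List[List[int]]


def naive_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Reference row-times-column product of two square matrices."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def block_matmul(a: Matrix, b: Matrix, block: int) -> Matrix:
    """Tiled product; assumes square matrices whose size is a multiple of `block`."""
    n = len(a)
    if n % block != 0:
        raise ValueError("matrix size must be a multiple of the block size")
    c = [[0] * n for _ in range(n)]
    # Each (bi, bj) output tile is an independent piece of work: it reads one
    # row of tiles from A and one column of tiles from B and writes only its own
    # tile, so different tiles could run on different processors in parallel.
    for bi in range(0, n, block):
        for bj in range(0, n, block):
            for bk in range(0, n, block):
                # Accumulate tile A[bi, bk] times tile B[bk, bj] into tile C[bi, bj].
                for i in range(bi, bi + block):
                    for j in range(bj, bj + block):
                        c[i][j] += sum(a[i][k] * b[k][j] for k in range(bk, bk + block))
    return c


a = [[(3 * i + 5 * j) % 7 - 3 for j in range(4)] for i in range(4)]
b = [[(2 * i - j) % 5 for j in range(4)] for i in range(4)]
assert block_matmul(a, b, block=2) == naive_matmul(a, b)
```

The tiled result matches the reference product for any block size that divides the matrix size; only the order in which the work is grouped changes.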

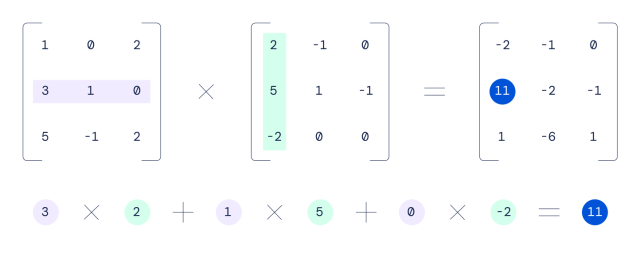

The previous best algorithm for multiplying 4×4 matrices was discovered in 1969 by the German mathematician Volker Strassen. The traditional schoolroom method takes 64 multiplications to multiply two 4×4 matrices, while Strassen's method needs only 49.
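Strassen's trick multiplies two 2×2 matrices with 7 multiplications instead of 8. Applying it recursively to a 4×4 matrix, treated as a 2×2 grid of 2×2 blocks, gives 7 × 7 = 49 scalar multiplications versus 4³ = 64 for the schoolroom method. A minimal pure-Python sketch that counts scalar multiplications, assuming square matrices whose size is a power of two (the helper names are illustrative):

```python
from typing import List, Tuple

Matrix = List[List[int]]


def add(x: Matrix, y: Matrix) -> Matrix:
    return [[p + q for p, q in zip(rx, ry)] for rx, ry in zip(x, y)]


def sub(x: Matrix, y: Matrix) -> Matrix:
    return [[p - q for p, q in zip(rx, ry)] for rx, ry in zip(x, y)]


def split(m: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """Split a square matrix into top-left, top-right, bottom-left, bottom-right quadrants."""
    h = len(m) // 2
    return ([r[:h] for r in m[:h]], [r[h:] for r in m[:h]],
            [r[:h] for r in m[h:]], [r[h:] for r in m[h:]])


def join(c11: Matrix, c12: Matrix, c21: Matrix, c22: Matrix) -> Matrix:
    """Reassemble four quadrants into one matrix."""
    top = [left + right for left, right in zip(c11, c12)]
    bottom = [left + right for left, right in zip(c21, c22)]
    return top + bottom


def schoolbook(a: Matrix, b: Matrix) -> Tuple[Matrix, int]:
    """Row-times-column product; returns (product, number of scalar multiplications)."""
    n = len(a)
    c = [[0] * n for _ in range(n)]
    mults = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                c[i][j] += a[i][k] * b[k][j]
                mults += 1
    return c, mults


def strassen(a: Matrix, b: Matrix) -> Tuple[Matrix, int]:
    """Recursive Strassen product; returns (product, number of scalar multiplications)."""
    mults = 0

    def mul(x: Matrix, y: Matrix) -> Matrix:
        nonlocal mults
        if len(x) == 1:
            mults += 1  # the only place a scalar multiplication happens
            return [[x[0][0] * y[0][0]]]
        a11, a12, a21, a22 = split(x)
        b11, b12, b21, b22 = split(y)
        # Seven block products instead of the eight the schoolroom method needs.
        m1 = mul(add(a11, a22), add(b11, b22))
        m2 = mul(add(a21, a22), b11)
        m3 = mul(a11, sub(b12, b22))
        m4 = mul(a22, sub(b21, b11))
        m5 = mul(add(a11, a12), b22)
        m6 = mul(sub(a21, a11), add(b11, b12))
        m7 = mul(sub(a12, a22), add(b21, b22))
        c11 = add(sub(add(m1, m4), m5), m7)  # m1 + m4 - m5 + m7
        c12 = add(m3, m5)
        c21 = add(m2, m4)
        c22 = add(add(sub(m1, m2), m3), m6)  # m1 - m2 + m3 + m6
        return join(c11, c12, c21, c22)

    return mul(a, b), mults


x = [[1, 2, 0, -1], [3, 1, 4, 2], [0, -2, 1, 5], [2, 2, -3, 1]]
y = [[2, 0, 1, 3], [-1, 4, 2, 0], [5, 1, 0, -2], [1, 3, -1, 2]]
c_school, n_school = schoolbook(x, y)
c_strassen, n_strassen = strassen(x, y)
assert c_school == c_strassen
print(n_school, n_strassen)  # 64 49
```

The saving comes at a price: Strassen's method performs extra additions and subtractions, which this sketch does not count.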

In a paper published in Nature last week, DeepMind reported reducing that count to 47 multiplications when working in modular (mod 2) arithmetic.

Going from 49 steps to 47 doesn't sound like much, but considering how many trillions of matrix calculations take place on GPUs every day, even incremental improvements can translate into large efficiency gains.
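A rough way to see how two multiplications compound: suppose a 4×4 scheme using $r$ multiplications is applied recursively to $n \times n$ matrices with $n = 4^k$ (an illustrative assumption; the 47-multiplication scheme was reported for arithmetic modulo 2). The total is then $r^k = n^{\log_4 r}$ multiplications:

$$
r = 64:\ n^{3}, \qquad r = 49:\ n^{\log_4 49} = n^{\log_2 7} \approx n^{2.807}, \qquad r = 47:\ n^{\log_4 47} \approx n^{2.777}
$$

At a single level, $47/49 \approx 0.959$, about 4% fewer multiplications; at two levels ($16 \times 16$ matrices), $47^2 = 2209$ versus $49^2 = 2401$, about 8% fewer. This counts multiplications only and ignores the extra additions such schemes require.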


AlphaTensor is a descendant of AlphaGo and AlphaZero. DeepMind describes it as the first artificial intelligence system for discovering novel, efficient, and provably correct algorithms for fundamental tasks such as matrix multiplication.

DeepMind turned the problem into a single-player game. The company described the process in more detail last week:

> In this game, the board is a three-dimensional tensor (array of numbers), capturing how far from correct the current algorithm is. Through a set of allowed moves, corresponding to algorithm instructions, the player attempts to modify the tensor and zero out its entries. When the player manages to do so, this results in a provably correct matrix multiplication algorithm for any pair of matrices, and its efficiency is captured by the number of steps taken to zero out the tensor.
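The board can be made concrete with the standard tensor formulation of matrix multiplication that DeepMind's paper builds on. In this minimal pure-Python sketch, each move subtracts one rank-1 tensor u ⊗ v ⊗ w from the board and costs one multiplication. The row-major flattening, the indexing convention, and all helper names are our own assumptions for illustration, not AlphaTensor's code. It shows that the schoolroom method clears the 2×2 board in 8 moves and Strassen's scheme in 7, and how a winning move sequence turns into a multiplication algorithm.

```python
from itertools import product
from typing import List, Sequence, Tuple

Tensor = List[List[List[int]]]
Move = Tuple[Sequence[int], Sequence[int], Sequence[int]]


def matmul_tensor(n: int) -> Tensor:
    """Starting board for n x n matrix multiplication.

    Entry [a][b][c] is 1 when entry a of A times entry b of B contributes to
    entry c of C = A @ B (all matrices flattened row-major).
    """
    size = n * n
    t = [[[0] * size for _ in range(size)] for _ in range(size)]
    for i, j, k in product(range(n), repeat=3):
        t[i * n + k][k * n + j][i * n + j] = 1
    return t


def play_move(t: Tensor, move: Move) -> None:
    """One move: subtract the rank-1 tensor u (x) v (x) w from the board in place."""
    u, v, w = move
    size = len(t)
    for a, b, c in product(range(size), repeat=3):
        t[a][b][c] -= u[a] * v[b] * w[c]


def is_zero(t: Tensor) -> bool:
    return all(x == 0 for plane in t for row in plane for x in row)


def final_board(n: int, moves: List[Move]) -> Tensor:
    """Play every move on a fresh n x n board and return what is left."""
    board = matmul_tensor(n)
    for move in moves:
        play_move(board, move)
    return board


def schoolbook_moves(n: int) -> List[Move]:
    """One move per scalar product A[i][k] * B[k][j]: n**3 moves in total."""
    size = n * n
    moves: List[Move] = []
    for i, j, k in product(range(n), repeat=3):
        u, v, w = [0] * size, [0] * size, [0] * size
        u[i * n + k], v[k * n + j], w[i * n + j] = 1, 1, 1
        moves.append((u, v, w))
    return moves


# Strassen's 2x2 scheme as 7 moves. Coefficients are over the flattened
# entries (a11, a12, a21, a22), (b11, b12, b21, b22), (c11, c12, c21, c22).
STRASSEN_MOVES: List[Move] = [
    ((1, 0, 0, 1), (1, 0, 0, 1), (1, 0, 0, 1)),    # M1 = (a11 + a22)(b11 + b22)
    ((0, 0, 1, 1), (1, 0, 0, 0), (0, 0, 1, -1)),   # M2 = (a21 + a22) b11
    ((1, 0, 0, 0), (0, 1, 0, -1), (0, 1, 0, 1)),   # M3 = a11 (b12 - b22)
    ((0, 0, 0, 1), (-1, 0, 1, 0), (1, 0, 1, 0)),   # M4 = a22 (b21 - b11)
    ((1, 1, 0, 0), (0, 0, 0, 1), (-1, 1, 0, 0)),   # M5 = (a11 + a12) b22
    ((-1, 0, 1, 0), (1, 1, 0, 0), (0, 0, 0, 1)),   # M6 = (a21 - a11)(b11 + b12)
    ((0, 1, 0, -1), (0, 0, 1, 1), (1, 0, 0, 0)),   # M7 = (a12 - a22)(b21 + b22)
]


def multiply_with_moves(moves: List[Move], a: Sequence[int], b: Sequence[int]) -> List[int]:
    """Turn a winning move sequence into an algorithm: one multiplication per move."""
    c = [0] * len(moves[0][2])
    for u, v, w in moves:
        m = sum(p * q for p, q in zip(u, a)) * sum(p * q for p, q in zip(v, b))
        for idx, coeff in enumerate(w):
            c[idx] += coeff * m
    return c


for name, moves in [("schoolroom", schoolbook_moves(2)), ("Strassen", STRASSEN_MOVES)]:
    print(name, len(moves), "moves, board cleared:", is_zero(final_board(2, moves)))
```

Because the board is cleared exactly, `multiply_with_moves` gives the correct product for every pair of 2×2 matrices, not just the ones tested; the number of moves is the number of multiplications.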

DeepMind trained AlphaTensor to play this math game, much as AlphaGo was trained to play Go. According to DeepMind, AlphaTensor rediscovered Strassen's algorithm and other algorithms previously found by human mathematicians.

AlphaTensor also discovered a new way to multiply 5×5 matrices using 96 multiplications in modular arithmetic. In their paper, Kauers and Moosbauer claim to have reduced that count by one, to 95. It's no coincidence that the new algorithm builds on DeepMind's work: Kauers and Moosbauer wrote that their solution was obtained from the scheme found by the DeepMind researchers.

Other longstanding math records may fall soon as the technology progresses. Just as computer-aided design enabled the development of more complex and faster computers, artificial intelligence may help human engineers accelerate the development of AI itself.