Research in machine learning and artificial intelligence moves too fast for anyone to read it all. This column, Perceptron, aims to collect some of the most relevant recent discoveries and papers and explain why they matter.

The projects that caught our attention over the past few weeks include an "earable" from Cornell that uses sonar to read facial expressions; ProcTHOR, a framework from the Allen Institute for Artificial Intelligence that generates simulated environments which can be used to train real-world robots; an artificial intelligence system from Meta that can predict the structure of a molecule; and new hardware from MIT researchers that they claim is more energy efficient.

The earable looks like a pair of bulky headphones. Its speakers emit acoustic signals toward the sides of the wearer's face, while a microphone picks up the faint echoes created by the nose, lips, eyes, and other facial features. From these echoes, the earable can capture movements such as raised eyebrows and darting eyes.
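Sonar-style sensing boils down to timing echoes. The sketch below is a generic illustration, not Cornell's actual signal pipeline: it assumes a 48 kHz sample rate, a short 16 to 20 kHz chirp as the emitted signal, and a single clean echo, then finds the echo's delay by cross-correlating the recording with the emitted chirp and converts that delay into a distance. The names `chirp` and `estimate_echo_distance` are hypothetical.

```python
import math

SAMPLE_RATE = 48_000     # Hz, assumed audio sample rate
SPEED_OF_SOUND = 343.0   # m/s in air at about 20 degrees C


def chirp(n: int, f0: float, f1: float, rate: int) -> list[float]:
    """Linear frequency sweep from f0 to f1 Hz over n samples."""
    duration = n / rate
    k = (f1 - f0) / duration  # sweep rate in Hz per second
    return [math.sin(2 * math.pi * (f0 * t + 0.5 * k * t * t))
            for t in (i / rate for i in range(n))]


def cross_correlate(received: list[float], template: list[float]) -> list[float]:
    """Correlation score for every lag at which the template fits inside the recording."""
    m = len(template)
    return [sum(received[lag + i] * template[i] for i in range(m))
            for lag in range(len(received) - m + 1)]


def estimate_echo_distance(received, template, rate=SAMPLE_RATE):
    """Return (delay in samples, one-way distance in metres) of the strongest echo."""
    scores = cross_correlate(received, template)
    best_lag = max(range(len(scores)), key=scores.__getitem__)
    round_trip = best_lag / rate  # seconds from emission to echo arrival
    return best_lag, round_trip * SPEED_OF_SOUND / 2


# Simulated recording: silence, then an attenuated copy of the chirp 14 samples later.
pulse = chirp(64, 16_000, 20_000, SAMPLE_RATE)
delay = 14
received = [0.0] * delay + [0.3 * s for s in pulse] + [0.0] * 50

lag, distance = estimate_echo_distance(received, pulse)
print(lag, round(distance, 3))
```

With a 14-sample delay the estimate comes out at roughly 5 cm. A real face returns many overlapping echoes from different features, so turning them into facial expressions takes much more than this single-peak search.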


Image Credits: Cornell

The earable isn't perfect: its battery lasts only three hours, and its processing has to be offloaded to a phone. Still, the researchers say it offers a much sleeker experience than the motion-capture rigs used for animation in movies, TV, and video games. For the mystery game L.A. Noire, for example, a rig with 32 cameras was built.

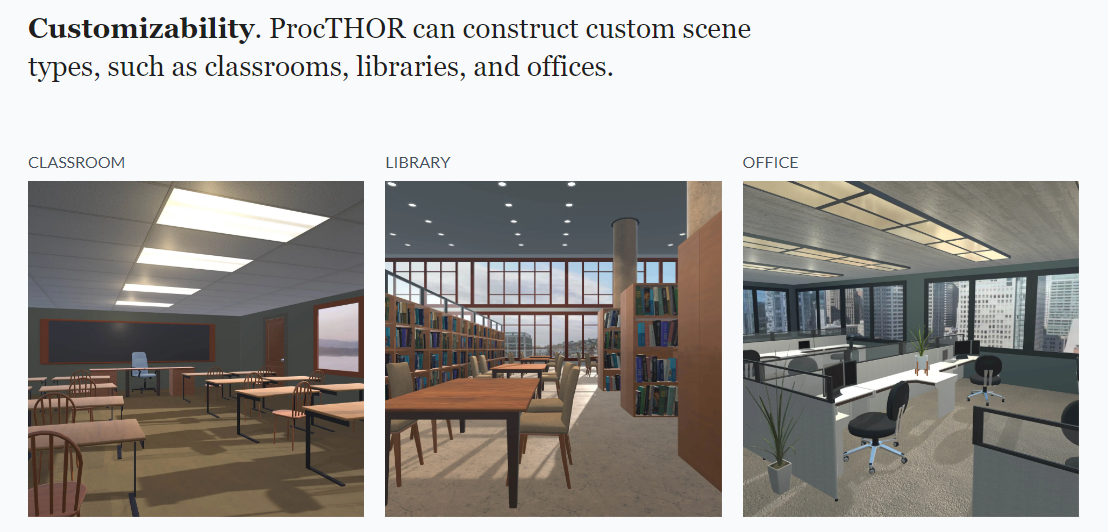

Perhaps one day Cornell's earable will be used to animate humanoid robots. First, though, those robots will have to learn to navigate a room. Fortunately, ProcTHOR takes a step in this direction, procedurally generating thousands of custom scenes, including classrooms, libraries, and offices, in which simulated robots must complete tasks such as picking up objects and moving furniture around.

The idea is to expose the simulated robots to as wide a variety of scenes and objects as possible. It is well established in the field of artificial intelligence that training in simulation can improve the performance of real-world systems.

Image Credits: Allen Institute for Artificial Intelligence

According to the accompanying paper, scaling up the number of training environments improves performance. That's good news for robots bound for homes, workplaces, and elsewhere.
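To make the idea of procedurally generated training scenes concrete, here is a toy generator. It is a minimal sketch and not ProcTHOR's API: the room catalogue, the grid layout, and the names `generate_scene` and `generate_dataset` are all hypothetical. Each scene gets its own seeded random generator, so the whole set is reproducible while the number of environments can still be scaled freely.

```python
import random
from dataclasses import dataclass, field

# Hypothetical object catalogue per room type (not ProcTHOR's actual asset list).
CATALOGUE = {
    "classroom": ["desk", "chair", "whiteboard", "bookshelf", "backpack"],
    "library": ["bookshelf", "armchair", "table", "lamp", "book"],
    "office": ["desk", "chair", "monitor", "plant", "filing_cabinet"],
}


@dataclass
class Scene:
    room_type: str
    width: int
    depth: int
    # Maps a (x, z) floor cell to the object placed there.
    objects: dict[tuple[int, int], str] = field(default_factory=dict)


def generate_scene(seed: int, max_objects: int = 8) -> Scene:
    rng = random.Random(seed)  # per-scene RNG: same seed, same scene
    room_type = rng.choice(sorted(CATALOGUE))
    scene = Scene(room_type, width=rng.randint(4, 10), depth=rng.randint(4, 10))
    cells = [(x, z) for x in range(scene.width) for z in range(scene.depth)]
    n = rng.randint(3, max_objects)
    for cell in rng.sample(cells, n):  # distinct cells, so objects never overlap
        scene.objects[cell] = rng.choice(CATALOGUE[room_type])
    return scene


def generate_dataset(num_scenes: int, base_seed: int = 0) -> list[Scene]:
    return [generate_scene(base_seed + i) for i in range(num_scenes)]


scenes = generate_dataset(1000)
print(len(scenes), sorted({s.room_type for s in scenes}))
```

Because every scene is derived from its own seed, asking for ten times as many environments costs nothing but generation time, and any individual scene can be regenerated exactly for debugging.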

Of course, training these kinds of systems takes a lot of compute power, but that might not be the case forever. Researchers at MIT have created an "analog" processor that can be used to build networks of artificial "neurons" and "synapses," which in turn can perform tasks such as image recognition and language translation.

The processor is built from an array of programmable resistors. Their electrical conductance can be increased and decreased to mimic the strengthening and weakening of synaptic connections in the brain.

An electrolyte regulates the movement of protons through each resistor: pushing more protons in increases its conductance, and removing protons decreases it.
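The arithmetic such a chip performs can be sketched in a few lines. This shows the general principle behind resistive analog accelerators rather than MIT's specific design, and the conductance range, step size, and `Crossbar` class are illustrative assumptions. Each resistor's conductance acts as a weight, Ohm's law turns an input voltage into a current, and the currents on each output wire add up (Kirchhoff's current law), so the array computes a matrix-vector product in one step. Programming pulses that push protons in or pull them out are modelled as fixed increments and decrements.

```python
# Toy model of an analog crossbar built from programmable resistors.
# Assumptions (illustrative only): conductances are in arbitrary units,
# each pulse changes conductance by a fixed step, and the device saturates
# at G_MIN and G_MAX.

G_MIN, G_MAX, STEP = 0.0, 1.0, 0.05


class Crossbar:
    def __init__(self, rows: int, cols: int, g0: float = 0.5):
        # g[i][j] plays the role of the synaptic weight from input j to output i.
        self.g = [[g0] * cols for _ in range(rows)]

    def forward(self, voltages: list[float]) -> list[float]:
        # Ohm's law gives a per-device current G * V; Kirchhoff's current law
        # sums the currents on each output wire: a matrix-vector product.
        return [sum(g_ij * v_j for g_ij, v_j in zip(row, voltages)) for row in self.g]

    def pulse(self, i: int, j: int, n: int) -> None:
        # n > 0: push protons in, conductance goes up ("strengthen the synapse").
        # n < 0: pull protons out, conductance goes down ("weaken the synapse").
        new = self.g[i][j] + n * STEP
        self.g[i][j] = min(G_MAX, max(G_MIN, new))


xbar = Crossbar(rows=2, cols=3)
print(xbar.forward([1.0, 0.0, 1.0]))  # [1.0, 1.0]
xbar.pulse(0, 0, +4)    # strengthen one "synapse"
xbar.pulse(1, 2, -10)   # weaken another, all the way down to G_MIN
print(xbar.forward([1.0, 0.0, 1.0]))
```

Real devices respond nonlinearly and noisily to pulses, and practical designs need extra tricks, such as pairs of devices, to represent negative weights; the sketch leaves all of that out.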

The processor, mounted on a circuit board.

The MIT team's processor is extremely fast because its electrolyte, a type of glass, contains nanometer-sized pores that provide ideal paths for protons to travel through. The glass works at room temperature and isn't damaged by the protons as they move through the pores.

According to the press release, the paper's lead author said that once you have an analog processor, you will no longer be training the same networks everyone else is working on; you will be training networks of unprecedented complexity that no one else can afford to train. In other words, this is not a faster car, it's a spacecraft.

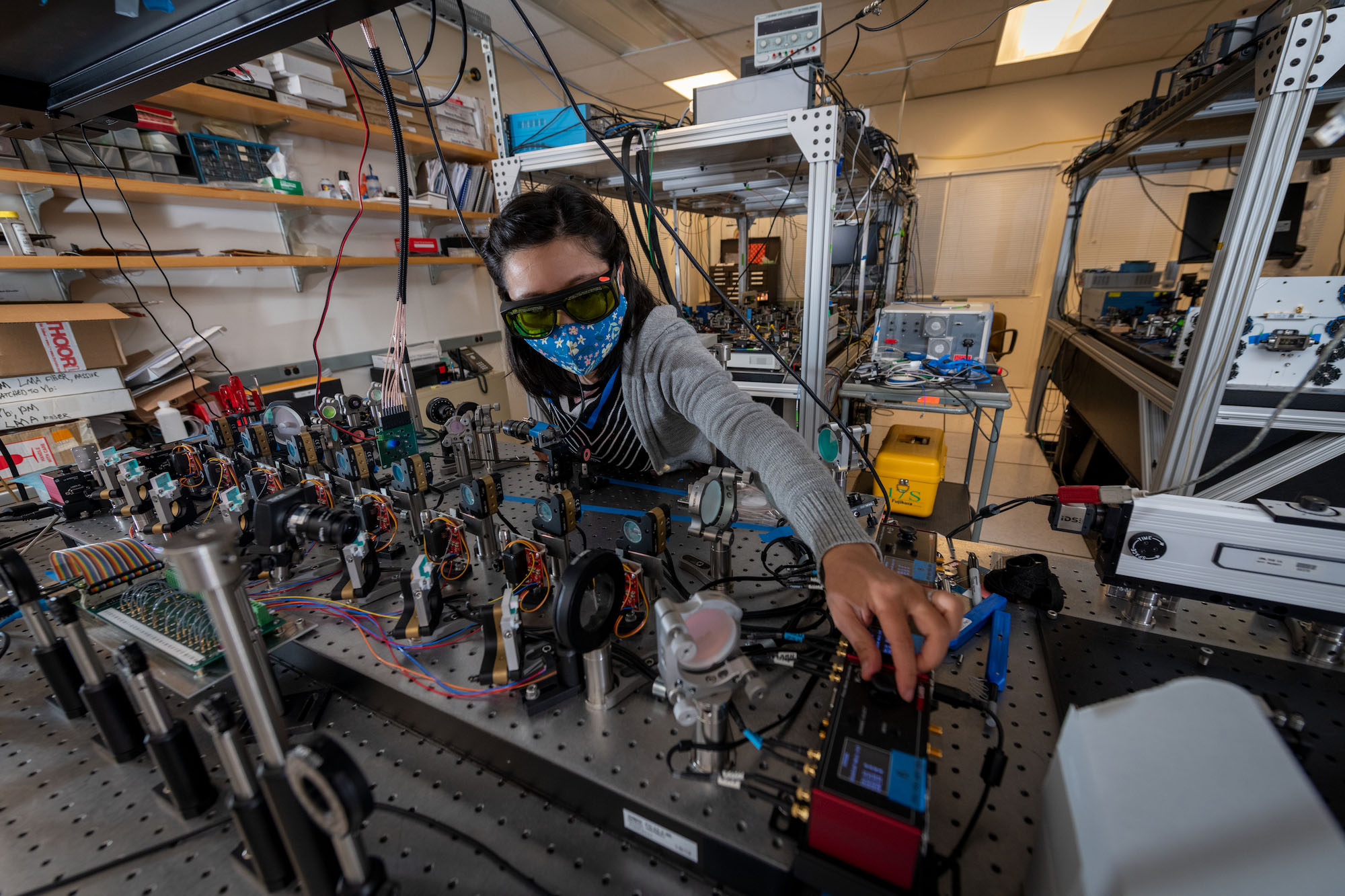

Machine learning is also being used to help manage particle accelerators. Two teams at Lawrence Berkeley National Lab have shown that machine learning-based simulation of the full machine and beam can give them highly precise predictions.

Image Credits: Berkeley Lab

The lab's Daniele Filippetto said that if you can predict the beam properties with an accuracy that surpasses their fluctuations, you can use that prediction to increase the performance of the accelerator. It's no small feat to simulate all the physics and equipment involved, but the teams' early efforts to do so have yielded promising results.
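Filippetto's criterion can be illustrated with a toy comparison: a prediction is useful when its error is smaller than the natural shot-to-shot spread of the quantity being predicted. The sketch below uses synthetic data and a straight-line least-squares fit standing in for the Berkeley teams' machine learning models; the drifting machine parameter, the beam property, and all numbers are assumptions.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, my - a * mx


def rmse(pred, actual):
    """Root-mean-square prediction error."""
    return (sum((p - t) ** 2 for p, t in zip(pred, actual)) / len(actual)) ** 0.5


rng = random.Random(0)
# Synthetic shots: x is a hypothetical measured machine parameter that drifts
# from shot to shot; y is a beam property that depends on it, plus jitter
# the model cannot know about.
xs = [rng.uniform(0.0, 1.0) for _ in range(400)]
ys = [2.0 * x + 1.0 + rng.gauss(0.0, 0.05) for x in xs]
train_x, test_x = xs[:300], xs[300:]
train_y, test_y = ys[:300], ys[300:]

a, b = fit_line(train_x, train_y)
pred = [a * x + b for x in test_x]
error = rmse(pred, test_y)
fluctuation = statistics.pstdev(test_y)  # how much the property varies shot to shot
print(f"prediction RMSE={error:.3f}, fluctuation={fluctuation:.3f}")
print("prediction beats fluctuation" if error < fluctuation else "not yet useful")
```

Evaluating on held-out shots rather than the training data keeps the comparison honest: a model can always look better than the fluctuations on data it has already seen.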

Over at Oak Ridge National Lab, meanwhile, an artificial intelligence-powered platform is allowing researchers to perform hyperspectral computed tomography.

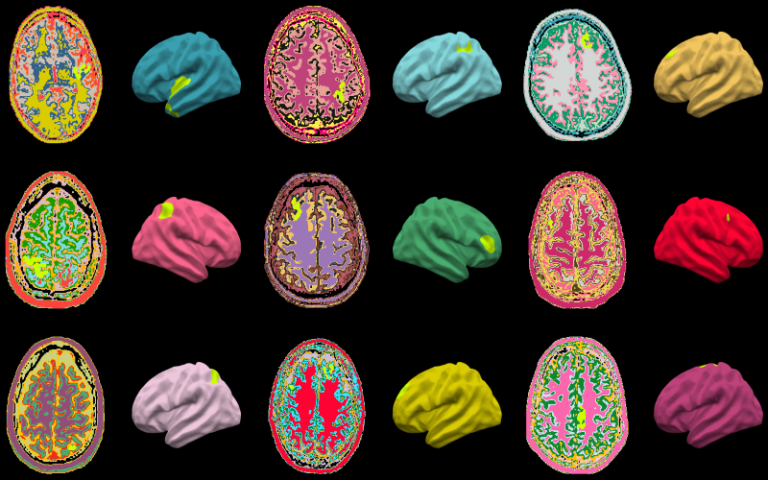

Machine learning-based image analysis has also found a new application in neurology: researchers at University College London have trained a model to detect early signs of neurological disorders.

Brain scans used to train the model.

One of the most common causes of drug-resistant seizures is focal cortical dysplasia (FCD), a region of the brain that has developed abnormally but, for whatever reason, doesn't appear abnormal on magnetic resonance imaging (MRI). The researchers trained a model to detect it using thousands of examples of healthy and FCD-affected brain regions.
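Training on labelled examples of healthy and affected regions is ordinary supervised classification. As a minimal sketch, assuming each brain region is summarised by just two numeric features, a logistic regression trained by gradient descent looks like this. The features, the synthetic data, and the function names are hypothetical; this is not the UCL team's model.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.5, epochs=500):
    """Batch gradient descent on the log-loss of a logistic regression model."""
    w = [0.0] * len(X[0])
    b = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Prediction error for this region: predicted probability minus label.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x, threshold=0.5):
    """Return 1 (flag as possible FCD) or 0 (healthy)."""
    return int(sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= threshold)


rng = random.Random(42)
# Synthetic, hypothetical per-region features (e.g. two standardised
# MRI-derived measurements); label 1 = FCD-affected, 0 = healthy.
healthy = [[rng.gauss(0.0, 0.5), rng.gauss(0.0, 0.5)] for _ in range(200)]
fcd = [[rng.gauss(2.0, 0.5), rng.gauss(2.0, 0.5)] for _ in range(200)]
X = healthy + fcd
y = [0] * len(healthy) + [1] * len(fcd)

w, b = train_logistic(X, y)
accuracy = sum(predict(w, b, xi) == yi for xi, yi in zip(X, y)) / len(X)
print(f"training accuracy: {accuracy:.2%}")
```

On real scans the two classes overlap far more than in this toy data, so the accuracy printed here says nothing about how the UCL model performs.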

The model detected two-thirds of the FCDs it was shown, and the cases it was shown included 178 in which doctors had been unable to locate an FCD. Naturally, the final say goes to the specialists, but a computer hinting that something might be wrong can sometimes be all it takes to prompt a closer look.

The team's aim was to create an artificial intelligence that could help doctors make better decisions, so it was important to show doctors how the MELD algorithm made its predictions.