If you have used a smart voice assistant, you know the technology is getting smarter every day. It can wait on hold for you, speak in a gender-neutral voice, and even let you hear your grandma's voice. At our Robotics event last month, we explored how robots are evolving, and there are a number of reasons why the gap between the two has been so large. Last week we went to Mountain View to see how that is about to change.

Teaching robots to do repetitive tasks in controlled spaces isn't easy, but it is largely a solved problem. Rivian's recent factory tour was a good reminder of that.

General-purpose robots that can solve lots of different tasks based on voice commands, in places where humans live and work, are a lot harder. Everyone's favorite robot vacuum is programmed to avoid touching anything other than the floor, and even that leaves some owners unhappy.

Table tennis is a game where a robot can learn from its mistakes. Here, a person shows a robot what the game is.

You might be wondering: why ping-pong? Working at the intersection of fast, precise and adaptive is one of the biggest challenges in the field. Being fast and precise without being adaptive is not a problem; in an industrial setting, that's fine. Being all three at once is the hard part, and ping-pong is a good example of the problem: it requires fast, precise movement that adapts to every shot. "You can learn from people playing; it's a skill that people develop by practicing." It isn't a skill where you can read the rules and become a champion in a day. You have to work on it.

Speed and precision are one thing, but the real problem the robotics labs are tackling is the intersection between humans and machines. Robots' understanding of natural language is getting better. You might ask a human, "Could you grab me a drink from the counter?" A lot of knowledge and understanding is wrapped into that single question. It could be a request for the robot to stop what it is doing and fetch the drink, or it could just be a figure of speech. Taken literally, the correct answer to "Could you grab me a drink?" would be the robot saying "yes": it is able to grab a drink. You didn't actually ask the robot to do it. And if we're being extra pedantic, you didn't tell the robot to bring the drink to you.

Accurately working out what a human actually wants, rather than simply doing what they say, is one of the problems the team's natural language processing work is tackling.

Identifying what a robot is capable of is the next challenge. Say you want the robot to grab a bottle of cleaner from the top of the fridge, where it is stored out of the way of kids. The robot can't reach that high. The breakthrough is in working out what the robot can do with a reasonable degree of success. That could include simple tasks such as moving a meter forward, or more advanced tasks such as finding a can of Coke in the kitchen, as in a request like: "There's a can of Coke on the floor. Could you bring me a drink?"

In PaLM-SayCan, the knowledge contained in a language model (here, Google's PaLM) is used to determine and score actions that are useful for a high-level instruction. An affordance function provides real-world grounding by determining which actions are possible in a given environment.
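The scoring described above can be sketched in a few lines of Python. This is a toy illustration, not Google's code: it assumes the language model's usefulness estimate and the affordance function's success estimate are already available as probabilities for each candidate skill, and it combines them by multiplication, which is how we understand the published SayCan approach. The `Candidate` class, the skill names and all of the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    skill: str
    usefulness: float  # "Say": hypothetical LM estimate that this skill helps with the instruction
    affordance: float  # "Can": hypothetical estimate that the robot succeeds at this skill right now

    @property
    def score(self) -> float:
        # Useful AND feasible: a skill that is useful but impossible, or easy
        # but useless, ends up with a low combined score.
        return self.usefulness * self.affordance


def pick_skill(candidates: list[Candidate]) -> Candidate:
    """Return the candidate with the highest combined score."""
    return max(candidates, key=lambda c: c.score)


# Made-up numbers for the instruction "I spilled my drink, can you help?"
candidates = [
    Candidate("find a sponge", usefulness=0.50, affordance=0.90),
    Candidate("find a vacuum cleaner", usefulness=0.30, affordance=0.05),
    Candidate("go to the drawers", usefulness=0.05, affordance=0.95),
    Candidate("pick up the cleaner on top of the fridge", usefulness=0.15, affordance=0.01),
]

for c in sorted(candidates, key=lambda c: c.score, reverse=True):
    print(f"{c.skill:42s} {c.score:.3f}")

best = pick_skill(candidates)
print("chosen:", best.skill)
```

Multiplying the two scores means a skill has to be both useful and feasible to win: in this toy setup, the vacuum cleaner looks somewhat useful to the language model but scores poorly on affordance, while going to the drawers is easy but unhelpful.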

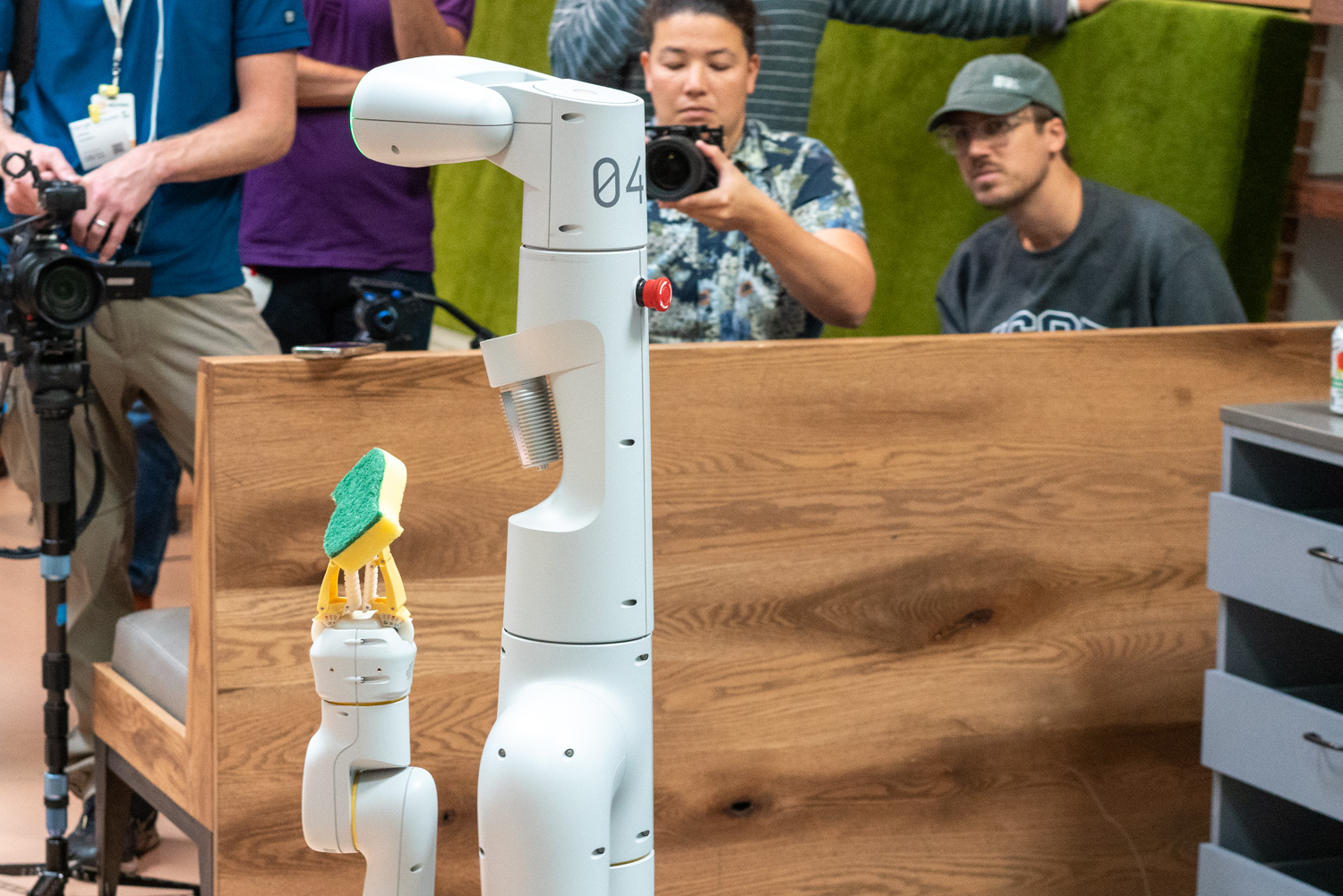

Everyday Robots' machines at work in Google's robotics lab. The chaps are taking some well-deserved R&R and learning how to plug themselves in to recharge.

To carry out a command like that, the robot has to break it down into individual steps.

Even relatively simple-sounding instructions can involve a lot of steps, logic and decisions along the way. Which drink should the robot fetch: the healthiest one available, or something else? Should it get the drink first, so the human can have their thirst quenched sooner, and clean up the mess afterward?
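The step-by-step decomposition can be sketched as a greedy loop: score every skill, append the best one to the plan, and repeat with the updated history until the best choice is "done". This mirrors how we understand SayCan's published planning loop, but the toy usefulness table, the affordance values and the `plan` function below are hypothetical stand-ins for the real language model and affordance functions.

```python
from typing import Callable

# Scores a skill given the steps already chosen (hypothetical interface).
Scorer = Callable[[tuple[str, ...], str], float]


def plan(skills: list[str], usefulness: Scorer, affordance: dict[str, float],
         max_steps: int = 10) -> list[str]:
    """Greedily build a plan one step at a time.

    At each step every skill is scored as usefulness (given the history so far)
    times affordance; the best skill is appended. Stops at "done" or max_steps.
    """
    history: tuple[str, ...] = ()
    for _ in range(max_steps):
        best = max(skills, key=lambda s: usefulness(history, s) * affordance[s])
        if best == "done":
            break
        history += (best,)
    return list(history)


# Hypothetical stand-in for the language model: how useful each skill looks
# after a given sequence of previous steps. Unlisted skills get a small score.
TOY_LM: dict[tuple[str, ...], dict[str, float]] = {
    (): {"find a drink": 0.6, "find a sponge": 0.3},
    ("find a drink",): {"bring it to the person": 0.8},
    ("find a drink", "bring it to the person"): {"find a sponge": 0.7, "done": 0.2},
    ("find a drink", "bring it to the person", "find a sponge"): {"wipe the spill": 0.8},
    ("find a drink", "bring it to the person", "find a sponge", "wipe the spill"): {"done": 0.9},
}


def toy_usefulness(history: tuple[str, ...], skill: str) -> float:
    return TOY_LM.get(history, {}).get(skill, 0.01)


SKILLS = ["find a drink", "bring it to the person", "find a sponge", "wipe the spill", "done"]
# Hypothetical success probabilities for each skill.
AFFORDANCE = {"find a drink": 0.8, "bring it to the person": 0.9,
              "find a sponge": 0.9, "wipe the spill": 0.7, "done": 1.0}

steps = plan(SKILLS, toy_usefulness, AFFORDANCE)
print(steps)
```

With these made-up numbers the robot fetches the drink first and cleans up afterward; changing the table changes the order, which is exactly the kind of decision the language model is being asked to make.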

The picture shows how the robot might evaluate a query and figure out what to do next. Here, the robot decided that it should find a sponge, and that it has a high likelihood of success at finding sponges. The robot is also good at going to the drawers, but that action wouldn't be helpful in this case. Image credit: Google.

The most important thing to teach the robot is what it can and can't do. I saw a lot of robots, both from Everyday Robots and more purpose-built machines, learning to stack blocks, open fridge doors and be polite while operating in the same space as humans.

It was a nice catch.

Language models aren't always grounded in the physical world. Despite being trained on huge libraries of text, they don't interact with their environments, nor do they have to worry about causing problems. It's a bit like a maps app plotting a 45-day hike and a three-day swim across a lake when you ask for directions. For a robot acting in the real world, silly mistakes have consequences.

When prompted with "I spilled my drink, can you help?", the language model GPT-2 responds with "You could use a vacuum cleaner." That sounds plausible, since a vacuum cleaner is a good choice for a lot of messes. But vacuums aren't great at spilled drinks, and water and electronics don't mix, so you could end up with a broken vacuum or even an appliance fire.

PaLM-SayCan-enabled robots are placed in a kitchen setting and trained to be helpful there. When deciding what to do, the robot weighs two questions: how helpful is this action, and how likely am I to succeed at it? Robots are getting smarter by the day.

The robot came back to life.

Ability isn't all-or-nothing, either. Balancing three golf balls on top of each other is difficult for anyone. It is almost impossible for a robot to open a drawer without being shown how drawers work, but once it has been trained and allowed to experiment with how best to open one, it can act with a much higher degree of confidence. Likewise, an untrained robot may not be able to grab a bag of chips from a drawer, but give it instructions and a few days to practice, and its chances of success go up a lot.


As the robot tries things out, each attempt is scored, and that data shapes its sense of what it can do. Sometimes it turns out to be easier for a robot to do a task in a surprising way than in the traditional one.
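The idea that practice raises a robot's chances can be illustrated with a simple running success estimate per skill. This is a deliberately simplified stand-in: as we understand it, SayCan's affordances come from learned value functions rather than a tally like this, and the `SkillRecord` class, the add-one prior and the practice log below are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SkillRecord:
    """Hypothetical running tally of practice attempts at one skill."""
    successes: int = 0
    attempts: int = 0

    def record(self, succeeded: bool) -> None:
        # Score one attempt and add it to the tally.
        self.attempts += 1
        if succeeded:
            self.successes += 1

    @property
    def success_estimate(self) -> float:
        # Laplace (add-one) smoothing: with no data the estimate is 0.5 (an
        # arbitrary, uninformed prior); it moves toward the observed success
        # rate as attempts accumulate.
        return (self.successes + 1) / (self.attempts + 2)


open_drawer = SkillRecord()
print(f"before practice: {open_drawer.success_estimate:.2f}")

# Made-up practice log: early attempts mostly fail, later ones mostly succeed.
practice = [False, False, True, False, True, True, True, True, True, True]
for outcome in practice:
    open_drawer.record(outcome)

print(f"after {open_drawer.attempts} attempts: {open_drawer.success_estimate:.2f}")
```

An estimate like this could feed the affordance side of the scoring: the more a skill succeeds in practice, the more likely the planner is to pick it.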

Because the system is built on a language model, the robot can also understand commands in a number of different languages. In the kitchen, the team showed that the neural networks used to train the robots are flexible enough to handle this.

Most robots that touch, open, move and clean things aren't invited to operate this close to humans, but the researchers seemed very at home with the robots operating autonomously within inches of their non-armored human bodies.

None of these robots or technologies is currently available for commercial use.

"It is completely research right now. It is not ready for deployment in a commercial environment," says Vanhoucke. "We love to work on things that don't work. That is the definition of research, and we are going to keep pushing. We like to work on things that don't need to scale, because it helps us understand how things scale with more data and more compute. You can see a trend for the future."

Even in the relatively simple demos shown in Mountain View last week, it is clear that when natural language processing and robotics come together, both win.