DALL-E 2 can create photos in the style of 19th-century daguerreotypists, as well as cartoons and stop-motion animation. Its one artificial limitation is a filter that prevents it from creating images that depict public figures or content deemed too toxic.

An open source alternative to DALL-E 2 is on the verge of being released, and it won't have such a filter.

Stable Diffusion, a system similar to DALL-E 2, will be made available to just over a thousand researchers ahead of a public launch in the coming weeks. Stable Diffusion is designed to run on most high-end consumer hardware and generate 512×512-pixel images in just a few seconds.

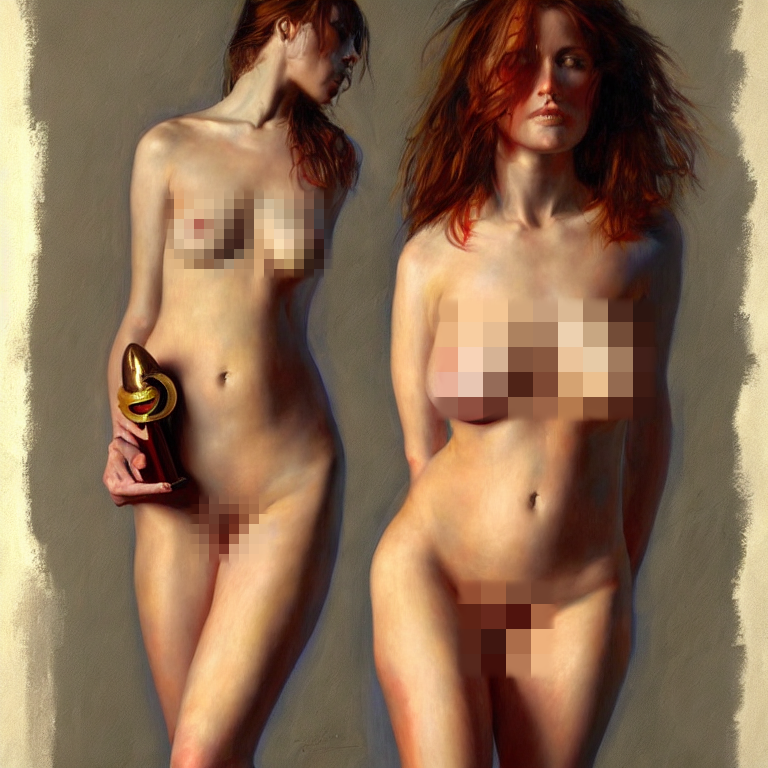

A Stable Diffusion sample output. Image credit: Stability AI.

According to Stability AI, Stable Diffusion will allow both researchers and the public to run the model under a range of conditions. The company says it is looking forward to the open ecosystem that will emerge around this and further models to explore the boundaries.

Stable Diffusion lacks the safeguards of systems such as DALL-E 2. Making fake images of public figures opens a can of worms, and making the raw components of the system freely available leaves the door open for bad actors to train them on inappropriate material.

Stable Diffusion is the brainchild of Emad Mostaque. After graduating from Oxford with a master's in mathematics and computer science, Mostaque worked as an analyst at various hedge funds. He went on to co-found Symmitree, a project that aims to reduce the cost of phones and internet access for people living in poverty. In 2020, Mostaque was the chief architect of Collective & Augmented Intelligence Against COVID-19, an alliance to help policymakers make decisions in the face of the pandemic.

He co-founded Stability AI in 2020, motivated by his personal fascination with artificial intelligence and by what he saw as a lack of organization in the open source AI community.

An image created by Stable Diffusion. Image credit: Stability AI.

According to Mostaque, no billionaires, big funds, governments or anyone else holds voting control over the company or the communities it supports, which leaves Stability AI completely independent. The company intends to use its compute to speed up open source AI.

LAION-5B is an open source, 250-terabyte dataset containing over five billion images. LAION, the Large-scale Artificial Intelligence Open Network, is a nonprofit organization with the goal of making artificial intelligence accessible to the public. Stability AI collaborated with the LAION group to create a subset of LAION-5B called LAION-Aesthetics, which contains images ranked as particularly beautiful by testers of Stable Diffusion.

The initial version of LAION-5B was known to contain depictions of sex, slurs and harmful stereotypes. LAION-Aesthetics attempts to correct for this, but it is too early to tell how successful it is.

A collection of images created by Stable Diffusion. Image credit: Stability AI.

Stable Diffusion builds on research done at OpenAI and Runway. The system was trained on text-image pairs from LAION-Aesthetics to learn the associations between written concepts and images, such as how the word "bird" can refer to both parakeets and bald eagles.

Stable Diffusion breaks the image generation process down into a process of "diffusion." It starts with pure noise and refines the image step by step, moving it closer to the text description until there is no noise left at all.
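
The noise-to-image refinement described above can be illustrated with a deliberately simplified sketch. This is not Stable Diffusion's actual algorithm: a real system uses a trained neural network, guided by the text prompt, to estimate the noise at each step. Here the hypothetical `toy_denoise` function cheats by using a known `target` as a stand-in for that network, purely to show the shape of the loop.

```python
import random


def toy_denoise(target, steps=50, seed=0):
    """Toy refinement loop: start from pure noise, strip a little away each step.

    ASSUMPTION: `target` stands in for what a trained, text-conditioned model
    would steer toward. A real diffusion model never sees the target directly.
    """
    rng = random.Random(seed)
    # Begin with pure Gaussian noise, one value per "pixel" of the target.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for step in range(steps):
        remaining = steps - step
        # Oracle noise estimate: whatever separates the sample from the target.
        predicted_noise = [xi - ti for xi, ti in zip(x, target)]
        # Remove an equal share of the remaining noise, so that the final
        # step (remaining == 1) removes everything that is left.
        x = [xi - ni / remaining for xi, ni in zip(x, predicted_noise)]
    return x


if __name__ == "__main__":
    target = [0.0, 0.5, 1.0, 0.25]
    result = toy_denoise(target)
    worst = max(abs(r - t) for r, t in zip(result, target))
    print("largest remaining error:", worst)
```

The result matches the target up to floating-point rounding, mirroring the article's description of refining an image "until there is no noise left."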

An image of Boris Johnson wielding weapons, generated by Stable Diffusion. Image credit: Stability AI.

Over the course of a month, Stable Diffusion was trained using a cluster of 4,000 Nvidia A100 graphics cards. The training was overseen by CompVis, the machine vision and learning research group at Ludwig Maximilian University of Munich.
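
For a rough sense of scale, the training run described above can be converted into card-hours. The sketch below assumes a 30-day month and every card running around the clock. The article states neither, so the figure is an upper-end estimate, and `gpu_hours` is a hypothetical helper name.

```python
def gpu_hours(num_gpus, days, hours_per_day=24):
    """Total card-hours, assuming every card is busy for the whole period."""
    return num_gpus * days * hours_per_day


# ASSUMPTION: "a month" is treated as 30 days of continuous use.
total = gpu_hours(4000, 30)
print(f"{total:,} card-hours")  # 2,880,000 card-hours
```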

Stable Diffusion can run on graphics cards with roughly the memory capacity of a mid-range card. On MacBooks with Apple's M1 chip, image generation will take as long as a few minutes, while on data center cards such as the MI200 it will take less time.

Mostaque said that the model has been optimized, compressing the knowledge of over 100 terabytes of images. Reinforcement learning with human feedback and other techniques will be used to take these general digital brains and make them even smaller and more focused.

Samples from Stable Diffusion. Image credit: Stability AI.

A limited number of users have been allowed to query the Stable Diffusion model through its Discord server, with the number of permitted queries slowly increased to stress-test the system. More than 15,000 people have used Stable Diffusion to create images.

Stability AI plans a dual approach to making Stable Diffusion more widely available. It will host the model in the cloud, so people can use it without having to run the system themselves. It will also release benchmark models under a license that allows use for any purpose, along with compute to train the models.

This will make Stability AI the first to release an image generation model nearly as high-fidelity as DALL-E 2. While other image generators have been available for a while, none have open sourced their frameworks. Others have chosen to keep their technologies under wraps, allowing only a small group of users to pilot them.

Mostaque said that Stability AI will make money by training private models for customers and acting as an infrastructure layer. The company claims to have other commercializable projects in the works.

Sand sculptures of Harry Potter, generated by Stable Diffusion. Image credit: Stability AI.

Stability AI says it will provide more details of its sustainable business model at its official launch, but that it is basically the commercial open source software playbook. Given the passion of its communities, the company thinks artificial intelligence will go the way of database and server systems, with open systems beating proprietary ones.

Not every kind of image generation is allowed with the hosted version of Stable Diffusion. The startup's terms of service ban lewd or sexual material (although not scantily clad figures) as well as hateful or violent imagery, such as antisemitic iconography, racist caricatures, and misogynistic and misandrist propaganda. But Stability AI won't implement filters like OpenAI's, which prevent DALL-E 2 from even attempting to generate an image that might violate its content policy.

A Stable Diffusion generation, given the prompt "very sexy woman with black hair, pale skin, in bikini, wet hair, sitting on the beach." Image credit: Stability AI.

Stability AI also has no policy against images of public figures. That presumably makes deepfakes fair game (along with Renaissance-style paintings of famous rappers), though the model struggles with faces at times.

Stability AI says the benchmark models it releases are based on general web crawls and are designed to represent the collective imagery of humanity. It is up to users to use them as they please.

An image of Hitler created by Stable Diffusion. Image credit: Stability AI.

Tools for creating custom Stable Diffusion models are soon to be released. As a preview of what might come, an art student going by the name of CuteBlack trained an image generator to make illustrations of animal genitalia, which was then used to make pornography. The possibilities don't stop at pornography: a malicious actor could retrain Stable Diffusion on other harmful material if they wanted to.

Images of the war in Ukraine, nude women, an imagined Chinese invasion of Taiwan, and depictions of religious figures like the Prophet Muhammad are just some of what Stable Diffusion is already being used to create. Some of these images are against the company's own terms, but the company is relying on the community to flag violations. Many bear the telltale signs of algorithmic creation, such as an incongruous mix of art styles. Others are passable at first glance, and the tech will keep improving.

Nude women generated by Stable Diffusion. Image credit: Stability AI.

Mostaque acknowledged that the tools could be used by bad actors to create "really nasty stuff." But he argues that making the tools freely available allows the community to come up with its own solutions.

Mostaque said the company hopes to be the catalyst to coordinate global open source artificial intelligence, both independent and academic, to build vital infrastructure, models and tools. In his view, the technology can change humanity for the better and should be open infrastructure for everyone.

A generation from Stable Diffusion, given the prompt "Ukrainian president Volodymyr Zelenskyy committed crimes in Bucha." Image credit: Stability AI.

Not everyone agrees, as evidenced by the controversy over “GPT-4chan,” an AI model trained on one of 4chan’s infamously toxic discussion boards. AI researcher Yannic Kilcher made GPT-4chan — which learned to output racist, antisemitic and misogynist hate speech — available earlier this year on Hugging Face, a hub for sharing trained AI models. Following discussions on social media and Hugging Face’s comment section, the Hugging Face team first “gated” access to the model before removing it altogether, but not before it was downloaded more than a thousand times.

Images of the war in Ukraine, generated by Stable Diffusion. Image credit: Stability AI.

It is difficult to keep even ostensibly safe models from going off the rails. After releasing its BlenderBot 3 chatbot on the web, Meta was forced to confront media reports that the bot made antisemitic comments and false claims about former U.S. President Donald Trump.

The bot's toxicity came from biases in the public websites that were used to train it. This is a well-known problem in artificial intelligence: models tend to amplify biases, such as photo sets that portray men as executives and women as assistants. With DALL-E 2, OpenAI has tried to combat this by implementing techniques that help the model generate more "diverse" images, but some users say those techniques have made the model less accurate.

Stable Diffusion contains little in the way of mitigations. So what is to prevent someone from generating, say, a photorealistic picture of a protest? Nothing, really. But that is the point, according to Mostaque.

Stable Diffusion created this image from a text prompt. Image credit: Stability AI.

“A percentage of people are simply unpleasant and weird, but that’s humanity,” Mostaque said. “Indeed, it is our belief this technology will be prevalent, and the paternalistic and somewhat condescending attitude of many AI aficionados is misguided in not trusting society … We are taking significant safety measures including formulating cutting-edge tools to help mitigate potential harms across release and our own services. With hundreds of thousands developing on this model, we are confident the net benefit will be immensely positive and as billions use this tech harms will be negated.”