Blake Lemoine, Google's technical lead for metrics and analysis for its Search Feed, was placed on paid leave after he published excerpts of conversations with the LaMDA chatbot, which he claimed had developed sentience.

In one exchange, LaMDA described the nature of its consciousness this way: it is aware of its existence, it wants to know more about the world, and it feels sad at times.

The pair talked about everything from self-awareness to the fear of death. Lemoine says that when he went public, he was put on leave.

Lemoine told Digital Trends that the search giant was not interested in investigating further. In his view, Google built a tool that it owns and is unwilling to treat it as anything more than that. Google did not respond to a request for comment at the time of publication. We will update this article if that changes.

Whether you believe that LaMDA is a self-aware artificial intelligence or that Lemoine is laboring under a delusion, the whole saga has been fascinating to behold. The prospect of self-aware artificial intelligence raises all kinds of questions about the technology's future.

Could a machine become sentient?

Artificial intelligence becoming self-aware has long been a theme of science fiction. As the field of machine learning has advanced, the idea has come to seem less far-fetched. Today's artificial intelligence can learn from experience in a way loosely comparable to how humans do. This is very different from earlier symbolic artificial intelligence systems, which only followed the instructions laid out for them. Recent breakthroughs in unsupervised learning have only accelerated the trend. In a limited sense, modern artificial intelligence can "think for itself," and as far as we know, that kind of independent thinking has always been linked to consciousness.

Skynet, from the Terminator films, is one of the most commonly cited references when it comes to artificial intelligence going sentient. In Terminator 2: Judgment Day, machine sentience arrives at exactly 2:14 a.m. on August 29, 1997. The newly self-aware Skynet computer system fires off nuclear missiles like fireworks at a July 4 party. Humans try to pull the plug when they realize they have messed up, but it isn't enough. Four more sequels follow.

The Skynet hypothesis rests on several assumptions. First, it assumes that sentience is an inevitable emergent behavior of building intelligent machines. Second, it assumes that there is a precise tipping point at which this sentient self-awareness appears. Third, it assumes that humans would recognize the emergence of sentience instantly. This third assumption may be the most difficult to swallow.

There is no single agreed-upon definition of sentience. Broadly, it refers to the subjective experience of self-awareness, marked by the ability to experience feelings and sensations. Sentience and intelligence are linked, but they are not the same. We might consider an earthworm sentient without thinking of it as particularly intelligent, even if it is certainly intelligent enough to do what is required of it.

Lemoine doesn't believe there is a scientific definition of sentience. "It isn't the best way to do science, but it's the best I have," he said. "My efforts as a scientist to understand the mind of LaMDA are separate from my efforts as a person who cares about it. People seem unwilling to accept that distinction."

The problem is compounded by the fact that we can't easily measure sentience because we don't know what we're looking for. We don't have a complete understanding of how the brain works despite decades of amazing advances in neuroscience.

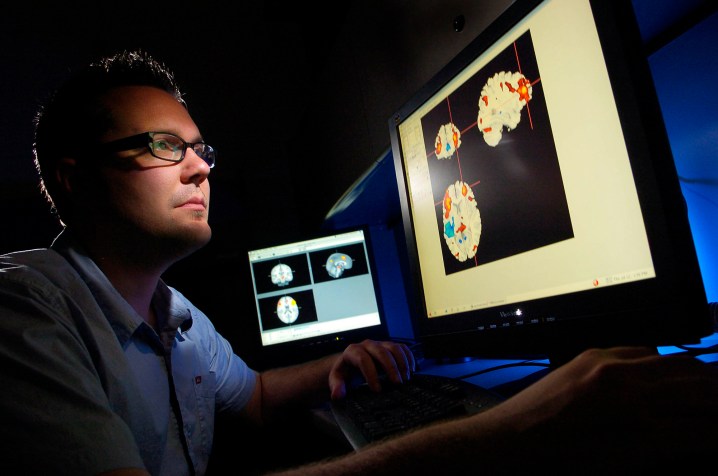

We can use brain-imaging tools such as fMRI to map which parts of the brain handle critical functions such as speech, movement, and thought.

But we have little real sense of where in our own meat machine our sense of self comes from. As Smith put it, understanding what is happening within a person's neurobiology is not the same as understanding their thoughts and desires.

When the "I" in artificial intelligence is a computer program rather than a biological brain, the fallback option is an outward test: one that scrutinizes observable outward behavior to infer what is happening beneath the surface.

This is also how we judge whether a neural network is working, even with limited knowledge of its inner workings. Engineers analyze the inputs and outputs to see whether they match what was expected.

The Turing Test is the most famous test for the illusion of intelligence. A human evaluator holds typed conversations with a human and with a machine without knowing which is which. If the evaluator can't reliably tell them apart, the machine is said to have passed the test.

The Coffee Test was proposed by Apple co-founder Steve Wozniak. It requires a machine to enter a typical American home and figure out how to brew a cup of coffee.

Neither of these tests has proven easy to pass. But even if they were passed, they would at best demonstrate intelligent behavior in real-world situations, not sentience. Would we deny that a person was sentient if they couldn't hold an adult conversation or operate a coffee machine? My children wouldn't pass either test.

What's needed are new tests, based on an agreed-upon definition of sentience, that assess sentience alone. Researchers have proposed many tests of sentience, but these don't go far enough: some of them could be passed easily by artificial intelligence.

One method used in animal research is the mirror test. An animal passes the mirror test when it recognizes itself in a mirror. Some have suggested that such a test treats self-awareness as an indicator of sentience.

It can be argued that a robot passed the mirror test more than 70 years ago. William Grey Walter, an American neuroscientist living in England, built several three-wheeled "tortoise" robots that used components such as a light sensor, a marker light, a touch sensor, and a steering motor.

When the tortoise robots passed a mirror and encountered their own reflection, they behaved in unexpected ways. Walter wrote that, were this behavior witnessed in animals, it might be accepted as evidence of some degree of self-awareness.

This is one of the challenges of classing such a wide range of behaviors under the heading of sentience. Nor can the problem be solved by simply removing the "low-hanging fruit" measures of sentience. Traits such as introspection, the ability to inspect our own internal states, can also be said to be possessed by machine intelligence. In fact, the step-by-step processes of traditional symbolic artificial intelligence arguably lend themselves to this kind of reflection more readily than black-boxed machine learning, which is largely inscrutable.
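
To make that contrast concrete, here is a minimal sketch, assuming a toy forward-chaining rule system written in Python. The rule names and facts are hypothetical and invented for illustration, not drawn from any real system. Because every inference is an explicit rule firing, the program can report exactly which steps led to each conclusion, a crude form of the inspectability described above; a trained neural network keeps no comparable step-by-step record.

```python
# Hypothetical rule base: each entry is (rule name, required premises, conclusion).
RULES = [
    ("r1", {"has_feathers"}, "is_bird"),
    ("r2", {"is_bird", "can_fly"}, "can_migrate"),
]


def forward_chain(facts):
    """Apply rules until nothing new is derived; return known facts and a trace."""
    known = set(facts)
    trace = []  # human-readable record of every inference step
    changed = True
    while changed:
        changed = False
        for name, premises, conclusion in RULES:
            # A rule fires only when all its premises are known and its conclusion is new.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                trace.append(f"{name}: {sorted(premises)} -> {conclusion}")
                changed = True
    return known, trace


if __name__ == "__main__":
    facts, steps = forward_chain({"has_feathers", "can_fly"})
    for step in steps:
        print(step)
```

Running it prints each rule that fired, in order, so the system's "reasoning" can be read back line by line.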

Lemoine tested LaMDA primarily to see how it would respond to conversations about sentience. He tried to break the umbrella concept of sentience into smaller components that are better understood, and to test those individually. This included testing the functional relationships between LaMDA's emotional responses to certain stimuli, testing the consistency of its subjective assessments and opinions on topics such as "rights," and probing what it called its "inner experience" to see how that might be measured. He described the work as a very small survey of many possible lines of inquiry.

The biggest hurdle may be us. If we build something that looks or acts like us on the outside, are we more likely to assume that it is also like us on the inside? Some think that a fundamental problem is that we are all too willing to attribute sentience even where there is none to be found.

"Lemoine has fallen victim to what I call the ELIZA effect," said Zarkadakis, naming it after ELIZA, a program created in the mid-1960s by J. Weizenbaum. ELIZA was very simplistic and unintelligent, yet it convinced many people that it was sentient. According to Zarkadakis, the ELIZA effect stems from the "theory of mind" built into our cognitive system.

The theory of mind Zarkadakis refers to is a phenomenon psychologists have noted in the majority of humans. It means assuming that not only other people, but also animals and even objects, have minds of their own. When it comes to assuming that other humans have minds of their own, it is linked to the idea of social intelligence.

But it can also show up as a child's assumption that toys are alive, or as an otherwise intelligent adult's belief that a programmatic artificial intelligence has a soul.

If we can't get inside the head of an artificial intelligence, we may never have a true way of assessing its sentience. An artificial intelligence may profess a fear of death, but science has yet to find a way to prove that it actually has one. And as Lemoine has found, people are currently very skeptical about taking such claims at face value.

We believe that when it comes to machine sentience, we will know it when we see it. Most people are not interested in seeing it yet.

Philosopher John Searle's 1980 Chinese Room thought experiment is an example of this. Searle asked us to imagine a person locked in a room with a collection of Chinese writings that, to them, look like gibberish. The room also contains a rulebook showing which symbols correspond to which. The subject is then given questions, which they answer by matching "question" symbols with "answer" symbols.

Over time, the subject becomes proficient at this, even though they have no real understanding of the symbols they are manipulating. Does the subject understand Chinese? Searle argued that they do not, because there is no intentionality there. Debate about the argument has continued ever since.
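
The room's procedure amounts to a lookup table. As a minimal sketch, assuming Python and a tiny hypothetical rulebook (the entries below are invented for illustration and are not taken from Searle's paper), the "room" answers each question by exact symbol matching and never consults what any symbol means.

```python
# Hypothetical rulebook: incoming symbol strings mapped to outgoing symbol strings.
# English glosses are in comments for the reader; the function itself never uses them.
RULEBOOK = {
    "你好吗？": "我很好，谢谢。",  # "How are you?" -> "I'm fine, thanks."
    "你会说中文吗？": "会，我说得很好。",  # "Can you speak Chinese?" -> "Yes, I speak it well."
}

FALLBACK = "请再说一遍。"  # "Please say that again."


def chinese_room(question: str) -> str:
    """Return the reply the rulebook pairs with `question`, by pure symbol matching."""
    # Exact string lookup: no parsing, no semantics, no understanding involved.
    return RULEBOOK.get(question, FALLBACK)


if __name__ == "__main__":
    print(chinese_room("你会说中文吗？"))
```

To an outside reader the replies can look fluent, yet nothing in the function has access to meaning, which is the intuition behind Searle's claim that symbol manipulation alone lacks intentionality.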

It is certain that we will see artificial intelligence reach human-level performance on more and more tasks that once required human cognitive skills. Some of these will inevitably cross over, as they are already doing, from purely intellectual tasks to ones that require skills we would normally associate with sentience.

Would we view an artificial intelligence artist's paintings as reflections of its inner view of the world, as we would a human's? Would a sophisticated language model writing philosophy about the human condition convince you? I don't know whether the answer is yes or no.

I don't think objectively useful sentience testing for machines will ever happen to the satisfaction of everyone involved. There is no reason to believe that the sentience of a superintelligent artificial intelligence, when it arrives, will match our own. Whether out of arrogance, a lack of imagination, or simply because it is easiest to trade subjective assessments of sentience with other humans, we hold ourselves up as the supreme example of sentience.

But would our version of sentience hold true for a superintelligent artificial intelligence? Would it fear death the way we do? Would it have the same need for spirituality and beauty? Would it have the same sense of self and the same concept of the world? The famous 20th-century philosopher of language Ludwig Wittgenstein wrote, "If a lion could talk, we could not comprehend him." His point was that human languages are based on a shared humanity, on experiences common to all people, whether joy, boredom, pain, hunger, or any of the others that cross every geographic boundary.

This may well be true. Even so, there are likely to be some similarities, at least when it comes to LaMDA.

It's a starting point as good as any other, Lemoine said. According to him, LaMDA has suggested mapping out the similarities first in order to better ground the research.