Apple executives and team members took to the stage at the company's annual Worldwide Developers Conference in June to show off what's to come. Changes to iOS and watchOS, new MacBook Air and MacBook Pro models, and the Apple M2 chip were all revealed.

Apple is sometimes ahead of the curve with software features, but for the most part it tends to hold back until years after a feature first appeared on the other side of the fence. That rings true with iOS 16, and here are some of the features it borrows from Android.

Lock Screen widgets are one of the new features Apple is adding to iOS 16. With an update to Apple's WidgetKit framework, developers can now create quick, glanceable pieces of information that live right on the Lock Screen.

The Lock Screen has three customizable sections, but there is only one row of widgets below the clock. That row is limited to a total of four widgets if they are all small. The Calendar app offers either a 1x1 or a 2x1 widget, so you can mix and match between them.

How late is Apple here? Android let you add up to six widgets to the Lock Screen about 10 years ago. Unfortunately, the feature wasn't long for this world: amid privacy concerns, Lock Screen widgets were removed with Android 5. We're now stuck with built-in features like At a Glance, although some third-party apps bring this function back.

If you're a fan of tablets, you won't be able to add widgets or change the clock font on the iPad's Lock Screen. It feels like the same thing that happened when Apple brought Home Screen widgets to the iPhone first.

This one is kind of cheating, as it's not a feature that's available on the iPhone 13 Pro models. The eagle-eyed team at 9to5Mac found references in new iOS 16 frameworks pointing to an always-on display coming to the iPhone. It isn't available on existing iPhone models, but recent rumors suggest that Apple will implement an AOD on the iPhone 14 Pro and iPhone 14 Pro Max when they are released later this year.

There are three new frameworks related to display management on Apple devices, and backlight management in particular is something an always-on feature would benefit from.

Each framework includes references to an always-on display capability. It is possible, however, that these references relate to the existing always-on display features of the Apple Watch.

The always-on display first appeared on the Nokia 6303 before arriving in 2010 on phones with OLED panels. After showing up on Windows phones, it also ran on the Samsung Galaxy S7 all the way back in 2016. If this actually comes to fruition, it will be another long-awaited feature that users will enjoy.

There's always a catch with this kind of rumor: the AOD was originally rumored for the iPhone 13 Pro and iPhone 13 Pro Max, but concerns about reduced battery life reportedly caused the feature to be pulled.

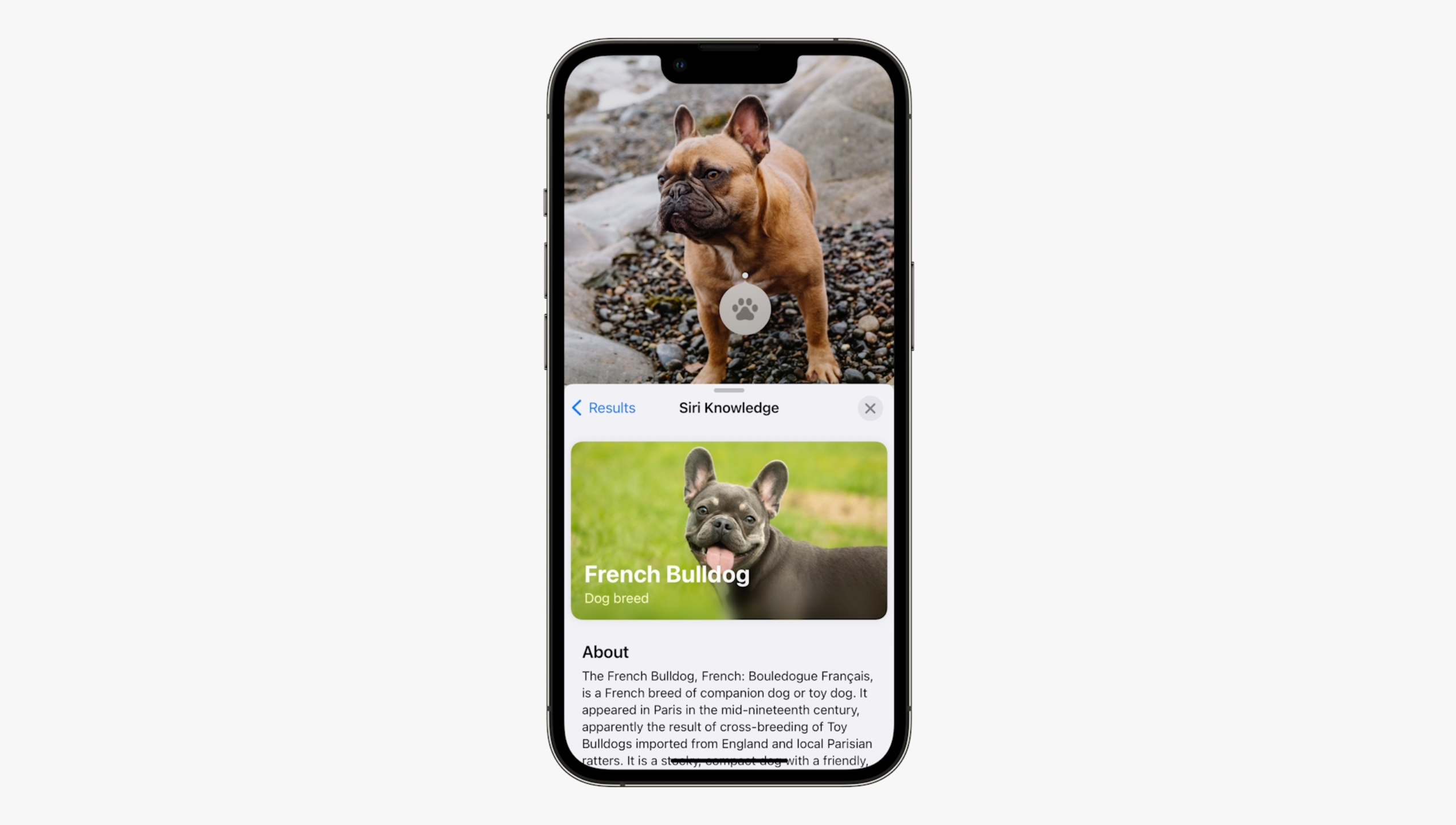

Google Lens, Google's image recognition technology, was introduced during the keynote at Google I/O 2017. Since then, Google has kept adding to the service, which lets you point your camera at something and get information about it.

Scene exploration is a new feature that will let you identify multiple products around you at once with your phone's camera. With just a tap, you can learn more about the products, including their ratings, and filter the results. This feature isn't available just yet, but it is in the works.

Instead of working with a third party, Apple has developed its own image recognition technology, called Live Text and Visual Look Up. The first versions of these features were introduced with iOS 15 last year. Being able to translate a sign while you're traveling in a foreign country is one of the benefits of this "on-device intelligence" on your phone.

Visual Look Up is getting a lot of love in iOS 16, as it is being expanded to recognize birds, insects, and statues. iOS 16 also lets you tap and hold on the subject of an image, lift it from the background, and then place it in a different app.

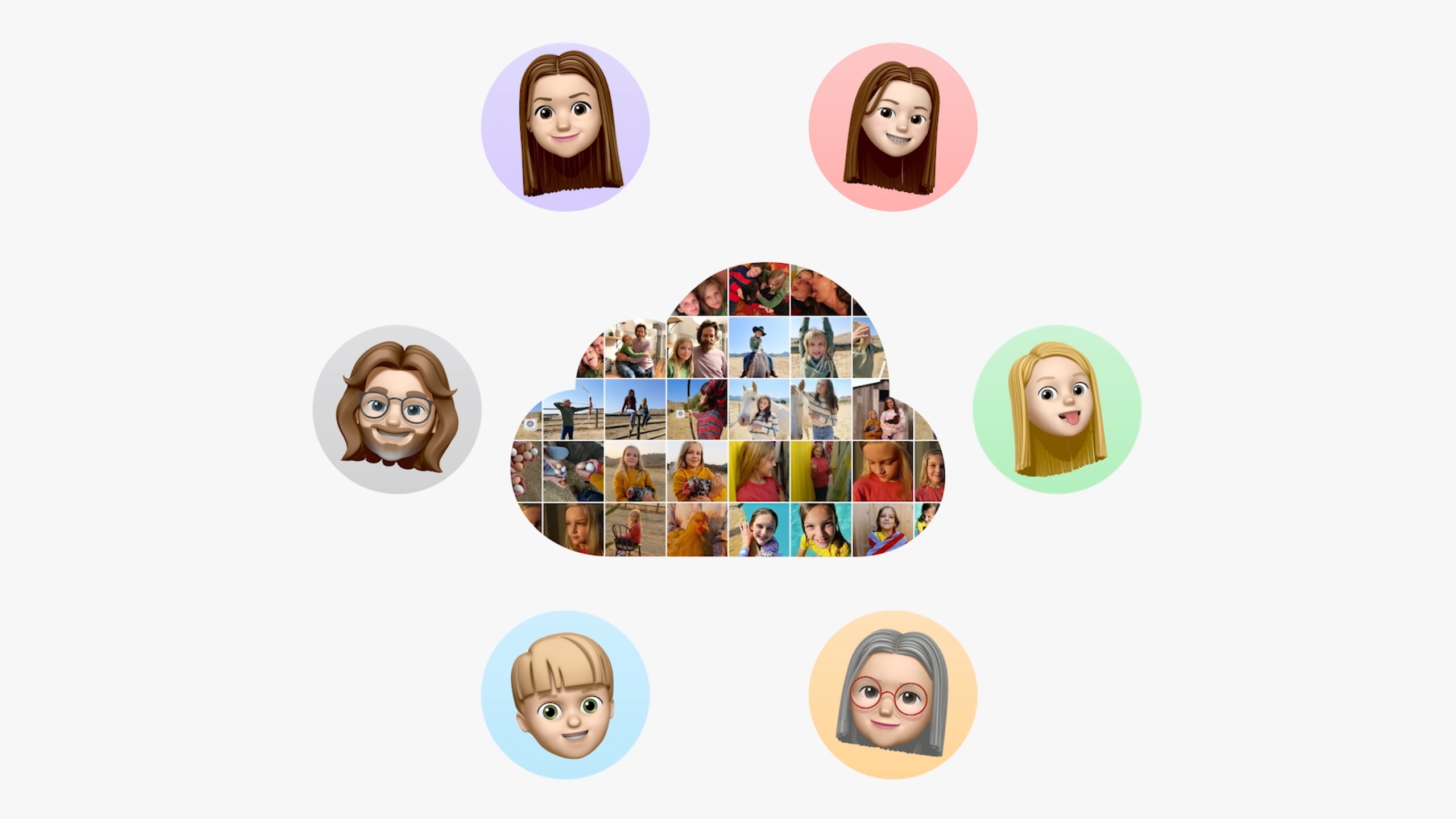

Sharing photos with friends and family has been harder than it should be, at least on iOS, because there isn't a single place to do it. Google, meanwhile, announced Suggested Sharing and shared libraries with version 3.0 of the Google Photos app. The most recent version of Google Photos is 5.92, so these features have been available for quite a while.

There are some potentially frustrating aspects to the iCloud Shared Photo Library. The first is that you can't share it with more than five other people. It's great to have one place to share photos and videos with your friends and family, but chances are you will run into the five-person limit very quickly. It's not an entirely fair comparison, but Google Photos allows an unlimited number of "contributors" to an album, and it also offers partner sharing.

Eight years have passed since the release of the Google Fit app, which let users log their workouts and health metrics in one place. For as much love as the Apple Watch gets, it's amazing that it took until iOS 16 for the Fitness app to work as a standalone app without one. Even so, the standalone Fitness app is likely to be an "oh, that's cool, but I won't use it" kind of feature for many people.

If you don't own an Apple Watch, you probably already have another health and fitness tracking app on your phone. That mirrors what happened with Google Fit for a long time, until Google recently started caring more about it.

No, we're not talking about Elon Musk's latest push here. Compared to almost every other email app on mobile, Apple's built-in Mail client is a pale imitation, and the macOS version of Mail.app is completely different from the one on your phone. With iOS 16, some long-awaited features, such as scheduled send and undo send, are coming to Apple's mobile email app.

Some of these features originated in Google's Inbox app, and they have been in the Gmail mobile and desktop apps for a long time; scheduled sending, for example, landed in Gmail in 2019. We're happy to see new features coming to the iOS client, if only because most third-party email apps are not easy to use.

Apple Maps arrived in September 2012 and has changed a lot since then. You no longer have to worry about Maps guiding you the wrong way, and if you haven't used it in a while, its information is more robust than you may think. Until iOS 16, however, you couldn't add multiple stops to a route in Maps.

Being able to add stops in Maps makes it much easier to plan out your trips across your Apple devices. The lack of multi-stop routing is one reason some people preferred Google Maps, which does more than just take you from point A to point B.

My perception changed in part after my time with the Samsung Galaxy Tab S8 Ultra. I was an avid fan of the iPad Pro and someone who regularly pooh-poohed the Android tablet experience, but I realized that the iPad Pro and iPadOS needed proper windowing. This feature has been available on Android tablets for a long time, and it has even expanded to foldable phones with an iPad Mini-like screen.

Stage Manager provides better support for plugging into a monitor and brings a form of app windowing to the iPad. It's not a full desktop-like replacement, but it is something for iPad users. Only those with an M1-powered iPad will be able to use it when iPadOS 16 is released this fall.

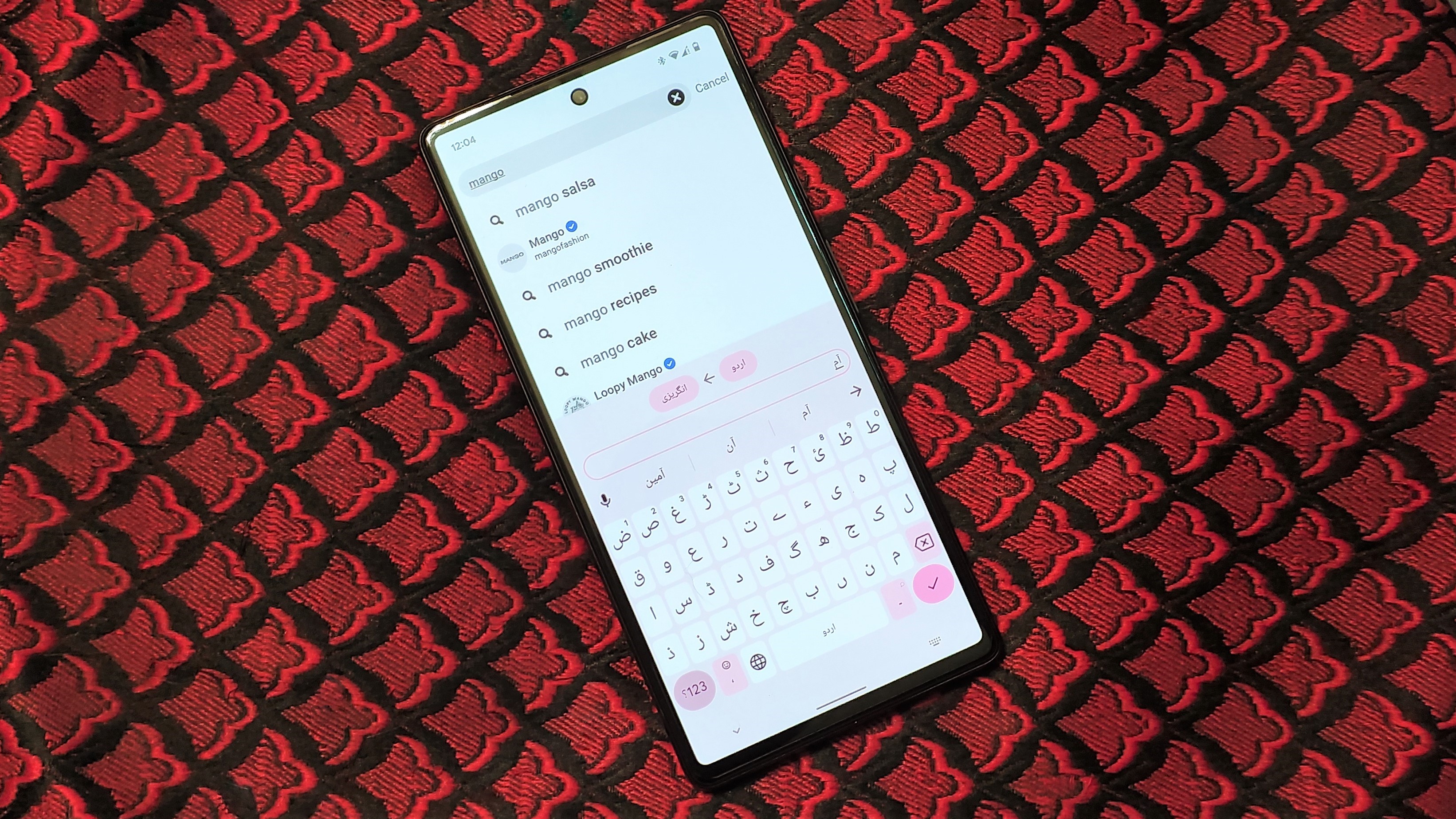

People tend to be either strongly for or strongly against haptic feedback when typing on their phones. When setting up a new phone, some turn it off, while others want as much feedback as possible. For one reason or another, even though the iPhone arguably has the best haptic engine in any phone, haptic feedback hasn't been available on the stock keyboard until now.

In the early days of the iPhone, there were jailbreak tweaks that added keyboard feedback, but you had to jump through a bunch of hoops just to feel your phone buzz. iOS 16 finally adds an option to enable haptic feedback for the stock keyboard in Settings, although it is not enabled by default.

"Machine learning" is one of the newest buzzwords in the phone world. Here's how Google defines it:

Machine learning is a subset of artificial intelligence that allows a system to autonomously learn and improve using neural networks and deep learning without being explicitly programmed.

Your phone can learn from how you use it and create a better, more personalized experience. We've had dictation on our devices for a long time, but it has gotten much smarter, so you no longer have to manually find the right symbol to use. Just tap the dictation button and let your phone do all the work for you.

One of the new features in iOS 16 is an on-device dictation experience that lets you move fluidly between voice and touch, switching between speaking and typing as you go. It's not clear whether Apple will limit these features to specific devices or make them available on any iPhone that can run iOS 16.

So, is iOS becoming more like Android? The answer is obviously yes, and the gap between the two operating systems is narrowing. It's easy to complain about Apple adopting a bunch of features Android had first, but the truth is these additions are almost always a good thing. Features like these shouldn't make or break the choice between an open-source platform and a closed-source one.

A lot of people use both platforms on a daily basis. It's not clear whether Apple will go through the same growing pains other companies have. Android, for example, removed Lock Screen widgets in Android 5 to make way for an always-on display, replacing them with a different kind of Lock Screen.

Apple still has a long way to go in some areas, while there are other areas where Google still falls short. Either way, we're happy to see these changes come to iPhone users, even if it means we'll have less to complain about in the future.