The ability of DALL-E 2 to draw/paint/imagine just about anything is something that the artificial intelligence world is still figuring out how to deal with. The model it is working on is even better than the one it has been working on.

Let's slow down and unpack that real quick because Imagen is a text-to-image generator built on large transformer language models.

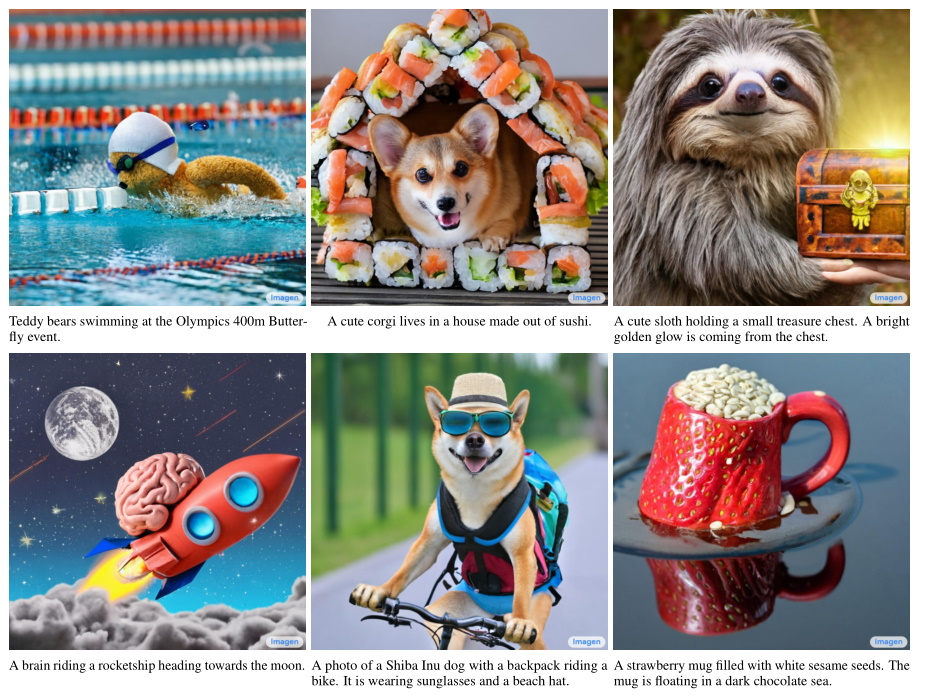

Text-to-image models take text inputs like a dog on a bike and produce a corresponding image, something that has been done for years but has seen huge jumps in quality and accessibility.

Part of that is using diffusion techniques, which basically start with a pure noise image and slowly refine it bit by bit until the model thinks it can make it look more like a dog on a bike than it already does. This was an improvement over top-to-bottom generators that could get it wrong on first guess, and others that could easily be led astray.

The other part is improved language understanding through large language models using the transformer approach, the technical aspects of which I won't get into here, but it and a few other recent advances have led to convincing language models.

The image is from research done by the internet company, Google.

The imagen starts by generating a small image and then passes on two super resolution images to bring it up to1024 This isn't like normal upscaling, as it uses the original image as a basis to create new details in harmony with the smaller one.

If you have a dog on a bike and it has a small eye in the first image, you might want to look at it differently. Not much room for expression. On the second image, it's 12. Where does the detail come from? The artificial intelligence knows what a dog's eye looks like, so it draws more detail. This happens again when the eye is done again, but at a smaller size. At no point did the artificial intelligence have to pull 48 by the dog eye. It started with a rough sketch, filled it out in a study, and then went to town on the final canvas.

This isn't new, and in fact artists working with artificial intelligence use this technique to create pieces that are larger than what the machine can handle. If you split a canvas into several pieces and super-resolution all of them separately, you end up with something much larger and more intricately detailed. An example from an artist.

The previously posted image is a whopping 24576 x 11264 pixels. There is no upscaling. In fact, I went far past @letsenhance_io's limits.

The image is what I call "3rd generation" (pun intended), w/ its 420 slices regenerated from a previous image already regen'd once.

2/10 pic.twitter.com/QG2ZcccQma

— dilkROM Glitches (@dilkROMGlitches) May 17, 2022

According to researchers from the company, the advances they claim with Imagen are several. They say that existing text models can be used for the text portion, and that their quality is more important than simply increasing visual fidelity. It makes sense since a detailed picture of nonsense is worse than a slightly less detailed picture of exactly what you asked for.

In the paper describing Imagen, they compare results with DALL-E 2 doing a panda making latte art. Neither was able to show the opposite in all of the attempts. It is a work in progress.

The image is from research done by the internet company, Google.

In tests of human evaluation, Imagen came out on top, both on accuracy and fidelity. This is quite subjective, but to even match the perceived quality of DALL-E 2, which until today was considered a huge leap ahead of everything else, is pretty impressive. I will only add that none of these images from any generator will last more than a cursory look before people notice they are generated or have serious suspicions.

OpenAI is a step or two ahead of the internet giant. DALL-E 2 is more than a research paper, it is a private alpha with people using it. The fabulously profitable internet giant has yet to attempt productizing its text-to-image research, while the company with "open" in its name has focused on productizing its research.

OpenAI’s new DALL-E model draws anything — but bigger, better and faster than before

It's clear from the choice DALL-E 2's researchers made that they wanted to remove any content that might violate their own guidelines. If the model tried, it couldn't make something like that. Some large datasets known to include inappropriate material were used by the team. The researchers write aboutLimitations and Societal Impact in an insightful section on the imagen site.

Downstream applications of text-to-image models are varied and may impact society in complex ways. The potential risks of misuse raise concerns regarding responsible open-sourcing of code and demos. At this time we have decided not to release code or a public demo.

The data requirements of text-to-image models have led researchers to rely heavily on large, mostly uncurated, web-scraped datasets. While this approach has enabled rapid algorithmic advances in recent years, datasets of this nature often reflect social stereotypes, oppressive viewpoints, and derogatory, or otherwise harmful, associations to marginalized identity groups. While a subset of our training data was filtered to remove noise and undesirable content, such as pornographic imagery and toxic language, we also utilized LAION-400M dataset which is known to contain a wide range of inappropriate content including pornographic imagery, racist slurs, and harmful social stereotypes. Imagen relies on text encoders trained on uncurated web-scale data, and thus inherits the social biases and limitations of large language models. As such, there is a risk that Imagen has encoded harmful stereotypes and representations, which guides our decision to not release Imagen for public use without further safeguards in place

Some people might say that Google is afraid of its artificial intelligence not being politically correct, but that is an uncharitable and short-sighted view. An artificial intelligence model is only as good as the data it is trained on, and not every team can spend the time and effort it might take to remove the really awful stuff these scrapers pick up as they assemble multi-million-images or multi-billion-word datasets.

The research process exposes how the systems work and provides an unfettered testing ground for identifying these and other limitations. How else would we know that an artificial intelligence can draw hair styles common among Black people? When asked to write stories about work environments, the artificial intelligence makes the boss a man? An artificial intelligence model is working perfectly and has successfully learned the biases of the media it is trained to ignore. Not like people!

Unlearning systemic bias is a lifelong project for many humans, but an artificial intelligence can remove the content that caused it to behave badly in the first place. There will be a need for an artificial intelligence to write in the style of a racist, sexist pundit in the future, but for now the benefits are small and the risks large.

Like the others, Imagen is still in the experimental phase, not ready to be employed in anything other than a strictly human-supervised manner. I'm sure we'll learn more about how and why it works when the capabilities are more accessible.

When big AI labs refuse to open source their models, the community steps in