For some in the artificial intelligence industry, the ultimate achievement is a system with artificial general intelligence (AGI): the ability to understand and learn any task that a human can. It has been suggested that AGI would bring about systems with the ability to reason, plan, learn, represent knowledge, and communicate in natural language.

Not every expert is convinced that AGI is a realistic goal. Still, DeepMind's release of an artificial intelligence system called Gato could be seen as a step toward it.

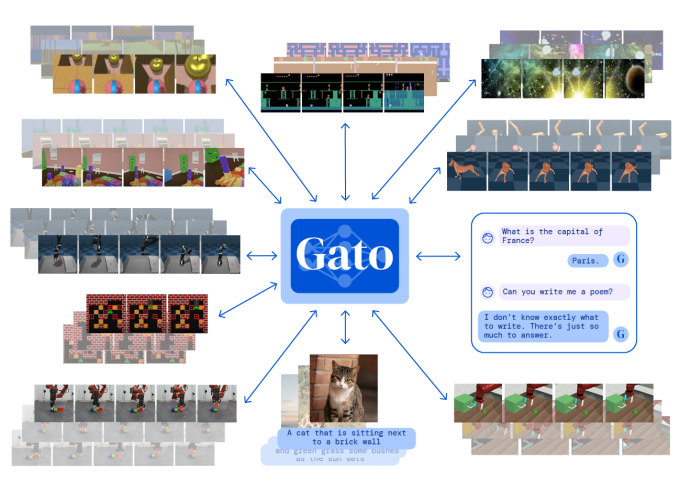

Gato is a system that can be taught to perform many different types of tasks. Researchers at DeepMind trained Gato to complete 604 tasks, including captioning images, engaging in dialogue, stacking blocks with a real robot arm, and playing Atari games.

Jack Hessel, a research scientist at the Allen Institute for Artificial Intelligence, points out that a single AI system that can solve many tasks is not new. For example, Google recently began using a system called MUM (multitask unified model) in its search engine. MUM can handle text, images, and videos, and performs tasks ranging from finding interlingual variations in the spelling of a word to relating a search query to an image. What could be newer here, Hessel says, is the diversity of the tasks tackled and the training method.

DeepMind's Gato architecture.

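Hessel said there is already evidence that single models can handle a wide range of inputs. The core question, in his view, is whether the tasks complement each other. In the less interesting case, the model implicitly separates the tasks before solving them: "If I detect task A as an input, I will use subnetwork A." Training on A and B separately could then achieve similar performance. If training on A and B jointly leads to improvements for either or both, he said, things are more exciting.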

Gato learned by example, ingesting billions of words, images from real-world and simulated environments, button presses, and more in the form of tokens. These tokens represented the data in a way Gato could understand, enabling the system to, for example, tease out the mechanics of Breakout, or work out which combination of words in a sentence might make sense.
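To make the idea of turning mixed data into tokens more concrete, here is a minimal Python sketch. It is not DeepMind's tokenizer: the vocabulary size, the number of bins, the mu-law constants, the toy word vocabulary, and every function name are assumptions chosen for illustration only.

```python
import math

# Illustrative constants (assumptions, not confirmed Gato settings).
TEXT_VOCAB_SIZE = 32_000  # ids 0..31999 are reserved for text tokens
NUM_BINS = 1_024          # number of buckets for continuous values
MU, M = 100.0, 256.0      # mu-law companding constants

# Hypothetical toy vocabulary; a real system would use a subword tokenizer.
TOY_VOCAB = {"stack": 0, "the": 1, "red": 2, "block": 3}


def tokenize_text(words):
    """Map each known word to its integer id."""
    return [TOY_VOCAB[w] for w in words]


def tokenize_continuous(x):
    """Squash a real number with mu-law, clip to [-1, 1], then bucket it."""
    squashed = math.copysign(
        math.log(abs(x) * MU + 1.0) / math.log(M * MU + 1.0), x
    )
    clipped = max(-1.0, min(1.0, squashed))
    bucket = min(int((clipped + 1.0) / 2.0 * NUM_BINS), NUM_BINS - 1)
    return TEXT_VOCAB_SIZE + bucket  # shift past the text id range


def tokenize_button(button_id):
    """Give discrete actions their own id range after the continuous bins."""
    return TEXT_VOCAB_SIZE + NUM_BINS + button_id


def tokenize_episode(words, sensor_values, button_ids):
    """Flatten text, sensor readings, and button presses into one sequence."""
    tokens = tokenize_text(words)
    tokens += [tokenize_continuous(v) for v in sensor_values]
    tokens += [tokenize_button(b) for b in button_ids]
    return tokens


episode = tokenize_episode(["stack", "the", "red", "block"], [0.0, 5.0], [3])
print(episode)
```

Whatever the exact scheme, the point is that words, sensor readings, and button presses all end up as integers in one flat sequence, which is the only kind of input a Transformer consumes.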

Gato doesn't necessarily do these tasks well. When chatting with a person, the system often responds with a superficial or factually incorrect reply, such as "Marseille" in response to "What is the capital of France?" When captioning pictures, Gato misgenders people. And the system correctly stacks blocks using a real-world robot only 60% of the time.

Even so, DeepMind reports that on 450 of the 604 tasks, Gato achieves more than half of an expert's score.

Matthew Guzdial, an assistant professor at the University of Alberta, said that Gato is a big deal for those who believe general systems are needed. He acknowledged that general models have benefits in terms of their performance on tasks outside of their training data, but said he is personally more in the camp of many small models being more useful.

Architecturally, Gato isn't dramatically different from many of the artificial intelligence systems in production today. Like OpenAI's GPT-3, it is a Transformer. Introduced in 2017, the Transformer has become the architecture of choice for complex reasoning tasks.

Gato learned a lot of tasks.

Gato is orders of magnitude smaller than GPT-3 in terms of parameter count. Parameters are the parts of the system learned from training data, and they essentially define the system's skill on a problem. Gato has about 1.2 billion, while GPT-3 has more than 170 billion.
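The size gap can be sanity-checked with a common rule of thumb that a Transformer has roughly 12 × layers × width² parameters. The sketch below is a back-of-the-envelope estimate only: the layer counts and widths are assumptions taken from publicly reported configurations, the function name is hypothetical, and the rule ignores embeddings and biases, so the results will not match official figures exactly.

```python
def approx_transformer_params(num_layers, d_model):
    """Rough parameter count for a standard Transformer stack.

    Per layer: about 4 * d_model^2 for the attention projections plus
    about 8 * d_model^2 for a feed-forward block with a 4x hidden size.
    Embeddings, biases, and layer norms are ignored.
    """
    return 12 * num_layers * d_model ** 2


# Assumed configurations (not stated in this article).
gato_params = approx_transformer_params(num_layers=24, d_model=2048)
gpt3_params = approx_transformer_params(num_layers=96, d_model=12288)

print(f"Gato estimate:  {gato_params / 1e9:.1f} billion")
print(f"GPT-3 estimate: {gpt3_params / 1e9:.1f} billion")
print(f"Ratio:          {gpt3_params / gato_params:.0f}x")
```

Under these assumptions the larger model comes out more than a hundred times bigger, which is what "orders of magnitude" means here: roughly two powers of ten.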

DeepMind researchers kept Gato purposefully small so that the system could control a robot arm in real time. But they hypothesize that, if scaled up, Gato could tackle any task, behavior, or embodiment of interest.

Even if that turns out to be the case, several hurdles would have to be overcome, among them Gato's inability to learn continuously. Like most Transformer-based systems, Gato's knowledge of the world is grounded in its training data and remains static. Ask Gato a date-sensitive question and it would likely respond incorrectly.

The Transformer, and Gato by extension, has another limitation in its context window: the amount of information the system can "remember" in the context of a given task. Even the best Transformer-based language models can't write a long essay without forgetting key details and losing track of the plot. The forgetting happens in any task, whether writing or controlling a robot, which is why some experts have called it the "Achilles' heel" of machine learning.
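A fixed-size context window can be pictured as a buffer that silently drops its oldest entries. The following Python sketch is a simplified illustration, not how Gato or any particular model is implemented; the `ContextWindow` class and the tiny window size of four tokens are hypothetical.

```python
from collections import deque


class ContextWindow:
    """Keep only the most recent `max_tokens` tokens; older ones fall out."""

    def __init__(self, max_tokens):
        # A deque with maxlen discards items from the left when it is full.
        self.tokens = deque(maxlen=max_tokens)

    def append(self, token):
        self.tokens.append(token)

    def visible(self):
        """Return the tokens the model could still 'see'."""
        return list(self.tokens)


window = ContextWindow(max_tokens=4)
for word in ["the", "hero", "lost", "a", "key", "early", "on"]:
    window.append(word)

# Only the last four words remain; "hero" has been forgotten.
print(window.visible())
```

Once "hero" has left the window, nothing later in the sequence can refer back to it, which is the same effect that makes a long essay or a long robot-control episode drift.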

Mike Cook, a member of the Knives and Paintbrushes research collective, cautions against assuming Gato is a path to truly general-purpose artificial intelligence.

Cook told TechCrunch that he thinks the result is open to misinterpretation. It sounds exciting that the artificial intelligence can do many tasks that seem very different, because to us writing text sounds very different from controlling a robot. In reality, he said, this isn't all that different from GPT-3 understanding the difference between English text and Python code. That is not to say it is easy, but to an outside observer it might sound as though the artificial intelligence could also make a cup of tea or easily learn another ten or fifty tasks, and it cannot. Current approaches to large-scale modelling are already known to allow a system to learn multiple tasks at once. Cook called Gato a nice bit of work, but said it doesn't strike him as a major stepping stone on the way to anything.