There is far too much machine learning and artificial intelligence research for anyone to read it all. The Perceptron column aims to collect some of the most relevant recent discoveries and papers and explain why they matter.

A new study shows how bias can start with the instructions given to the people recruited to annotate data. Annotators pick up on patterns in the instructions, which condition them to contribute annotations that then become over-represented in the data.

Many artificial intelligence systems today learn to make sense of images, videos, text, and audio from examples that have been labeled by annotators. The labels let the systems extrapolate the relationships they find in those examples to data they haven't seen before.

This works well, but annotators bring biases to the table that can bleed into the trained system. Studies show, for example, that the average annotator is more likely to label phrases in African-American Vernacular English (AAVE) as toxic than phrases in other varieties of English.

It turns out that annotators might not be solely to blame for the presence of bias in training labels. Researchers from Arizona State University and the Allen Institute for Artificial Intelligence looked into whether the instructions that data set creators write as guides for annotators might themselves be a source of bias. Such instructions usually include a description of the task along with several examples.

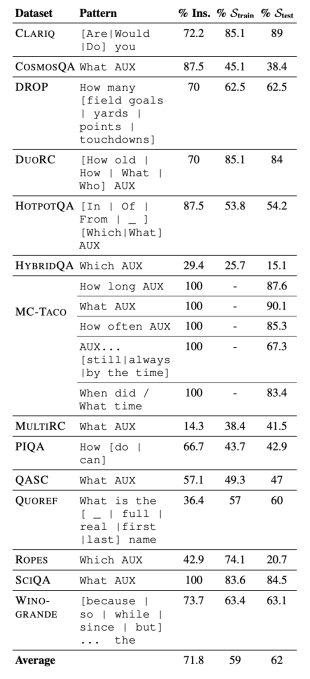

Image credit: Parmar et al.

The researchers looked at data sets used to measure the performance of natural language processing systems. They found that the instructions given to the annotators influenced them to follow specific patterns, which then spread to the data sets. For example, over half of the annotations in Quoref, a data set designed to test the ability of artificial intelligence systems to understand when two or more expressions refer to the same person, start with the phrase "What is the name".
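One simple way to look for this kind of pattern is to count how often annotations open with the same few words. The sketch below is an illustration only, not the method used in the study: the function name `leading_ngram_shares`, the four-word prefix length, and the toy questions are all assumptions made up for this example.

```python
from collections import Counter


def leading_ngram_shares(annotations, n=4):
    """Return the share of annotations that begin with each n-word prefix."""
    prefixes = Counter()
    for text in annotations:
        words = text.lower().split()
        # Annotations shorter than n words have no n-word prefix and are skipped.
        if len(words) >= n:
            prefixes[" ".join(words[:n])] += 1
    total = len(annotations)
    if total == 0:
        return {}
    return {prefix: count / total for prefix, count in prefixes.items()}


# Made-up questions for illustration only; they are not taken from Quoref.
toy_annotations = [
    "What is the name of the person who wrote the letter?",
    "What is the name of the town she moved to?",
    "What is the name of the band he joined?",
    "Who lent the narrator the car?",
]

shares = leading_ngram_shares(toy_annotations)
top_prefix = max(shares, key=shares.get)
print(top_prefix, shares[top_prefix])
```

A prefix that accounts for a large share of the annotations and also appears in the instruction examples would be a candidate for the kind of pattern the researchers describe.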

The finding suggests that systems trained on biased instruction/annotation data might not perform as well as initially thought. The authors found that instruction bias can lead to overestimates of system performance and that systems often fail to generalize beyond instruction patterns.

The silver lining is that large systems were less sensitive to instruction bias. Still, the research is a reminder that people, and the systems trained on their annotations, are susceptible to picking up biases from sources that aren't always obvious. The challenge is to find those sources and mitigate their effects.

Scientists from Switzerland concluded that facial recognition systems aren't easily fooled by realistic morphed faces. The authors created a set of face morphs and tested them against four state-of-the-art facial recognition systems. They claimed that the morphs did not pose a significant threat.

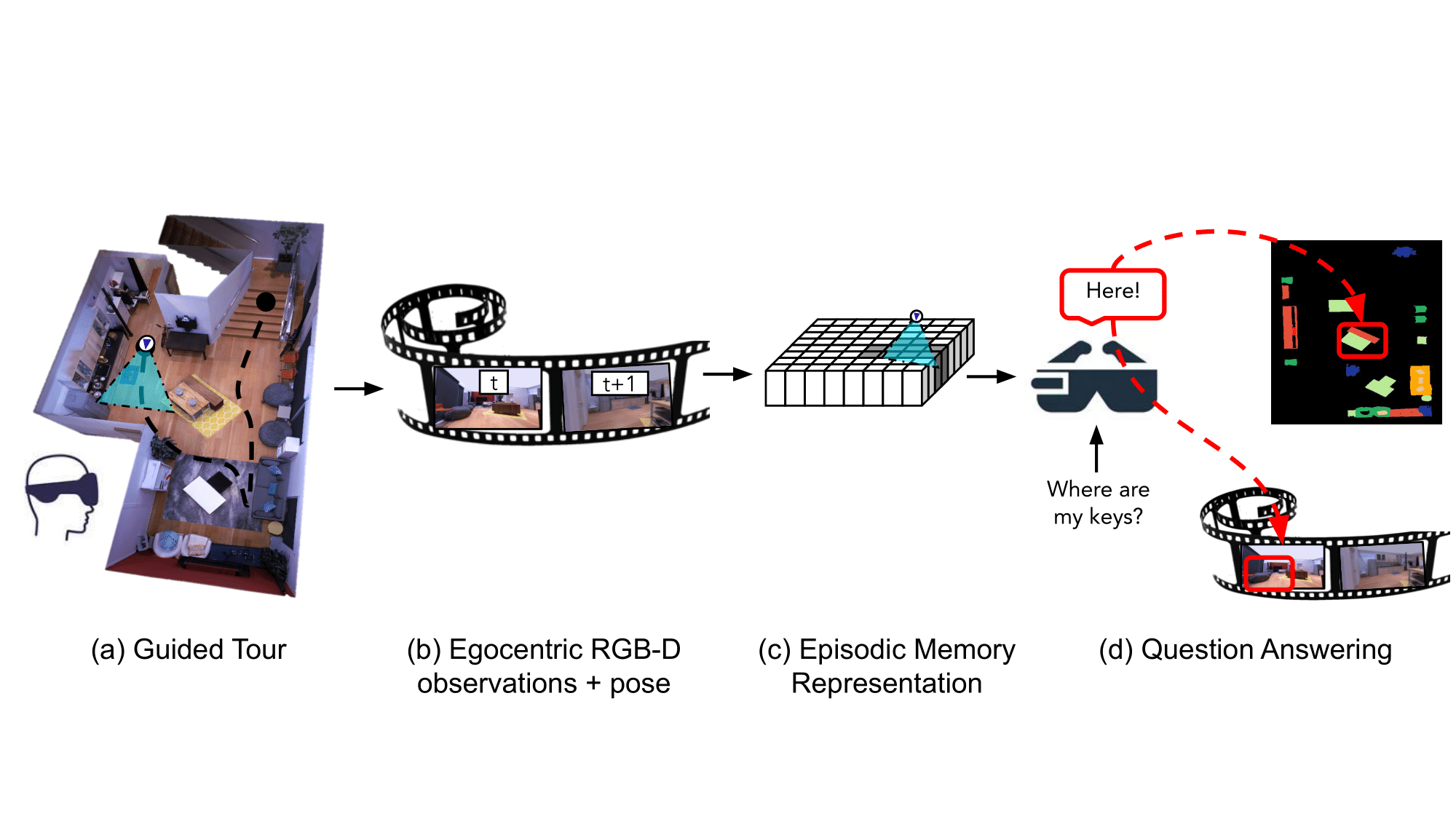

Researchers at Meta developed an artificial intelligence system that can remember the location and context of objects in order to answer questions about them. The work is part of Meta's Project Nazare initiative to develop augmented reality glasses that use artificial intelligence to analyze their surroundings.

Image credit: Meta

The system is designed to be used on any body-worn device that has a camera. For example, it can tell a wearer that their keys were on a side table in the living room.

Last October, Meta launched Ego4D, a long-term research project on egocentric perception, which it said was part of its plans to release fully featured augmented reality glasses in 2024. The company said at the time that it wanted to teach artificial intelligence systems to understand social cues, how the wearer's actions might affect their surroundings, and how hands interact with objects.

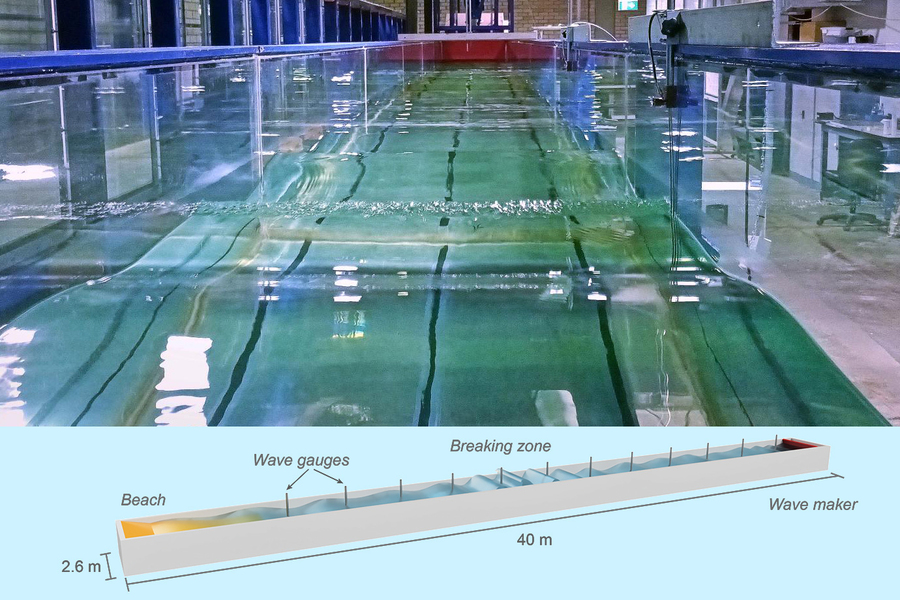

An artificial intelligence model has been used in an MIT study of waves. Wave models are needed both for building structures in and near the water and for representing how the ocean interacts with the atmosphere in climate models.

Image credit: MIT

The researchers trained a machine learning model on hundreds of wave instances in a 40-foot tank of water. By observing the waves, making predictions based on that empirical evidence, and comparing those predictions to the theoretical models, the artificial intelligence helped show where the models fell short.

A startup is being born out of research at EPFL, where Thibault Asselborn's PhD thesis on handwriting analysis has turned into a full-blown educational app. The app can identify habits and corrective measures from just 30 seconds of a kid writing on an iPad with a stylus. These are presented to the kid in the form of games that teach good habits.

The team says its scientific model and rigor are what set the app apart, and that some students come before class to practice.

Image credit: Duke University

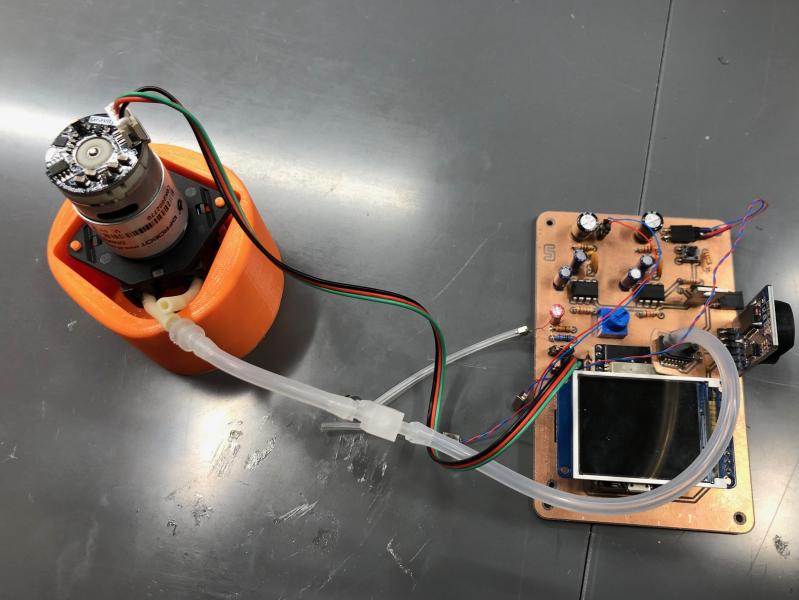

Hearing problems can be identified during routine screenings in elementary schools. As some readers may remember, these screenings use a device called a tympanometer, which must be operated by trained audiologists. If no audiologist is available, as can happen in an isolated school district, kids with hearing problems may never get the help they need.

The tympanometer built by the Duke team sends data to a phone app, where it is interpreted by an artificial intelligence model. If anything looks worrying, the child can receive further screening. It's not a replacement for an expert, but it's a lot better than nothing and may help identify hearing problems much earlier in places without the proper resources.