Scientists use models trained with machine learning to solve problems. How do we know if the solutions are trustworthy when the models are not able to explain their decisions to humans?

In drug discovery, for example, machine learning is used to sort through millions of potentially toxic compounds to determine which might be safe drug candidates.

According to Andrew White, associate professor of chemical engineering at the University of Rochester, there have been some high-profile accidents in computer science in which a model predicted quite well, but its predictions were not based on anything meaningful.

White and his lab have developed a new method that can be used with any machine learning model to better understand how the model arrived at a conclusion.

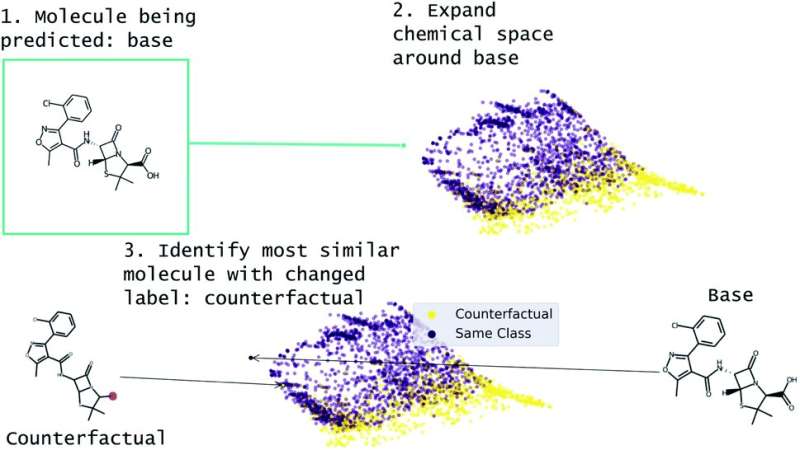

Counterfactuals can tell researchers the smallest change to a model's input features that would alter its prediction.

Counterfactuals can help researchers determine if a model is valid.

The paper, published in Chemical Science, shows how the new method, called MMACE, can be used to explain why a model makes a given prediction.
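The idea of a counterfactual, the smallest input change that alters a prediction, can be illustrated with a minimal model-agnostic sketch. It assumes a fixed list of candidate inputs, a black-box `predict` function and a `distance` function; `find_counterfactual`, `hamming` and `toy_model` are hypothetical names chosen for illustration, not MMACE's actual code or API, and the feature vectors are toy values.

```python
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")


def find_counterfactual(
    original: T,
    candidates: Sequence[T],
    predict: Callable[[T], int],
    distance: Callable[[T, T], float],
) -> Optional[T]:
    """Return the candidate closest to `original` whose predicted label differs.

    `predict` is treated as a black box: only its outputs are used, so any
    model (neural network, random forest, ...) could be plugged in.
    """
    base_label = predict(original)
    # Keep only the candidates that change the model's prediction.
    flipped = [c for c in candidates if predict(c) != base_label]
    if not flipped:
        return None  # no counterfactual exists among these candidates
    # The counterfactual is the flipped candidate requiring the smallest change.
    return min(flipped, key=lambda c: distance(original, c))


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of positions at which two equal-length feature vectors differ."""
    return sum(x != y for x, y in zip(a, b))


def toy_model(features: Sequence[int]) -> int:
    """Hypothetical classifier: label 1 ('toxic') if at least two flags are set."""
    return int(sum(features) >= 2)


x = (1, 1, 0, 0)
candidates = [(1, 0, 0, 0), (0, 0, 0, 0), (1, 1, 1, 0), (0, 1, 0, 1)]
print(find_counterfactual(x, candidates, toy_model, hamming))
```

Because the search only calls `predict`, the same function works with any model. Here the result is `(1, 0, 0, 0)`: it flips the toy prediction while differing from `x` in a single feature.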

The lab had to overcome several challenges. They needed a method that could be adapted to a wide range of machine-learning models. And because the number of possible candidate molecules is so large, it was difficult to find the most similar molecule for any given scenario.
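Finding "the most similar molecule" requires a similarity measure. A common choice in cheminformatics is Tanimoto similarity between binary molecular fingerprints. The sketch below assumes the fingerprints have already been computed (for example with a toolkit such as RDKit) and stores each one as a set of "on" bit indices; the molecule names and bit values are hypothetical, and this is not necessarily the exact measure used in the paper.

```python
from typing import Dict, FrozenSet, List, Tuple


def tanimoto(a: FrozenSet[int], b: FrozenSet[int]) -> float:
    """Tanimoto similarity: shared 'on' bits divided by all 'on' bits."""
    if not a and not b:
        return 1.0  # treat two empty fingerprints as identical
    return len(a & b) / len(a | b)


def rank_by_similarity(
    query: FrozenSet[int], library: Dict[str, FrozenSet[int]]
) -> List[Tuple[str, float]]:
    """Score every library entry against the query, most similar first."""
    scored = [(name, tanimoto(query, fp)) for name, fp in library.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical fingerprints for illustration only.
query = frozenset({1, 4, 7, 9})
library = {
    "mol_a": frozenset({1, 4, 7}),
    "mol_b": frozenset({2, 3, 5}),
    "mol_c": frozenset({1, 4, 7, 9, 12}),
}
for name, score in rank_by_similarity(query, library):
    print(name, round(score, 2))
```

Every candidate has to be scored against the query, so the cost grows with the size of the candidate pool; with a very large number of possible molecules, this step quickly becomes the bottleneck.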

Aditi Seshadri, an undergraduate researcher in White's lab, helped the group solve this problem by suggesting STONED, a method that generates the candidate molecules that fuel counterfactual generation. Seshadri was able to contribute to the project through a Rochester summer research program.
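STONED builds on SELFIES, a string representation of molecules, and produces new molecules by randomly mutating those strings (replacing, inserting or deleting symbols). The toy sketch below mimics only that mutation step on a plain list of tokens. It does not use SELFIES or the STONED code, `mutate_once` and `generate_candidates` are hypothetical names, and unlike SELFIES mutants its outputs are not guaranteed to correspond to valid molecules.

```python
import random
from typing import List, Sequence, Set, Tuple


def mutate_once(
    tokens: Sequence[str], alphabet: Sequence[str], rng: random.Random
) -> Tuple[str, ...]:
    """Apply one random point mutation: insert, substitute or delete a token."""
    seq = list(tokens)
    op = rng.choice(("insert", "substitute", "delete"))
    if op == "insert" or len(seq) <= 1:
        # Insertion is also used for very short inputs so they never become empty.
        seq.insert(rng.randrange(len(seq) + 1), rng.choice(alphabet))
    elif op == "substitute":
        seq[rng.randrange(len(seq))] = rng.choice(alphabet)
    else:
        del seq[rng.randrange(len(seq))]
    return tuple(seq)


def generate_candidates(
    tokens: Sequence[str], alphabet: Sequence[str], n: int, seed: int = 0
) -> List[Tuple[str, ...]]:
    """Collect up to n distinct single-mutation variants that differ from the input."""
    rng = random.Random(seed)  # a fixed seed makes the run reproducible
    original = tuple(tokens)
    found: Set[Tuple[str, ...]] = set()
    attempts = 0
    while len(found) < n and attempts < 100 * n:  # cap attempts to avoid looping forever
        attempts += 1
        mutant = mutate_once(tokens, alphabet, rng)
        if mutant != original:  # a substitution can reproduce the original token
            found.add(mutant)
    return sorted(found)


print(generate_candidates(["C", "C", "O"], ["C", "N", "O"], n=5))
```

Each candidate differs from the starting sequence by a single edit, so the pool stays close to the original; in a counterfactual search such candidates would then be ranked by similarity and passed to the black-box model to look for ones whose prediction changes.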

White says his team is trying other databases to find more similar molecules and refining the definition of molecular similarity.

More information: Geemi P. Wellawatte et al, Model agnostic generation of counterfactual explanations for molecules, Chemical Science (2022). DOI: 10.1039/D1SC05259D

Journal information: Chemical Science

Citation: Using 'counterfactuals' to verify predictions of drug safety (2022, May 2) retrieved 2 May 2022 from https://phys.org/news/2022-05-counterfactuals-drug-safety.html